Setting up a Spark cluster with Docker


My system is OS X.

I've been learning Spark recently, and Docker makes building a cluster very convenient.

After installing Docker, set up a shared folder in VirtualBox so the VM can copy files from the host.

运行docker, 接着运行

boot2docker ssh 这样就进到虚拟机里面了, 因为需要用到github上的脚本, 可以拷贝你的ssh文件到虚拟机里面 cp /Users/name/.ssh/id_* ~/.ssh/ 接着输入你需要修改deploy/start_spark_cluster.sh 的start_master方法, 加入-p 8080:8080, 这是做端口映射,将容器的8080端口暴露给虚拟机,然后你在外部可以tce-ab输入s输入python.tcz 输入esc键 然后 输入 i 安装python 同样的还需要安装bash.tcz ,方法同上 接着就clone amplab的docker脚本了 git clone git@github.com:amplab/docker-scripts.git 注意: 这些更改到你退出虚拟机后不会被保存下来, 记得提出虚拟机前,在mac中再运行下boot2docker saveok,现在进到docker-scripts 进入apache-hadoop-hdfs-precise dnsmasq-precise spark-1.0.0这3个目录,运行里面的build脚本,运行docker images,就能看到相应的images

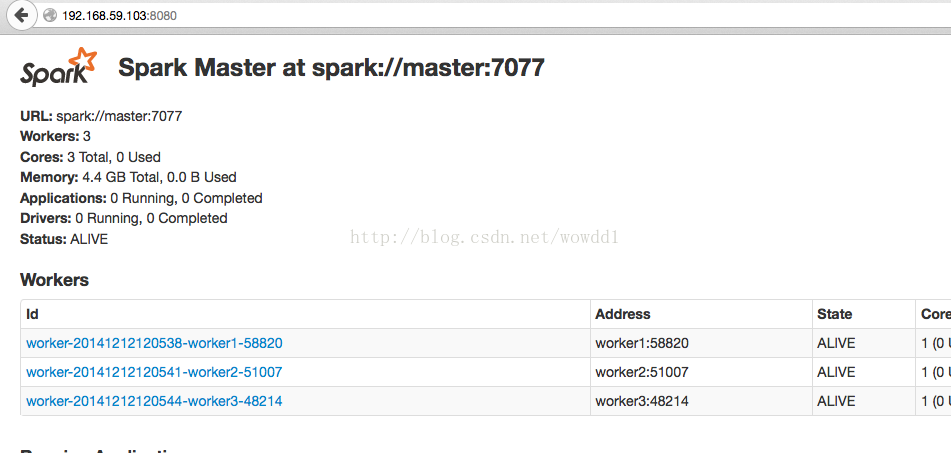

访问虚拟机,这样你在浏览器中可以看到集群的运行状态
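The image-building step can be scripted instead of visiting each directory by hand. This is a minimal sketch, assuming each of the three directories in the docker-scripts checkout contains an executable script named build (the post only says to run "the build script inside", so check the actual file name in your copy); build_images is a hypothetical helper name.

```shell
# Hypothetical helper: run the build script in each of the three image directories.
# ASSUMPTION: every directory contains an executable file called "build".
build_images() {
    # $1: path to the docker-scripts checkout (defaults to the current directory)
    root=${1:-.}
    for dir in apache-hadoop-hdfs-precise dnsmasq-precise spark-1.0.0; do
        echo "building images in $dir"
        # The subshell keeps the cd from changing the caller's directory;
        # stop at the first failing build instead of silently continuing.
        (cd "$root/$dir" && ./build) || return 1
    done
}
# Usage, inside the VM:  build_images ~/docker-scripts && docker images
```

Stopping at the first failure matters here because a later image may depend on one built earlier, so a skipped build would only surface later as a confusing error.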

The workers need port mappings too: 8080 is taken by the master, so worker1 and worker2 get 8081 and 8082, and so on.
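The port scheme can be expressed as a small helper that prints the -p option for each node. This is only an illustration: port_flag is a hypothetical name, not part of amplab's scripts, and the worker container port 8081 is taken from the docker ps output later in this post.

```shell
# Hypothetical helper: print the "docker run -p" option for a node.
# Master: host 8080 -> container 8080 (Spark master web UI).
# Worker N: host 8080+N -> container 8081 (Spark worker web UI).
port_flag() {
    # $1: "master" or "worker"; $2: worker number (1, 2, ...), unused for master
    case "$1" in
        master) printf '%s\n' "-p 8080:8080" ;;
        worker) printf '%s\n' "-p $((8080 + $2)):8081" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
}
```

For example, port_flag worker 2 prints -p 8082:8081, the option for worker2's docker run line. Where exactly that line sits in deploy/start_spark_cluster.sh depends on your copy of the script.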

The specific changes are shown in the figure below:

Now start the cluster by running sudo ./deploy/deploy.sh -i spark:1.0.0 -w 3 (three workers). The output looks like this:

*** Starting Spark 1.0.0 ***

starting nameserver container

started nameserver container: be7031996f698e0ec012448e3fbe65918e72902afc1fe5116fde90858b116a60

DNS host->IP file mapped: /tmp/dnsdir_12623/0hosts

NAMESERVER_IP: 172.17.0.23

waiting for nameserver to come up

starting master container

started master container: 58a8169e8a0afcda7b3bc063409a602c7accba7e77d1e82366390d0b1e6ce4ae

MASTER_IP: 172.17.0.24

waiting for master ......

waiting for nameserver to find master

starting worker container

started worker container: 8f5e14583c06419417ab918041b11f01278c471796a8b6d7824f6d9f40fb70cd

worker1 at port 8081, WORKER_IP is 172.17.0.25

starting worker container

started worker container: 118fa52a1662b061138151d907a649e4a34da755c5e84bf25d2a724102f728da

worker2 at port 8082, WORKER_IP is 172.17.0.26

starting worker container

started worker container: fc1905d24cdb4a97afad6c0f115e1700a9d8a13a610672a16b193739990b8e61

worker3 at port 8083, WORKER_IP is 172.17.0.27

waiting for workers to register .....

***********************************************************************

start shell via: sudo /home/docker/docker-scripts/deploy/start_shell.sh -i spark-shell:1.0.0 -n be7031996f698e0ec012448e3fbe65918e72902afc1fe5116fde90858b116a60

visit Spark WebUI at: http://172.17.0.24:8080/

visit Hadoop Namenode at: http://172.17.0.24:50070

ssh into master via: ssh -i /home/docker/docker-scripts/deploy/../apache-hadoop-hdfs-precise/files/id_rsa -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no root@172.17.0.24

/data mapped:

kill master via: sudo docker kill 58a8169e8a0afcda7b3bc063409a602c7accba7e77d1e82366390d0b1e6ce4ae

***********************************************************************

to enable cluster name resolution add the following line to _the top_ of your host's /etc/resolv.conf:

nameserver 172.17.0.23
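The last lines of the deploy output ask you to put the nameserver at the top of resolv.conf. A minimal sketch of doing that from a saved copy of the output, assuming you captured it to a file (for example with sudo ./deploy/deploy.sh -i spark:1.0.0 -w 3 | tee deploy.log); nameserver_ip and prepend_nameserver are hypothetical helper names.

```shell
# Hypothetical helper: pull the address out of the "NAMESERVER_IP: ..." line
# of a saved deploy.sh log.
nameserver_ip() {
    # $1: file containing the deploy.sh output
    sed -n 's/^NAMESERVER_IP: *//p' "$1" | head -n 1
}

# Hypothetical helper: make "nameserver IP" the first line of a resolv.conf-style file.
prepend_nameserver() {
    # $1: nameserver IP; $2: file to edit in place
    scratch=$(mktemp) || return 1
    # Write the new first line plus the old contents, then copy back over the
    # original so the file itself (and any symlink to it) stays in place.
    { printf 'nameserver %s\n' "$1"; cat "$2"; } > "$scratch" && cat "$scratch" > "$2"
    status=$?
    rm -f "$scratch"
    return "$status"
}
```

For example, prepend_nameserver "$(nameserver_ip deploy.log)" /etc/resolv.conf, run from a root shell since /etc/resolv.conf belongs to root.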

Run docker ps:

docker@boot2docker:~/docker-scripts$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fc1905d24cdb spark-worker:1.0.0 "/root/spark_worker_ 21 seconds ago Up 19 seconds 8888/tcp, 0.0.0.0:8083->8081/tcp evil_hopper

118fa52a1662 spark-worker:1.0.0 "/root/spark_worker_ 24 seconds ago Up 23 seconds 8888/tcp, 0.0.0.0:8082->8081/tcp sharp_yonath

8f5e14583c06 spark-worker:1.0.0 "/root/spark_worker_ 27 seconds ago Up 26 seconds 8888/tcp, 0.0.0.0:8081->8081/tcp berserk_einstein

58a8169e8a0a spark-master:1.0.0 "/root/spark_master_ 40 seconds ago Up 39 seconds 7077/tcp, 0.0.0.0:8080->8080/tcp insane_einstein

be7031996f69 amplab/dnsmasq-precise:latest "/root/dnsmasq_files 42 seconds ago Up 41 seconds trusting_sammet

The PORTS column shows that each of the ports has been mapped.
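To check the mappings without reading the table by eye, the PORTS column can be filtered. A minimal sketch, assuming the plain docker ps output format shown above (host ports published on 0.0.0.0); published_ports is a hypothetical name.

```shell
# Hypothetical helper: read `docker ps` text on stdin and print
# "host_port container_port" for every mapping published on 0.0.0.0.
published_ports() {
    grep -o '0\.0\.0\.0:[0-9]*->[0-9]*' |
        sed 's/^0\.0\.0\.0://; s/->/ /' |
        sort -n
}
# Usage, inside the VM:  docker ps | published_ports
```

For the cluster above this lists host port 8080 mapped to container port 8080 for the master, and host ports 8081 to 8083 each mapped to container port 8081 for the three workers. The nameserver container publishes nothing, so it does not appear.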

Outside the VM (on the Mac), run boot2docker ip to see the VM's IP address. Mine is:

bash-3.2$ boot2docker ip

The VM's Host only interface IP address is: 192.168.59.103

Then open a browser and visit, in turn:

192.168.59.103:8080 to view the master's status

192.168.59.103:8081 to view worker1's status

192.168.59.103:8082 to view worker2's status, and so on
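The list of URLs follows directly from the VM's IP and the port scheme. A sketch, assuming you pipe the output of boot2docker ip into it; webui_urls is a hypothetical helper, and it takes the last field of the last non-empty line so it handles the message shown above as well as a bare address.

```shell
# Hypothetical helper: read `boot2docker ip` output on stdin and print the
# master and worker web UI URLs.
webui_urls() {
    # $1: number of workers (3 in the deploy command above)
    ip=$(awk 'NF { last = $NF } END { print last }')
    printf 'http://%s:8080/\n' "$ip"                  # master
    i=1
    while [ "$i" -le "$1" ]; do
        printf 'http://%s:%d/\n' "$ip" $((8080 + i))  # worker i
        i=$((i + 1))
    done
}
```

For example, boot2docker ip | webui_urls 3 prints the master URL on port 8080 followed by the worker URLs on ports 8081 to 8083.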

To shut down the cluster, run sudo ./deploy/kill_all.sh spark

References:

http://ju.outofmemory.cn/entry/63486

https://github.com/amplab/docker-scripts

http://docs.docker.com/userguide/usingdocker/#seeing-docker-command-usage
