The Most Detailed Guide Yet: Building a DB2 pureScale Test Environment in VMware Virtual Machines

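Overview

The pureScale feature of DB2 involves several products, including GPFS, RSCT and TSA, so it is quite complex, and a small slip during setup can produce a long string of errors. This article walks through building a pureScale cluster on Linux inside VMware virtual machines. The cluster has two nodes, node01 and node02, each hosting one member and one CF. Commands to be typed are prefixed with # when run as root and with $ when run as the instance user. In the original post, commands are shown in blue and points that need emphasis in red.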

Test environment

Windows 7

FlashFXP

SecureCRT

DB2 10.5 FP8

SUSE Linux 11.4

VMware 10.0.1

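Steps

1. Install two SUSE virtual machines

Install two SUSE guests in VMware. The installation steps are the same for both; the points to watch are: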

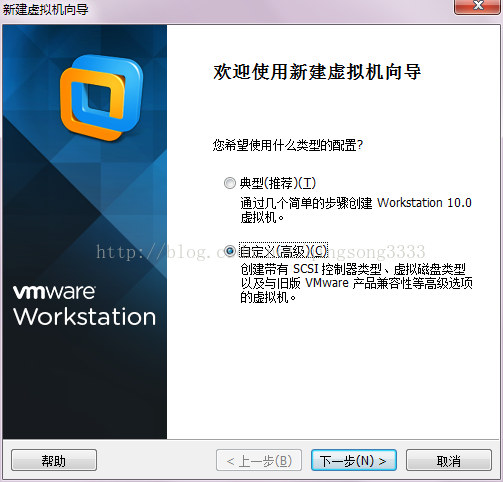

1.1 Choose custom installation

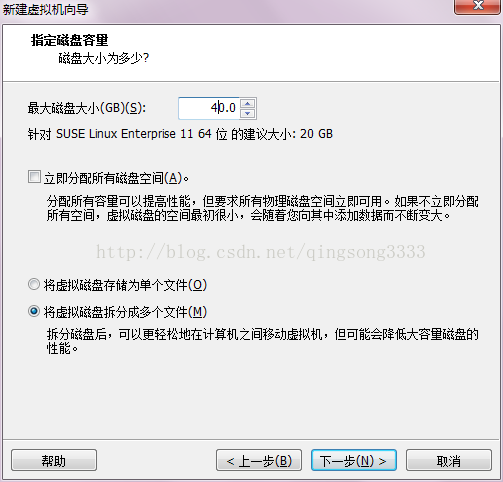

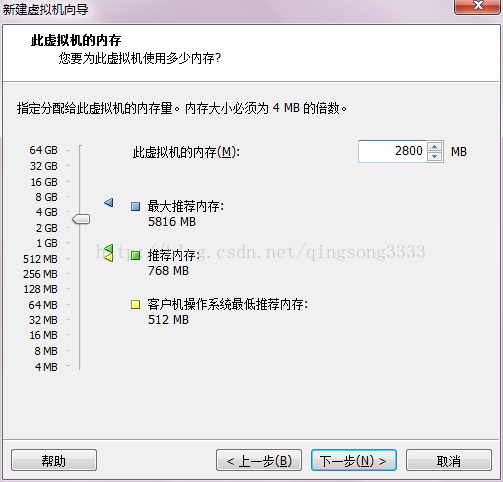

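1.2 Disk and memory size

When choosing disk and memory sizes, at least 40 GB of disk is recommended and memory must be at least 2560 MB. (I initially set memory to 2000 MB, and starting the CF kept failing.)

Accept the defaults for everything else, but check that the default network mode is NAT.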

2. Change the hostname and the hosts file (do this on both machines)

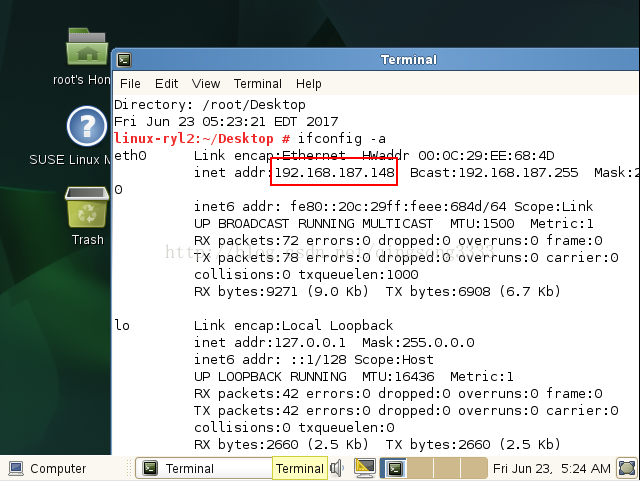

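2.1 Find the IP address:

After installation both virtual machines start automatically. Log in, right-click an empty area of the desktop -> Open in Terminal, and run "ifconfig -a":

Once you know the IP addresses, most of the remaining steps no longer need to be done in the VM console; connect to the virtual machines from Windows 7 with SecureCRT or PuTTY instead.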

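2.2 Change the hostname

Add the line export HOSTNAME=node01 at the top of /etc/rc.d/boot.localnet

On the other machine, set the hostname to node02. The change only takes effect after a reboot, so do not expect to see it immediately.

2.3 Edit /etc/hosts as follows:

Here 192.168.187.148 and 192.168.187.149 are the IP addresses of the two machines.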

# cat /etc/hosts

127.0.0.1 localhost

192.168.187.148 node01

192.168.187.149 node02
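As a rough sketch of how these two entries could be added from a script instead of by hand, the helper below appends a mapping only when the host name is not already present. The function name add_host_entry is my own, made up for illustration, and the demo writes to a temporary file; pointing it at /etc/hosts (as root) is an assumption about how you would use it.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME  (hypothetical helper, not part of any tool)
# Append "IP NAME" to FILE unless NAME already appears as a host name
# on a non-comment line.
add_host_entry() {
    file=$1 ip=$2 name=$3
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        return 0            # already mapped, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo on a scratch file so nothing on the system is touched.
demo=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo"
add_host_entry "$demo" 192.168.187.148 node01
add_host_entry "$demo" 192.168.187.149 node02
add_host_entry "$demo" 192.168.187.148 node01   # repeated call changes nothing
cat "$demo"
rm -f "$demo"
```

Running the same calls on both machines would keep the two hosts files identical, which is what the later SSH and GPFS steps rely on.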

3. Install the required packages (do this on both machines)

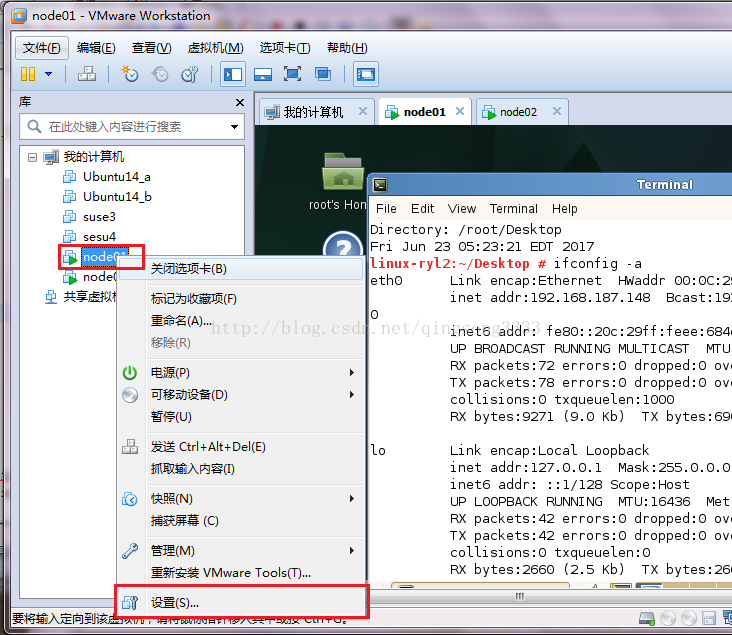

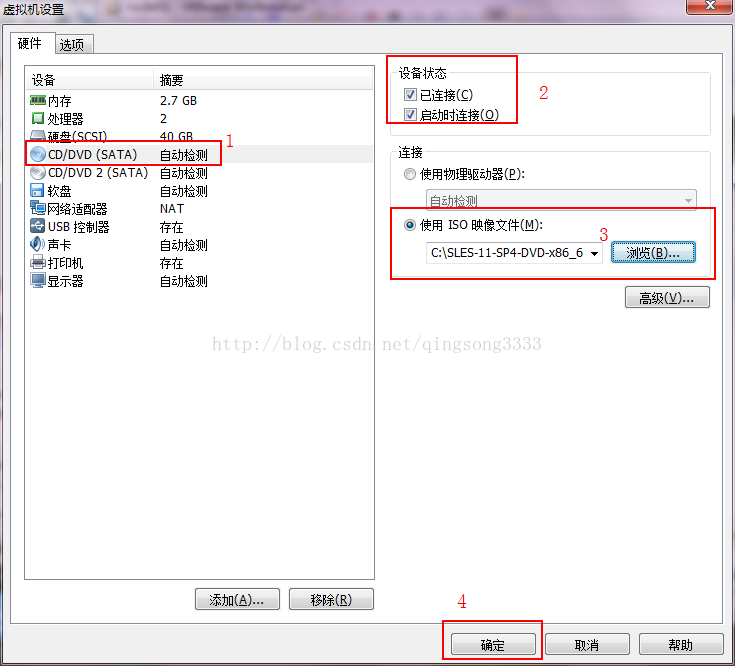

Before installing packages, put the "disc" into the virtual drive: right-click the virtual machine's name -> Settings

# zypper install pam-32bit

# zypper install glibc-locale-32bit

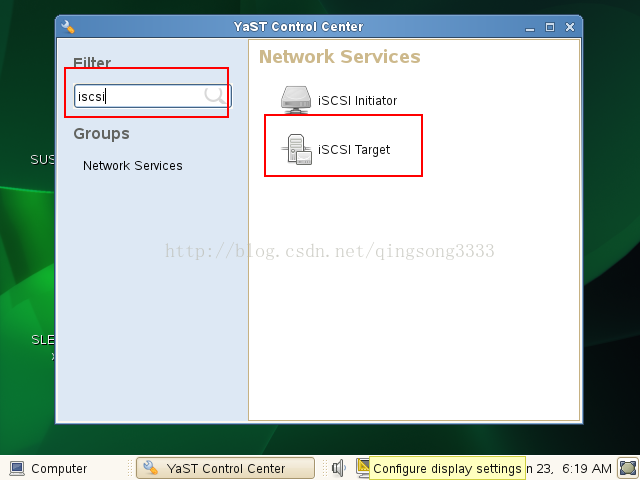

# zypper install iscsitarget

Some environment variables and files also need to be set up:

# echo "export DB2USENONIB=TRUE" >> /etc/profile.local

# cp -v /usr/src/linux-3.0.101-63-obj/x86_64/default/include/generated/autoconf.h /lib/modules/3.0.101-63-default/build/include/linux

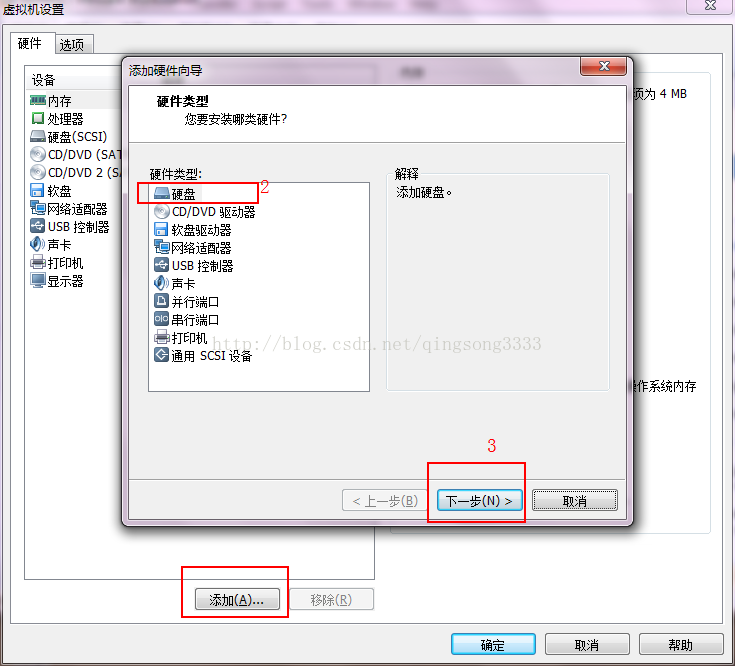

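4. Add disks (do this on both machines)

pureScale keeps its data on shared storage, so we need a shared disk. The plan is to add a disk to node01 and then share it with node02.

After that, accept the defaults; I used a size of 40 GB.

node02 also needs an extra disk. It is never used; its only purpose is to keep the device names the same on both sides, so a size of 0.01 GB is enough.

Once both disks are added, reboot both virtual machines.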

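5. Share the disk with iSCSI

The previous step added the disk; this step turns it into a shared disk that both node01 and node02 can access.

5.1 Check the disk state before sharing

Before sharing, check the disks on each of the two nodes. Each node has two disks, sda and sdb:

node01:~ # fdisk -l

Disk /dev/sdb: 42.9 GB, 42949672960 bytes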

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

Disk /dev/sda: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x000a4042

Device Boot Start End Blocks Id System

/dev/sda1 2048 4208639 2103296 82 Linux swap / Solaris

/dev/sda2 * 4208640 83886079 39838720 83 Linux

node02:~ # fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0007a70b

Device Boot Start End Blocks Id System

/dev/sda1 2048 4208639 2103296 82 Linux swap / Solaris

/dev/sda2 * 4208640 83886079 39838720 83 Linux

Disk /dev/sdb: 10 MB, 10485760 bytes

64 heads, 32 sectors/track, 10 cylinders, total 20480 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

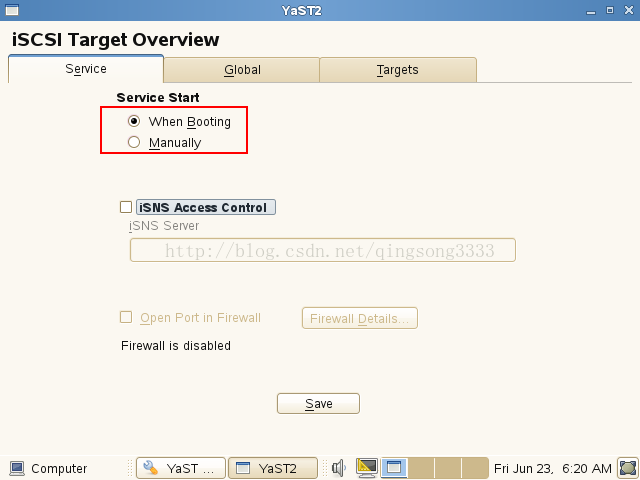

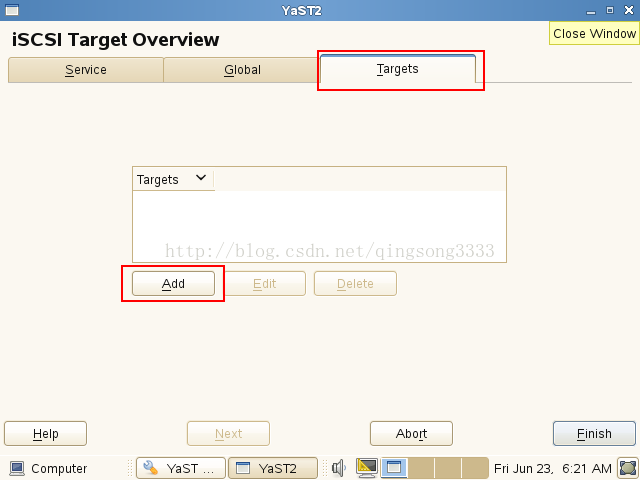

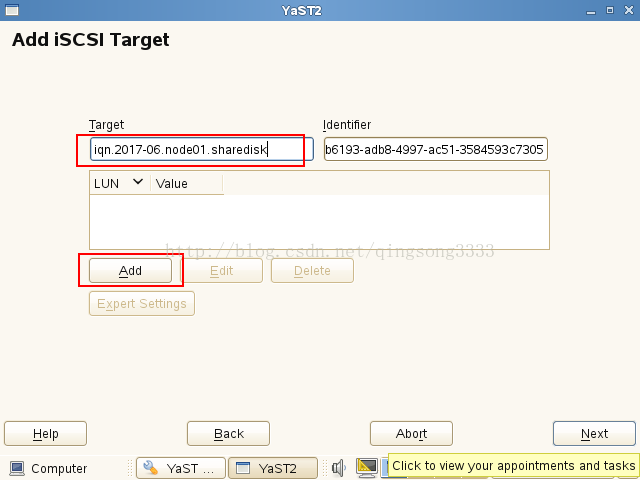

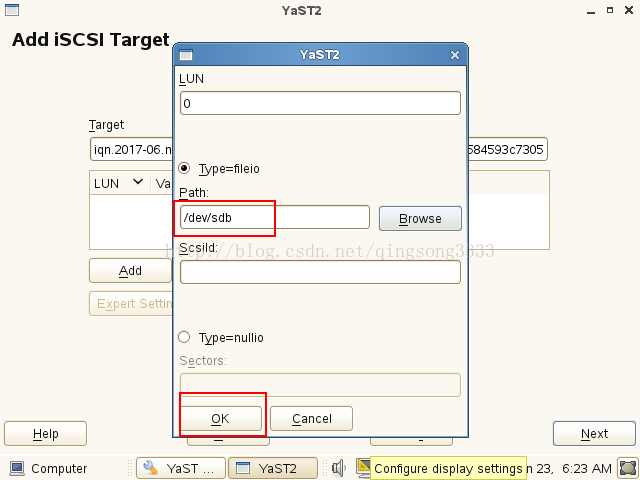

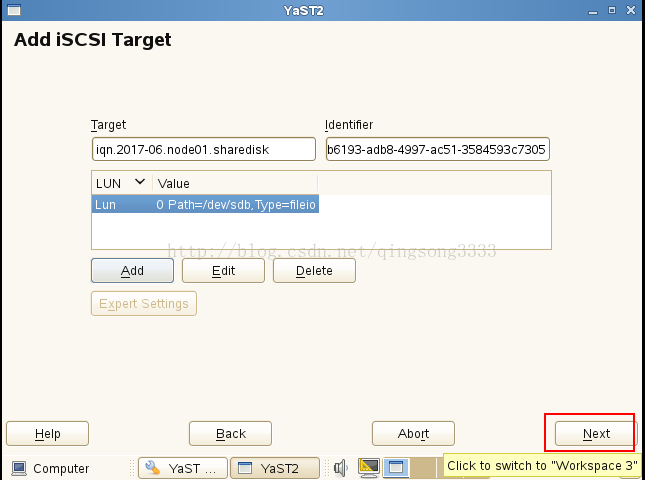

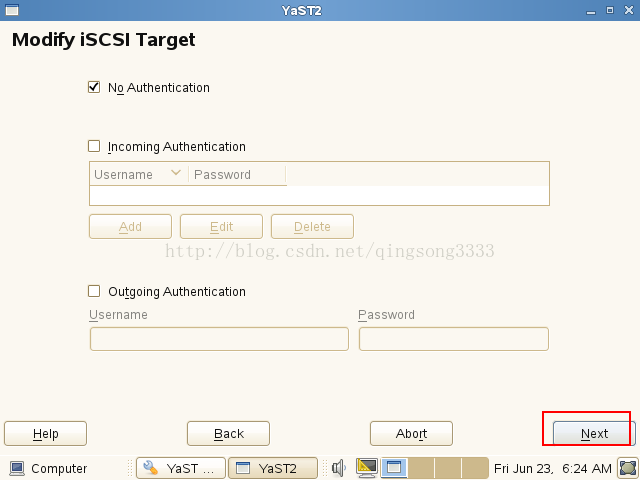

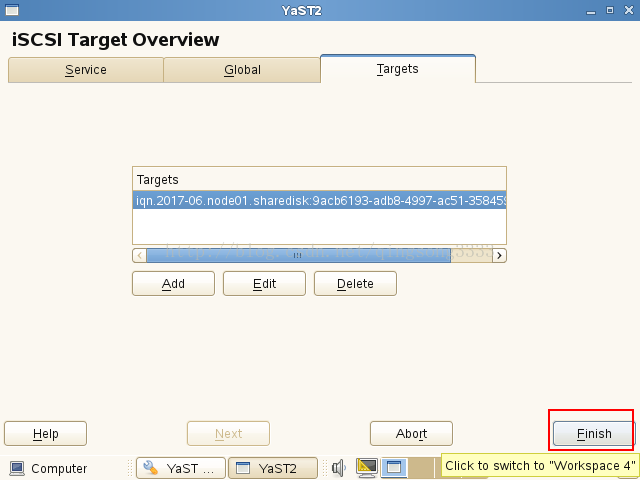

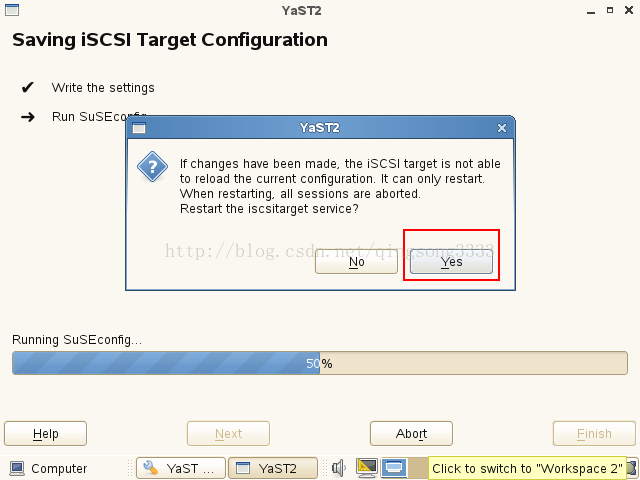

5.2 Configure the iSCSI target (on node01 only)

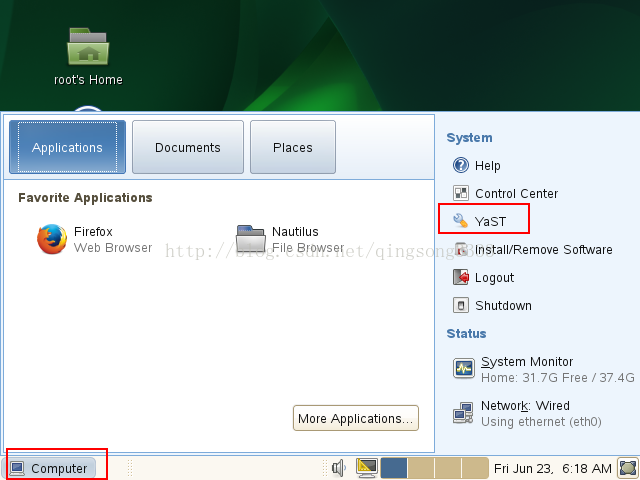

The goal is to make /dev/sdb, the disk just added to node01, the target. This has to be configured inside the virtual machine:

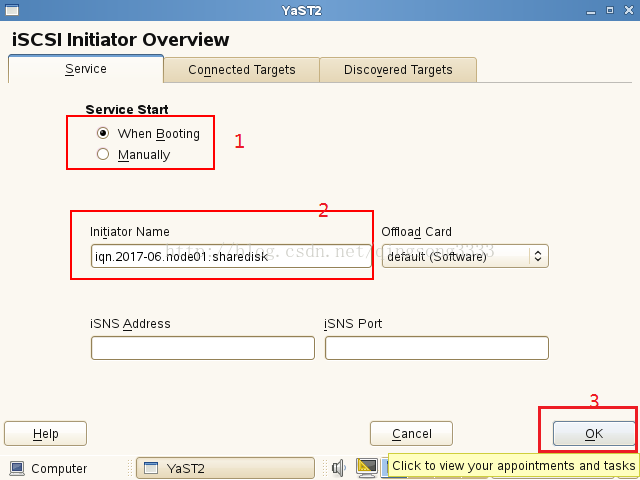

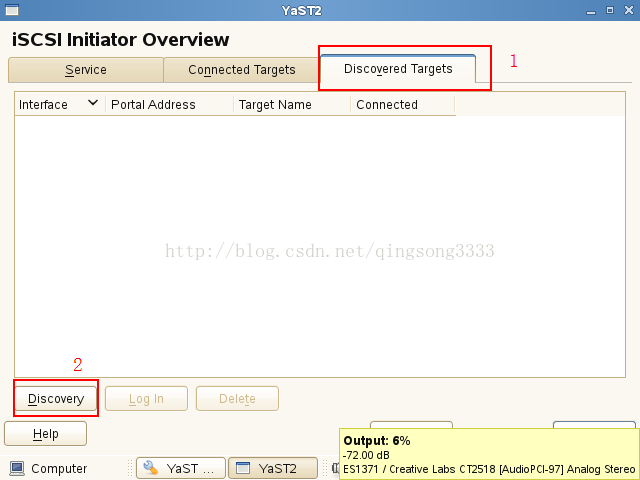

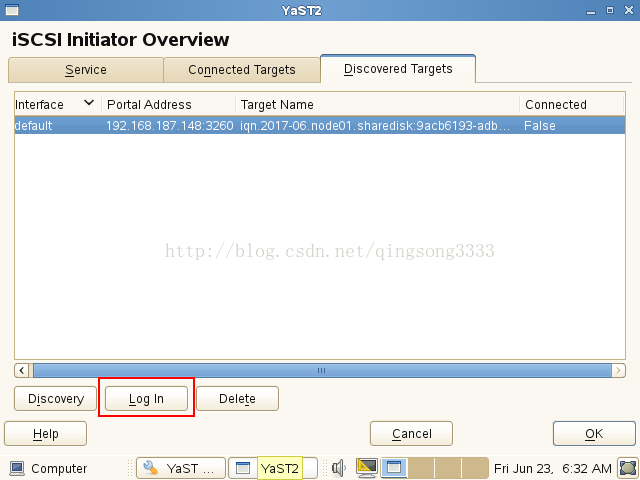

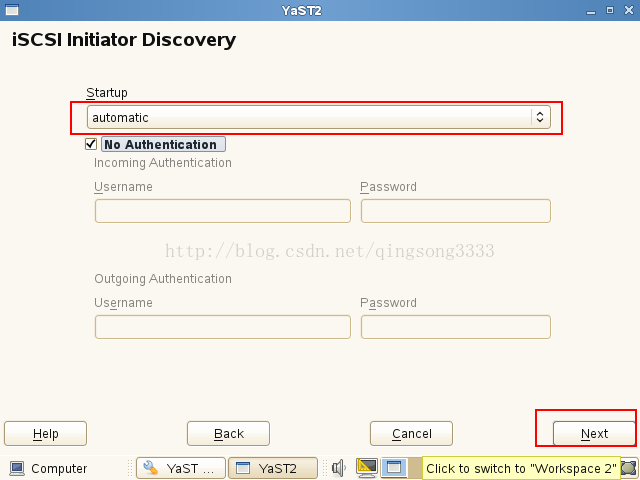

5.3 Configure the iSCSI initiator on node01:

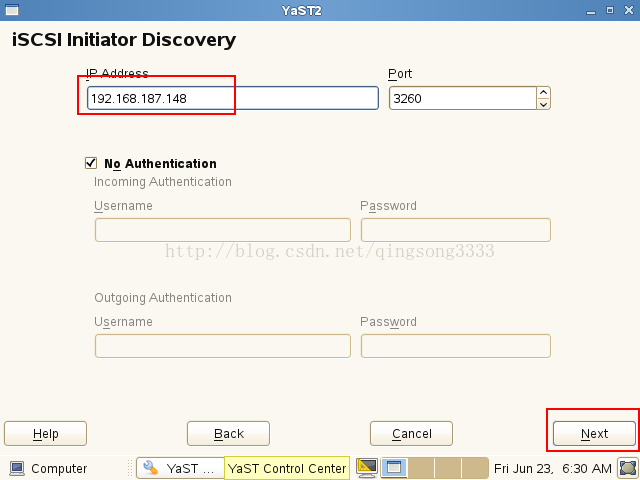

Enter node01's IP address

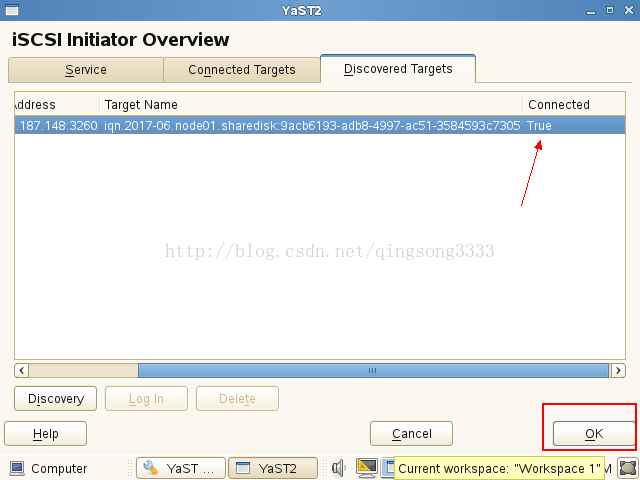

Check again: a new disk, /dev/sdc, has appeared.

node01:~ # fdisk -l

Disk /dev/sdb: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

Disk /dev/sda: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x000a4042

Device Boot Start End Blocks Id System

/dev/sda1 2048 4208639 2103296 82 Linux swap / Solaris

/dev/sda2 * 4208640 83886079 39838720 83 Linux

Disk /dev/sdc: 42.9 GB, 42949672960 bytes

64 heads, 32 sectors/track, 40960 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdc doesn't contain a valid partition table

5.4 Configure the iSCSI initiator on node02 in the same way

At the IP address step, again enter node01's IP address. After configuration, fdisk -l shows the following:

node02:~ # fdisk -l

Disk /dev/sda: 42.9 GB, 42949672960 bytes

255 heads, 63 sectors/track, 5221 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0007a70b

Device Boot Start End Blocks Id System

/dev/sda1 2048 4208639 2103296 82 Linux swap / Solaris

/dev/sda2 * 4208640 83886079 39838720 83 Linux

Disk /dev/sdb: 10 MB, 10485760 bytes

64 heads, 32 sectors/track, 10 cylinders, total 20480 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

Disk /dev/sdc: 42.9 GB, 42949672960 bytes

64 heads, 32 sectors/track, 40960 cylinders, total 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdc doesn't contain a valid partition table

node02:~ #

After this step, both machines have an extra disk, /dev/sdc; what they are actually sharing is /dev/sdb on node01.
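To compare the two long fdisk listings without reading them line by line, something like the following sketch could reduce each one to a "device bytes" summary. The function name list_disks is made up for this example, and the parsing assumes the "Disk /dev/...: <size>, <n> bytes" header format shown in the output above.

```shell
#!/bin/sh
# list_disks  (hypothetical helper)
# Read `fdisk -l` output on stdin and print "device size_in_bytes" per disk.
# Only header lines such as "Disk /dev/sdc: 42.9 GB, 42949672960 bytes"
# are used; "Disk /dev/sdb doesn't contain ..." lines have no colon after
# the device name and are skipped.
list_disks() {
    awk '/^Disk \/dev\/[^ ]+:/ {
        dev = $2
        sub(/:$/, "", dev)
        for (i = 3; i <= NF; i++)
            if ($i ~ /^bytes,?$/) { print dev, $(i - 1); break }
    }'
}

# Demo with a few lines copied from the node02 listing above.
list_disks <<'EOF'
Disk /dev/sdb: 10 MB, 10485760 bytes
Disk identifier: 0x00000000
Disk /dev/sdb doesn't contain a valid partition table
Disk /dev/sdc: 42.9 GB, 42949672960 bytes
EOF
```

On the real machines this would be run as `fdisk -l 2>/dev/null | list_disks` on each node; the /dev/sdc line should show the same byte count on both.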

6. Create the DB2 users and groups (do this on both machines)

# groupadd -g 999 db2iadm

# groupadd -g 998 db2fadm

# groupadd -g 997 dasadm

# useradd -u 1004 -g db2iadm -m -d /home/db2inst1 db2inst1

# useradd -u 1003 -g db2fadm -m -d /home/db2fend db2fend

# useradd -u 1002 -g dasadm -m -d /home/dasusr dasusr

# passwd db2inst1

# passwd db2fend

# passwd dasusr
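Because the same commands are typed on both machines, a typo in one -u or -g value is easy to miss. The sketch below is one way to confirm the numeric IDs afterwards; expect_id is a hypothetical helper name, and it relies only on the standard `id -u` and `id -g` options.

```shell
#!/bin/sh
# expect_id KIND NAME EXPECTED  (hypothetical helper)
# KIND is "uid" or "gid". Compares what `id` reports for user NAME
# with EXPECTED and prints an OK/FAIL line.
expect_id() {
    kind=$1 name=$2 expected=$3
    case $kind in
        uid) actual=$(id -u "$name" 2>/dev/null) ;;
        gid) actual=$(id -g "$name" 2>/dev/null) ;;
        *)   echo "unknown kind: $kind" >&2; return 2 ;;
    esac
    if [ "$actual" = "$expected" ]; then
        echo "OK   $name $kind=$actual"
    else
        echo "FAIL $name $kind=${actual:-missing} (expected $expected)"
        return 1
    fi
}

# Harmless demo: root is UID 0 on any Linux system.
expect_id uid root 0
```

With the values used in this article, the checks on each node would be `expect_id uid db2inst1 1004`, `expect_id gid db2inst1 999`, `expect_id uid db2fend 1003`, `expect_id gid db2fend 998`, `expect_id uid dasusr 1002` and `expect_id gid dasusr 997`.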

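7. Configure SSH trust (do this on both machines)

Both the root user and the db2inst1 user need this. For how to set up SSH trust, search online or see http://blog.csdn.net/qingsong3333/article/details/73695895

If it does not already exist, create a ".ssh" directory under the home directory.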

root:

# cd $HOME

# mkdir .ssh

# ssh-keygen

# cd .ssh

# touch authorized_keys

# cat id_rsa.pub >> authorized_keys

# chmod 600 authorized_keys

# su - db2inst1

$ mkdir .ssh

$ ssh-keygen

$ cd .ssh

$ touch authorized_keys

$ cat id_rsa.pub >> authorized_keys

$ chmod 600 authorized_keys

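Then append the contents of each machine's id_rsa.pub to the authorized_keys file of the corresponding user on the other machine (root to root, db2inst1 to db2inst1).

On both machines, test as root and as db2inst1. If none of the following commands asks for a password, the setup succeeded: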

# ssh node01 date

# ssh node02 date

# su - db2inst1

$ ssh node01 date

$ ssh node02 date
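The manual test above can hang at a password prompt if something is wrong. As a sketch of a non-interactive variant, the function below runs the same `date` command with ssh's BatchMode option, which makes ssh fail rather than prompt. The name check_ssh_trust and the SSH_CMD override are my own additions for illustration.

```shell
#!/bin/sh
# SSH_CMD can be overridden (for example with a stub) for a dry run.
SSH_CMD=${SSH_CMD:-ssh}

# check_ssh_trust HOST...  (hypothetical helper)
# Succeeds only if every HOST accepts a key-based login without prompting.
check_ssh_trust() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes: never ask for a password, just fail.
        # ConnectTimeout=5: do not wait long on an unreachable host.
        if "$SSH_CMD" -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Intended use, run once as root and once as db2inst1 on each machine:
#   check_ssh_trust node01 node02
```

Note that in batch mode ssh also fails when the host key has not been accepted yet, so one interactive login to each host beforehand is assumed.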

8. Install DB2 (do this on both machines)

You can upload the installation package to the virtual machine with FlashFXP:

# tar -zxvf v10.5fp8_linuxx64_server_t.tar.gz

# ./server_t/db2_install

DBI1324W Support of the db2_install command is deprecated.

Default directory for installation of products - /opt/ibm/db2/V10.5

***********************************************************

Install into default directory (/opt/ibm/db2/V10.5) ? [yes/no]

yes

Specify one of the following keywords to install DB2 products.

SERVER

CONSV

EXP

CLIENT

RTCL

Enter "help" to redisplay product names.

Enter "quit" to exit.

***********************************************************

SERVER

***********************************************************

Do you want to install the DB2 pureScale Feature? [yes/no]

yes

DB2 installation is being initialized.

Total number of tasks to be performed: 53

Total estimated time for all tasks to be performed: 2183 second(s)

Task #1 start

Description: Checking license agreement acceptance

Estimated time 1 second(s)

Task #1 end

.

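9. Configure GPFS (on node01 only)

You can do this with the db2cluster command, or with GPFS commands alone. (db2cluster simply calls the GPFS commands underneath.)

9.1 Create the GPFS cluster:

Create a file gpfs.nodes with the following contents:

# cat /tmp/gpfs.nodes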

node01:quorum-manager

node02:quorum-manager

# /usr/lpp/mmfs/bin/mmcrcluster -p node01 -s node02 -n /tmp/gpfs.nodes -r /usr/bin/ssh -R /usr/bin/scp
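If the node file is to be recreated often, for example when rebuilding the test cluster, it could be generated rather than typed. The following is a minimal sketch; write_gpfs_nodes is a hypothetical helper, and it simply reproduces the "name:quorum-manager" lines shown above.

```shell
#!/bin/sh
# write_gpfs_nodes FILE NODE...  (hypothetical helper)
# Overwrite FILE with one "NODE:quorum-manager" line per node,
# the same designation used for both nodes in this article.
write_gpfs_nodes() {
    file=$1
    shift
    : > "$file"                       # truncate or create the file
    for node in "$@"; do
        printf '%s:quorum-manager\n' "$node" >> "$file"
    done
}

# Demo on a scratch file; the article itself uses /tmp/gpfs.nodes.
demo=$(mktemp)
write_gpfs_nodes "$demo" node01 node02
cat "$demo"
rm -f "$demo"
```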

9.2 Add the license:

# /usr/lpp/mmfs/bin/mmchlicense server --accept -N node01,node02

9.3 Change the configuration parameters:

# /usr/lpp/mmfs/bin/mmchconfig maxFilesToCache=20000

# /usr/lpp/mmfs/bin/mmchconfig usePersistentReserve=yes

# /usr/lpp/mmfs/bin/mmchconfig verifyGpfsReady=yes

# /usr/lpp/mmfs/bin/mmchconfig totalPingTimeout=75

# /usr/lpp/mmfs/bin/mmlscluster

GPFS cluster information

========================

GPFS cluster name: node01

GPFS cluster id: 2620703579963216106

GPFS UID domain: node01

Remote shell command: /usr/bin/ssh

Remote file copy command: /usr/bin/scp

Repository type: CCR

Node Daemon node name IP address Admin node name Designation

-----------------------------------------------------------------------

1 node01 192.168.187.148 node01 quorum-manager

2 node02 192.168.187.149 node02 quorum-manager

9.4 Start the GPFS daemons

Start GPFS and check its state. After a short wait, a state of active means everything is fine:

# /usr/lpp/mmfs/bin/mmstartup -a

# /usr/lpp/mmfs/bin/mmgetstate -a
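Instead of rerunning mmgetstate by hand until both nodes turn active, the wait could be scripted roughly as follows. The helper names retry_until, all_nodes_active and gpfs_ready are invented for this sketch. The parser assumes that each node line of the `mmgetstate -a` output starts with the node number and ends with the state; that layout is not shown in this article, so treat it as an assumption to verify on your system.

```shell
#!/bin/sh
# retry_until TRIES DELAY CMD...  (hypothetical helper)
# Run CMD until it succeeds, at most TRIES times, sleeping DELAY
# seconds between attempts. Returns 1 if it never succeeded.
retry_until() {
    tries=$1 delay=$2
    shift 2
    n=1
    while [ "$n" -le "$tries" ]; do
        if "$@"; then
            return 0
        fi
        [ "$n" -lt "$tries" ] && sleep "$delay"
        n=$((n + 1))
    done
    return 1
}

# all_nodes_active  (hypothetical helper)
# Reads `mmgetstate -a` output on stdin. ASSUMED format: node lines
# begin with a node number and end with the GPFS state.
all_nodes_active() {
    awk '$1 ~ /^[0-9]+$/ { nodes++; if ($NF != "active") bad++ }
         END { exit !(nodes > 0 && bad == 0) }'
}

# gpfs_ready: one probe of the cluster state (defined here, not run).
gpfs_ready() {
    /usr/lpp/mmfs/bin/mmgetstate -a | all_nodes_active
}

# Intended use as root on node01: up to 30 probes, 10 seconds apart.
#   retry_until 30 10 gpfs_ready && echo "GPFS is active on all nodes"
```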

9.5 Create the GPFS file system

Create a file gpfs.disks with the following contents:

# cat /tmp/gpfs.disks

%nsd:

device=/dev/sdc

nsd=qsmiao

usage=dataAndMetadata

# /usr/lpp/mmfs/bin/mmcrnsd -F /tmp/gpfs.disks -v yes

# /usr/lpp/mmfs/bin/mmlsnsd

File system Disk name NSD servers

---------------------------------------------------------------------------

(free disk) qsmiao (directly attached)

# /usr/lpp/mmfs/bin/mmcrfs /gpfs20170623 gpfsdev qsmiao -B 1024K -m 1 -M 2 -r 1 -R 2

Here /gpfs20170623 is the name chosen for the mount directory, and gpfsdev is the name chosen for the GPFS device.

# cat /etc/fstab

# /usr/lpp/mmfs/bin/mmmount all -a

Thu Jun 22 06:50:14 EDT 2017: mmmount: Mounting file systems ...

9.6 The new file system is visible on both node01 and node02

node01:~ # df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 39213504 11834268 25387300 32% /

udev 1403056 132 1402924 1% /dev

tmpfs 1403056 808 1402248 1% /dev/shm

/dev/gpfsdev 41943040 478208 41464832 2% /gpfs20170623

node01:~ # echo "I'm writing to shared filesystem" > /gpfs20170623/hello.txt

node02:~ # df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sda2 39213504 11834016 25387552 32% /

udev 1403056 132 1402924 1% /dev

tmpfs 1403056 804 1402252 1% /dev/shm

/dev/gpfsdev 41943040 41943040 0 100% /gpfs20170623

node02:~ # cat /gpfs20170623/hello.txt

I'm writing to shared filesystem

At this point the GPFS shared file system is in place, and node01 and node02 can access it concurrently.

10. Create the instance (on node01 only)

# /opt/ibm/db2/V10.5/instance/db2icrt -cf node01 -cfnet node01 -m node01 -mnet node01 -instance_shared_dir /gpfs20170623 -tbdev 192.168.187.2 -u db2fend db2inst1

The command above creates one member and one CF on node01. The gateway address is sufficient for -tbdev; see the Q&A at the end for how to find it.

# su - db2inst1

$ db2set DB2_SD_ALLOW_SLOW_NETWORK=ON

$ db2licm -a db2aese_u.lic

$ db2start

06/23/2017 13:46:38 0 0 SQL1063N DB2START processing was successful.

SQL1063N DB2START processing was successful.

$ db2instance -list

ID TYPE STATE HOME_HOST CURRENT_HOST <..略..>

-- ---- ----- --------- ------------ <..略..>

0 MEMBER STARTED node01 node01 <..略..>

128 CF PRIMARY node01 node01 <..略..>

HOSTNAME STATE INSTANCE_STOPPED ALERT

-------- ----- ---------------- -----

node01 ACTIVE NO NO

11. Add another member (on node01 only)

# /opt/ibm/db2/V10.5/instance/db2iupdt -d -add -m node02 -mnet node02 db2inst1

12. Add another CF (on node01 only):

# su - db2inst1

$ db2stop

06/23/2017 14:10:27 1 0 SQL1032N No start database manager command was issued.

06/23/2017 14:10:55 0 0 SQL1064N DB2STOP processing was successful.

SQL6033W Stop command processing was attempted on "2" node(s). "1" node(s) were successfully stopped. "1" node(s) were already stopped. "0" node(s) could not be stopped.

$ su - root

# /opt/ibm/db2/V10.5/instance/db2iupdt -d -add -cf node02 -cfnet node02 db2inst1

13. Start the instance (on node01 only):

# su - db2inst1

$ db2start

06/23/2017 14:31:53 1 0 SQL1063N DB2START processing was successful.

06/23/2017 14:32:04 0 0 SQL1063N DB2START processing was successful.

SQL1063N DB2START processing was successful.

$ db2instance -list

ID TYPE STATE HOME_HOST CURRENT_HOST <..略..>

-- ---- ----- --------- ------------ <..略..>

0 MEMBER STARTED node01 node01 <..略..>

1 MEMBER STARTED node02 node02 <..略..>

128 CF PRIMARY node01 node01 <..略..>

129 CF PEER node02 node02 <..略..>

HOSTNAME STATE INSTANCE_STOPPED ALERT

-------- ----- ---------------- -----

node02 ACTIVE NO NO

node01 ACTIVE NO NO

14. Create the database (on node01 only)
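Before going further, the `db2instance -list` output from step 13 can also be checked by a script rather than by eye. The sketch below treats a member as healthy when its state is STARTED and a CF as healthy when its state is PRIMARY or PEER, which are the states shown in the output above. The function name instance_healthy is made up for this example.

```shell
#!/bin/sh
# instance_healthy  (hypothetical helper)
# Reads `db2instance -list` output on stdin. Succeeds when at least one
# MEMBER and one CF are listed, every MEMBER is STARTED, and every CF is
# PRIMARY or PEER. Header, separator and host-status lines are ignored
# because their second column is neither MEMBER nor CF.
instance_healthy() {
    awk '
        $2 == "MEMBER" { members++; if ($3 != "STARTED") bad++ }
        $2 == "CF"     { cfs++; if ($3 != "PRIMARY" && $3 != "PEER") bad++ }
        END { exit !(members > 0 && cfs > 0 && bad == 0) }
    '
}

# Demo with the rows from the listing in step 13.
if instance_healthy <<'EOF'
ID TYPE STATE HOME_HOST CURRENT_HOST
0 MEMBER STARTED node01 node01
1 MEMBER STARTED node02 node02
128 CF PRIMARY node01 node01
129 CF PEER node02 node02
EOF
then
    echo "instance looks healthy"
else
    echo "instance needs attention"
fi
```

As the instance user, the real check would be `db2instance -list | instance_healthy`.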

$ db2 "create db sample"

$ db2 "connect to sample"

$ db2 "create table t1(id int, address char(20))"

$ db2 "insert into t1 values(123, 'Beijing')"

15. Verify (on node02 only)

# su - db2inst1

$ db2 "connect to sample"

$ db2 "list applications global"

Auth Id Application Appl. Application Id DB # of

Name Handle Name Agents

-------- -------------- ---------- ----------------------------- -------- -----

DB2INST1 db2bp 75 *N0.db2inst1.170623184141 SAMPLE 1

DB2INST1 db2bp 65591 *N1.db2inst1.170623184447 SAMPLE 1

$ db2 "insert into t1 values(223,'NanJing')"

$ db2 "select * from t1"

ID ADDRESS

----------- --------------------

123 Beijing

223 NanJing

2 record(s) selected.

$ db2 "force applications all"

$ db2stop

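Q&A

1.) In a DPF environment the instance directory is shared, but a pureScale instance directory is not. You only created the instance on node01, so why does node02 also have its own instance and instance directory?

A: When the member or CF on node02 is added, the instance is created on node02 automatically; this happens in steps 11 and 12.

2.) How do I find the gateway?

A: Look at the routing table. The entry whose destination is 0.0.0.0 is the default gateway, here 192.168.187.2.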

# netstat -rn

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.187.2 0.0.0.0 UG 0 0 0 eth0

127.0.0.0 0.0.0.0 255.0.0.0 U 0 0 0 lo

169.254.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

192.168.187.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0
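To avoid copying the address by hand into the -tbdev option of db2icrt, the gateway could be pulled out of this routing table with a one-line awk filter. This is only a sketch; default_gateway is a name I made up, and it assumes the column layout shown in the netstat output above.

```shell
#!/bin/sh
# default_gateway  (hypothetical helper)
# Reads `netstat -rn` output on stdin and prints the gateway of the
# first route whose destination is 0.0.0.0 and whose flags include G.
default_gateway() {
    awk '$1 == "0.0.0.0" && $4 ~ /G/ { print $2; exit }'
}

# Demo with the routing table from this article.
default_gateway <<'EOF'
Kernel IP routing table
Destination Gateway Genmask Flags MSS Window irtt Iface
0.0.0.0 192.168.187.2 0.0.0.0 UG 0 0 0 eth0
127.0.0.0 0.0.0.0 255.0.0.0 U 0 0 0 lo
169.254.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0
192.168.187.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0
EOF
```

On the node itself this becomes `netstat -rn | default_gateway`, and the result can be stored in a shell variable for the db2icrt command line.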

3.) Why, in step 12, does db2stop report SQL1032N for one node and SQL1064N for the other?

A: The newly added member had not been started yet.
