1+X Cloud Computing Platform Operations and Development Certification (Intermediate), Sample Paper A: Hands-On Walkthrough

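Some of the choice questions may contain minor errors, so treat the marked answers as a reference only; the hands-on tasks are the main focus.

Single-choice questions (200 points)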

1. Which of the following is a source code version control tool? (10 points)

A. project

B. SVN (correct answer)

C. notepad++

D. Xshell

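2. Which of the following is not a stage in the project management process? (10 points)

A. Project initiation

B. Project development

C. Project testing

D. Project warranty (correct answer)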

3. Which fixed multicast address do VRRP protocol packets use? (10 points)

A. 127.0.0.1

B. 192.168.0.1

C. 169.254.254.254

D. 224.0.0.18 (correct answer)

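4. Each physical port has a transmission rate of 100 Mb/s. After two physical ports are aggregated into one logical port, what is the transmission rate of the aggregate port (AP)? (10 points)

A. 200 Mb/s (correct answer)

B. 100 Mb/s

C. 300 Mb/s

D. 50 Mb/s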

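5. Which of the following statements about DHCP servers is correct? (10 points)

A. A client can only accept IP addresses offered by a DHCP server on its own network segment

B. IP addresses that need to be reserved can be included in the DHCP server's address pool (correct answer)

C. A DHCP server cannot specify a DNS server for users

D. A DHCP server can assign the same IP address to two different users at the same time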

6. Which of the following is the correct command to create a database named test? (10 points)

A. mysql -uroot -p000000 create test

B. mysqladmin -uroot -p000000 create test (correct answer)

C. mysql -u root-p 000000 create test

D. mysqladmin -u root-p 000000 create test

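7. Which process do you communicate with when operating Nginx? (10 points)

A. Master process (correct answer)

B. Communication process

C. Network process

D. Worker process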

8. After receiving which signal does the Nginx master process reload its configuration? (10 points)

A. kill -HUP pid (correct answer)

B. start -HUP pid

C. stop -HUP pid

D. restart -HUP pid

9. Which of the following services provides the messaging service for the OpenStack platform? (10 points)

A. Keystone

B. Neutron

C. RabbitMQ (correct answer)

D. Nova

10. In which release did OpenStack officially ship Horizon? (10 points)

A. Cactus

B. Diablo

C. Essex (correct answer)

D. Folsom

11. Which of the following is the Neutron command for listing network service information? (10 points)

A. neutron agent-list (correct answer)

B. neutron network-show

C. neutron agent-show

D. neutron network-list

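12. Which of the following statements about Tencent Cloud pay-as-you-go billing is incorrect? (10 points)

A. You pay after use, which is more flexible than prepayment: you pay only for what you use, billing is accurate, and no resources are wasted.

B. Resources can be added or removed on demand at short notice, so purchases can be adjusted quickly to match business needs.

C. The unit price is lower than with prepayment. (correct answer)

D. When a large amount of resources is added temporarily, resources may be unavailable.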

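13. Which of the following statements about CDN is incorrect? (10 points)

A. Simple onboarding

B. Simple statistics

C. Complex configuration (correct answer)

D. Diverse management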

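14. VPN connections and Direct Connect are two ways to connect an enterprise data center to Tencent Cloud. Which of the following statements is incorrect? (10 points)

A. Direct Connect is more secure and more stable, with lower latency and higher bandwidth

B. VPN connections are simple to configure, cloud-side configuration takes effect in real time, and reliability is high

C. Direct Connect pricing consists of the physical line price and the dedicated tunnel price

D. VPN tunnels, customer gateways, and VPN gateways all have to be paid for (correct answer)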

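15. Which of the following statements about the advantages of Tencent Cloud's relational database CDB is incorrect? (10 points)

A. Dual-master backup mode is supported by default (correct answer)

B. Depending on the workload, users can choose pay-as-you-go billing with per-second granularity or the cheaper yearly/monthly subscription.

C. Several storage media options are available and can be chosen flexibly to suit the workload.

D. A database can go from deployment to access in just a few steps, with no need to prepare infrastructure in advance or to install and maintain database software.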

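16. Which of the following statements about Tencent Cloud pay-as-you-go billing is incorrect? (10 points)

A. You pay after use, which is more flexible than prepayment: you pay only for what you use, billing is accurate, and no resources are wasted.

B. Resources can be added or removed on demand at short notice, so purchases can be adjusted quickly to match business needs.

C. The unit price is lower than with prepayment. (correct answer)

D. When a large amount of resources is added temporarily, resources may be unavailable.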

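17. A user buys a 500 GB pay-as-you-go SSD cloud disk in the Shanghai region and releases it after 2 hours. Given that the SSD cloud disk unit price in Shanghai is 0.0033 CNY per GB per billing unit, how much does the user have to pay, in CNY? (10 points)

A. 3.3 (correct answer)

B. 198

C. 11880

D. 0.14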
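
The arithmetic behind the marked answer can be checked with a short sketch. It assumes that one billing unit equals one hour, which the question does not state explicitly; the function name `pay_as_you_go_cost` is made up for illustration.

```python
from decimal import Decimal


def pay_as_you_go_cost(size_gb: int, unit_price: Decimal, billing_units: int) -> Decimal:
    """Cost = disk size (GB) x unit price per GB per billing unit x number of units."""
    return size_gb * unit_price * billing_units


# Assumption: one billing unit is one hour, so 2 hours of use = 2 units.
cost = pay_as_you_go_cost(500, Decimal("0.0033"), 2)
print(cost)
```

Decimal is used instead of float so that the product is exact and can be compared directly with the value in the answer options.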

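18. Which acceleration services does Tencent Cloud CDN provide, using a high-performance caching system to reduce access latency and improve resource availability? (10 points)

A. Static content acceleration

B. Download distribution acceleration

C. Audio and video on-demand acceleration

D. All of the above (correct answer)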

19. Which of the following is the smallest object that Kubernetes can operate on? (10 points)

A. container

B. Pod (correct answer)

C. image

D. volume

20. Which of the following is not a type of shell? (10 points)

A. Bourne Shell

B. Bourne-Again Shell

C. Korn Shell

D. J2SE (correct answer)

Multiple-choice questions (200 points)

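1. What are the common project development models? (10 points)

A. Rapid prototyping model

B. Incremental model

C. Waterfall model (correct answer)

D. Agile development model (correct answer)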

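2. Which of the following statements about project initiation and kickoff are correct? (10 points)

A. In the kickoff stage, the project's goals, plan, and tasks are fully defined and described, producing a complete project work statement (correct answer)

B. The kickoff meeting is the key point for communicating the project's importance; consensus must be reached on the project goals, go-live criteria, management authority, and the list of project stakeholders (correct answer)

C. A clearly identified owner must be assigned in the kickoff stage (correct answer)

D. The initiation and kickoff process needs to define the tasks for the development and testing stages (correct answer)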

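3. What are the advantages of the VRRP protocol? (10 points)

A. Low fault tolerance

B. Strong adaptability (correct answer)

C. Low network overhead (correct answer)

D. Simplified network management (correct answer)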

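4. Which states does the VRRP protocol define? (10 points)

A. Active state (correct answer)

B. Forwarding state

C. Backup state (correct answer)

D. Initial state (correct answer)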

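5. On a Linux system, which of the following statements about hard links are correct? (10 points)

A. They can span file systems

B. They cannot span file systems (correct answer)

C. A new inode is created for the link file

D. The link file has the same inode as the file it links to (correct answer)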
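
The inode claim in option D can be observed directly. The following is a minimal sketch, assuming a Linux file system that supports hard links; the file names are arbitrary and the helper `hard_link_shares_inode` is made up for illustration.

```python
import os
import tempfile


def hard_link_shares_inode() -> bool:
    """Create a file and a hard link to it, then compare their inode numbers."""
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "original.txt")
        link = os.path.join(tmp, "hardlink.txt")
        with open(original, "w") as handle:
            handle.write("demo\n")
        os.link(original, link)  # same effect as `ln original.txt hardlink.txt`
        same_inode = os.stat(original).st_ino == os.stat(link).st_ino
        two_names = os.stat(original).st_nlink == 2  # link count rises to 2
        return same_inode and two_names


print(hard_link_shares_inode())
```

Because both names point at one inode, which only has meaning inside a single file system, a hard link cannot cross file system boundaries.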

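6. Delete all files in the current directory whose names end in .c, such as a.c and b.c. (10 points)

A. rm *.c (correct answer)

B. find . -name "*.c" -maxdepth 1 | xargs rm (correct answer)

C. find . -name "*.c" | xargs rm

D. None of the above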

7. Which of the following are the main roles in zookeeper? (10 points)

A. Leader (correct answer)

B. Learner (correct answer)

C. Client (correct answer)

D. Server

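8. What are the use cases for Kafka? (10 points)

A. Log collection (correct answer)

B. Messaging system (correct answer)

C. Operational metrics (correct answer)

D. Stream processing (correct answer)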

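9. When defining the requirements for a bank's container platform, which aspects should be considered? (10 points)

A. The ability to manage large-scale container clusters (correct answer)

B. To meet the regulatory and security requirements of financial services, the platform must provide application high availability and business continuity, multi-tenant security isolation, and isolation between business tiers (correct answer)

C. If the container platform is also exposed to the public network, encrypted access links and security certificates must be considered as well (correct answer)

D. Firewall policies, security vulnerability scanning, image security, 4A management of back-end operations, and audit logs (correct answer)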

10. Which of the following are services of the nova component? (10 points)

A. nova-api (correct answer)

B. nova-scheduler (correct answer)

C. nova-novncproxy (correct answer)

D. nova-controller

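11. Which back-end stores can the Glance service use? (10 points)

A. Simple file system (correct answer)

B. Swift (correct answer)

C. Ceph (correct answer)

D. S3 cloud storage (correct answer)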

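12. Which data collection methods does Ceilometer use? (10 points)

A. Triggered collection (correct answer)

B. Spontaneous collection

C. Cyclic collection

D. Polling collection (correct answer)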

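13. What are the advantages of deploying Tencent Cloud servers across multiple regions and availability zones? (10 points)

A. Users can choose the nearest region, which reduces latency and improves speed (correct answer)

B. Faults are isolated between availability zones and do not spread (correct answer)

C. Business continuity is ensured (correct answer)

D. High availability is ensured (correct answer)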

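14. Which image types are available for cloud servers? (10 points)

A. Public images (correct answer)

B. Custom images (correct answer)

C. Marketplace images (correct answer)

D. Personal images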

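15. Which of the following statements about Auto Scaling are correct? (10 points)

A. Auto Scaling can automatically adjust CVM compute resources according to your business needs and policies (correct answer)

B. Auto Scaling is billed according to the resources used by the cloud servers (correct answer)

C. Auto Scaling is billed annually

D. None of the above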

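16. Which of the following statements about Tencent Cloud load balancing are correct? (10 points)

A. The public network application type supports layer-7 and layer-4 forwarding (correct answer)

B. The private network application type does not support layer-4 forwarding

C. The public network classic type supports layer-7 and layer-4 forwarding (correct answer)

D. The private network classic type does not support layer-7 forwarding (correct answer)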

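17. Which of the following statements about container orchestration are incorrect? (10 points)

A. Container orchestration is the process of organizing the work of individual components and application layers

B. Applications typically consist of individually containerized components (often called microservices)

C. The process of organizing a single container is called container orchestration (correct answer)

D. Container orchestration tools only let users direct container deployment and automatic updates (correct answer)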

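18. Which of the following statements about scaling in an OpenShift cluster are correct? (10 points)

A. Scaling in means reducing the cluster's network resources

B. When scaling in, the cluster administrator must make sure that no new containers are created on the compute nodes being removed (correct answer)

C. When scaling in, the containers currently running on the compute nodes being removed must be able to migrate to other compute nodes (correct answer)

D. A typical scale-in process consists of disabling scheduling, evacuating the node's containers, and removing the compute node (correct answer)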

19. Which of the following projects are IaaS platforms? (10 points)

A. ERP

B. Openstack (correct answer)

C. Cloudstack (correct answer)

D. CRM

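20. What are the advantages of using the third-party requests library in Python? (10 points)

A. Supports keeping sessions alive with cookies (correct answer)

B. Supports file uploads (correct answer)

C. Supports automatic detection of the response content encoding (correct answer)

D. User-friendly (correct answer)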

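Hands-on tasks (600 points)

1. Router management (40 points)

Configure routers R1 and R2 (router model R2220). On R1, configure port g0/0/1 with the address 192.168.1.1/30; this port connects to R2. Configure port g0/0/2 with the address 192.168.2.1/24 as the gateway address for the internal host PC1. On R2, configure port g0/0/1 with the address 192.168.1.2/30; this port connects to R1. Configure port g0/0/2 with the address 192.168.3.1/24 as the gateway address for the internal host PC2. Enable the OSPF dynamic routing protocol on R1 and R2 so that routes are learned automatically and PC1 and PC2 can reach each other. (Use full commands for all configuration.) Submit all of the commands above and their output as text in the answer box.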

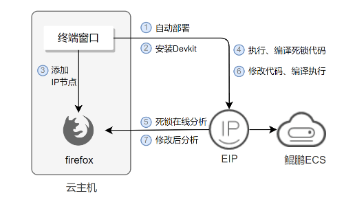

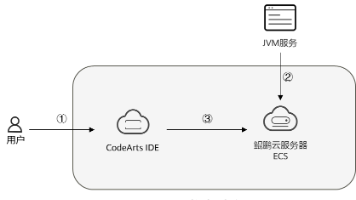

Topology diagram (in the exam it is enough to connect the two routers; the full deployment is used here for practice):

R1 configuration:

<Huawei>system-view

[Huawei]sysname R1

[R1]interface GigabitEthernet 0/0/1

[R1-GigabitEthernet0/0/1]ip address 192.168.1.1 30

[R1-GigabitEthernet0/0/1]quit

[R1]interface GigabitEthernet 0/0/2

[R1-GigabitEthernet0/0/2]ip address 192.168.2.1 24

[R1-GigabitEthernet0/0/2]quit

[R1]ospf 1

[R1-ospf-1]area 0

[R1-ospf-1-area-0.0.0.0]network 192.168.1.0 0.0.0.3

[R1-ospf-1-area-0.0.0.0]network 192.168.2.0 0.0.0.255

R2 configuration:

<Huawei>system-view

[Huawei]sysname R2

[R2]interface GigabitEthernet 0/0/1

[R2-GigabitEthernet0/0/1]ip address 192.168.1.2 30

[R2-GigabitEthernet0/0/1]quit

[R2]interface GigabitEthernet 0/0/2

[R2-GigabitEthernet0/0/2]ip address 192.168.3.1 24

[R2-GigabitEthernet0/0/2]quit

[R2]ospf 1

[R2-ospf-1]area 0

[R2-ospf-1-area-0.0.0.0]network 192.168.1.0 0.0.0.3

[R2-ospf-1-area-0.0.0.0]network 192.168.3.0 0.0.0.255
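
The OSPF `network` statements above take a network address and a wildcard mask (the inverse of the subnet mask) rather than a prefix length. As a minimal sketch of how the values follow from the interface addresses in the task, using Python's standard `ipaddress` module (the helper name `ospf_network_statement` is made up for illustration):

```python
import ipaddress


def ospf_network_statement(interface_cidr: str) -> str:
    """Derive the network address and wildcard mask from an interface address."""
    network = ipaddress.ip_interface(interface_cidr).network
    # hostmask is the bitwise inverse of the netmask, i.e. the wildcard mask
    return f"network {network.network_address} {network.hostmask}"


for cidr in ("192.168.1.1/30", "192.168.2.1/24", "192.168.3.1/24"):
    print(ospf_network_statement(cidr))
```

A /30 leaves two host bits, so its wildcard mask is 0.0.0.3; a /24 leaves eight, giving 0.0.0.255.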

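2. Wireless AC management (40 points)

Configure the wireless access controller (AC, model AC6005): enable DHCP, set the VLAN 20 gateway address to 172.16.20.1/24, configure the interface address pool on the VLAN 20 interface, and have DHCP hand out the DNS servers 114.114.114.114 and 223.5.5.5. Submit all of the commands above and their output as text in the answer box.

Topology diagram (in the exam only the commands need to be correct and a single AC is enough; the full deployment is used here for practice). The diagram above shows the topology after configuration.

AC configuration: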

<AC6005>system-view

[AC6005]vlan batch 10

[AC6005]vlan batch 20

[AC6005]dhcp enable

[AC6005]interface vlanif 20

[AC6005-Vlanif20]description USER

[AC6005-Vlanif20]ip address 172.16.20.1 24

[AC6005-Vlanif20]dhcp select interface

[AC6005-Vlanif20]dhcp server dns-list 114.114.114.114 223.5.5.5

[AC6005-Vlanif20]quit

[AC6005]interface Vlanif 10

[AC6005-Vlanif10]description AP_Manage

[AC6005-Vlanif10]ip address 192.168.10.254 24

[AC6005-Vlanif10]dhcp select interface

[AC6005-Vlanif10]quit

[AC6005]capwap source interface Vlanif 10

[AC6005]wlan

[AC6005-wlan-view]regulatory-domain-profile name office-domain

[AC6005-wlan-regulate-domain-office-domain]country-code CN

[AC6005-wlan-regulate-domain-office-domain]quit

[AC6005-wlan-view]ssid-profile name office-ssid

[AC6005-wlan-ssid-prof-office-ssid]ssid office

[AC6005-wlan-ssid-prof-office-ssid]quit

[AC6005-wlan-view]security-profile name office-security

[AC6005-wlan-sec-prof-office-security]security wpa-wpa2 psk pass-phrase 123456789 aes

[AC6005-wlan-sec-prof-office-security]quit

[AC6005-wlan-view]vap-profile name office-vap

[AC6005-wlan-vap-prof-office-vap]forward-mode direct-forward

[AC6005-wlan-vap-prof-office-vap]security-profile office-security

[AC6005-wlan-vap-prof-office-vap]ssid-profile office-ssid

[AC6005-wlan-vap-prof-office-vap]service-vlan vlan-id 20

[AC6005-wlan-vap-prof-office-vap]quit

[AC6005-wlan-view]ap-group name office-ap-group

[AC6005-wlan-ap-group-office-ap-group]regulatory-domain-profile office-domain

[AC6005-wlan-ap-group-office-ap-group]vap-profile office-vap wlan 1 radio 0

[AC6005-wlan-ap-group-office-ap-group]vap-profile office-vap wlan 1 radio 1

[AC6005-wlan-ap-group-office-ap-group]quit

Get the AP's MAC address (click Settings on the AP):

[AC6005-wlan-view]ap-id 0 ap-mac 00E0-FC99-4730

[AC6005-wlan-ap-0]ap-group office-ap-group

[AC6005-wlan-ap-0]quit

[AC6005-wlan-view]quit

[AC6005]interface GigabitEthernet 0/0/1

[AC6005-GigabitEthernet0/0/1]port link-type trunk

[AC6005-GigabitEthernet0/0/1]port trunk allow-pass vlan 10 20

[AC6005-GigabitEthernet0/0/1]quit

SW1 configuration:

<Huawei>system-view

[Huawei]sysname SW1

[SW1]vlan batch 10 20

[SW1]interface GigabitEthernet 0/0/24

[SW1-GigabitEthernet0/0/24]description to_AC

[SW1-GigabitEthernet0/0/24]port link-type trunk

[SW1-GigabitEthernet0/0/24]port trunk allow-pass vlan 10 20

[SW1-GigabitEthernet0/0/24]quit

[SW1]interface GigabitEthernet 0/0/1

[SW1-GigabitEthernet0/0/1]description to_AP

[SW1-GigabitEthernet0/0/1]port link-type trunk

[SW1-GigabitEthernet0/0/1]port trunk pvid vlan 10

[SW1-GigabitEthernet0/0/1]port trunk allow-pass vlan 10 20

[SW1-GigabitEthernet0/0/1]quit

Result (check the IP address on any host connected to the Wi-Fi):

The host has obtained an address.

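3. YUM repository management (40 points)

Suppose there is an image file named CentOS-7-x86_64-DVD-1511.iso. Use it to configure a local yum repository, with the image mounted on the /opt/centos directory. How should the local.repo file be written so that the packages in the image can be used to install software? Submit the contents of local.repo as text in the answer box.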

[root@xiandian ~]# yum install -y vsftpd

# Note: add the line anon_root=/opt to the configuration file

[root@xiandian ~]# vim /etc/vsftpd/vsftpd.conf

anon_root=/opt

[root@xiandian ~]# systemctl restart vsftpd

[root@xiandian ~]# systemctl enable vsftpd

Created symlink from /etc/systemd/system/multi-user.target.wants/vsftpd.service to /usr/lib/systemd/system/vsftpd.service.

[root@xiandian ~]# systemctl stop firewalld

[root@xiandian ~]# systemctl disable firewalld

# Note: for SELinux, set the mode by changing SELINUX=enforcing to SELINUX=Permissive (takes effect only after a reboot):

[root@xiandian ~]# vim /etc/selinux/config

SELINUX=Permissive

# Note: switch to permissive mode temporarily (no reboot needed):

[root@xiandian ~]# setenforce 0

[root@xiandian ~]# getenforce

Permissive

[root@xiandian ~]# mkdir /opt/centos

[root@xiandian ~]# mount /opt/CentOS-7-x86_64-DVD-1511.iso /opt/centos/

mount: /dev/loop1 is write-protected, mounting read-only

[root@xiandian ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda3 xfs 246G 8.0G 238G 4% /

devtmpfs devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs tmpfs 3.9G 8.5M 3.9G 1% /run

tmpfs tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/sda1 xfs 509M 119M 391M 24% /boot

tmpfs tmpfs 781M 0 781M 0% /run/user/0

/dev/loop0 iso9660 4.1G 4.1G 0 100% /mnt

/dev/loop1 iso9660 4.1G 4.1G 0 100% /opt/centos

[root@xiandian ~]# cat /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

gpgcheck=0

enabled=1
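
A `.repo` file is an INI-style file, so its content can be generated and checked programmatically. The following is only a sketch using Python's standard `configparser`; the helper name `render_local_repo` is hypothetical, and the repo id and mount point are the ones used in this task.

```python
import configparser
import io


def render_local_repo(repo_id: str, mount_point: str) -> str:
    """Render a single-section yum repo file pointing at a locally mounted ISO."""
    parser = configparser.ConfigParser()
    parser[repo_id] = {
        "name": repo_id,
        "baseurl": f"file://{mount_point}",  # /opt/centos -> file:///opt/centos
        "gpgcheck": "0",  # skip GPG signature checks for the local media
        "enabled": "1",
    }
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


print(render_local_repo("centos", "/opt/centos"))
```

Note the three slashes in `file:///opt/centos`: two belong to the `file://` scheme and the third starts the absolute path.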

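4. RAID storage management (40 points)

Use the provided virtual machine, which has a 20 GB disk /dev/vdb. Partition the disk with the fdisk command into three 5 GB partitions. Use these three partitions to create a RAID 5 array named /dev/md5. Then format the array with the xfs file system and mount it on the /mnt directory. Submit the mdadm -D /dev/md5 command and its output, and the df -h command and its output, as text in the answer box.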

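- The disk in my lab environment shows up as sdb, so the commands below use sdb; in the exam environment it is vdb (the name depends on the virtual disk controller, not on the CentOS version):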

[root@xiandian ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xb7634785.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1): 1

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039): +5G

Partition 1 of type Linux and of size 5 GiB is set

Command (m for help): n

Partition type:

p primary (1 primary, 0 extended, 3 free)

e extended

Select (default p): p

Partition number (2-4, default 2): 2

First sector (10487808-41943039, default 10487808):

Using default value 10487808

Last sector, +sectors or +size{K,M,G} (10487808-41943039, default 41943039): +5G

Partition 2 of type Linux and of size 5 GiB is set

Command (m for help): n

Partition type:

p primary (2 primary, 0 extended, 2 free)

e extended

Select (default p): p

Partition number (3,4, default 3): 3

First sector (20973568-41943039, default 20973568):

Using default value 20973568

Last sector, +sectors or +size{K,M,G} (20973568-41943039, default 41943039): +5G

Partition 3 of type Linux and of size 5 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@xiandian ~]# yum install -y mdadm

[root@xiandian ~]# mdadm -Cv /dev/md5 -l5 -n3 /dev/sdb[1-3]

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 5237760K

mdadm: Fail create md5 when using /sys/module/md_mod/parameters/new_array

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

[root@xiandian ~]# mkfs.xfs /dev/md5

meta-data=/dev/md5 isize=256 agcount=16, agsize=163712 blks

= sectsz=512 attr=2, projid32bit=1

= crc=0 finobt=0

data = bsize=4096 blocks=2618880, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=0

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@xiandian ~]# mount /dev/md5 /mnt/

[root@xiandian ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/sda3 xfs 246G 8.6G 237G 4% /

devtmpfs devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs tmpfs 3.9G 8.6M 3.9G 1% /run

tmpfs tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/sda1 xfs 509M 119M 391M 24% /boot

tmpfs tmpfs 781M 0 781M 0% /run/user/0

/dev/loop0 iso9660 4.1G 4.1G 0 100% /opt/centos

/dev/md5 xfs 10G 33M 10G 1% /mnt

[root@xiandian ~]# mdadm -D /dev/md5

/dev/md0:

Version : 1.2

Creation Time : Wed Oct 23 17:08:07 2019

Raid Level : raid5

Array Size : 5238784 (5.00 GiB 5.36 GB)

Used Dev Size : 5238784 (5.00 GiB 5.36 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Wed Oct 23 17:13:37 2019

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Name : xiandian:0 (local to host xiandian)

UUID : 71123d35:b354bc98:2e36589d:f0ed3491

Events : 17

Number Major Minor RaidDevice State

0 253 17 0 active sync /dev/vdb1

1 253 18 1 active sync /dev/vdb2

2 253 19 2 active sync /dev/vdb3

[root@xiandian ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vda1 41G 2.4G 39G 6% /

devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs 3.9G 4.0K 3.9G 1% /dev/shm

tmpfs 3.9G 17M 3.9G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/loop0 2.8G 33M 2.8G 2% /swift/node

tmpfs 799M 0 799M 0% /run/user/0

/dev/md5 10.0G 33M 10.0G 1% /mnt
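
The roughly 10G that df reports for /dev/md5 follows from how RAID 5 works: one member's worth of space is used for parity, so the usable capacity is (n - 1) times the member size. A minimal sketch of that arithmetic (the function name `raid5_usable_gib` is made up for illustration):

```python
def raid5_usable_gib(member_count: int, member_size_gib: float) -> float:
    """Usable capacity of a RAID 5 array: one member's worth of space holds parity."""
    if member_count < 3:
        raise ValueError("RAID 5 needs at least three member devices")
    return (member_count - 1) * member_size_gib


# Three 5 GiB partitions, as created with fdisk above.
print(raid5_usable_gib(3, 5))
```

File system metadata takes a little more off the top, which is why the figure shown by df is approximate.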

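5. Master-slave database management (40 points)

Using the two provided virtual machines, install the mariadb database on each and configure them as master and slave so that the two databases replicate. When the configuration is complete, run "show slave status \G" in the database on the slave node to query its replication status, and submit the result as text in the answer box.

- Upload gpmall-repo, which contains the mariadb packages, to the /root directory: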

Mysql1:

[root@xiandian ~]# hostnamectl set-hostname mysql1

[root@mysql1 ~]# login

[root@mysql1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.111 mysql1

192.168.1.112 mysql2

[root@mysql1 ~]# systemctl stop firewalld

[root@mysql1 ~]# systemctl disable firewalld

[root@mysql1 ~]# setenforce 0

[root@mysql1 ~]# vim /etc/selinux/config

SELINUX=Permissive

[root@mysql1 ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[mariadb]

name=mariadb

baseurl=file:///root/gpmall-repo

enabled=1

gpgcheck=0

[root@mysql1 ~]# yum install -y mariadb mariadb-server

[root@mysql1 ~]# systemctl restart mariadb

[root@mysql1 ~]# mysql_secure_installation

[root@mysql1 ~]# vim /etc/my.cnf

# Note: add the following under [mysqld]:

log_bin = mysql-bin

binlog_ignore_db = mysql

server_id = 10

[root@mysql1 ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 3

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> grant all privileges on *.* to 'root'@'%' identified by '000000';

Query OK, 0 rows affected (0.00 sec)

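# Note: if you do not want to configure the hosts file above, you can use the IP address directly instead of the hostname mysql2. The user name is arbitrary; it is only used for the replication connection.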

MariaDB [(none)]> grant replication slave on *.* to 'user'@'mysql2' identified by '000000';

Query OK, 0 rows affected (0.00 sec)

Mysql2:

[root@xiandian ~]# hostnamectl set-hostname mysql2

[root@mysql2 ~]# login

[root@mysql2 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.111 mysql1

192.168.1.112 mysql2

[root@mysql2 ~]# systemctl stop firewalld

[root@mysql2 ~]# systemctl disable firewalld

[root@mysql2 ~]# setenforce 0

[root@mysql2 ~]# vim /etc/selinux/config

SELINUX=Permissive

[root@mysql2 ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[mariadb]

name=mariadb

baseurl=file:///root/gpmall-repo

enabled=1

gpgcheck=0

[root@mysql2 ~]# yum install -y mariadb mariadb-server

[root@mysql2 ~]# systemctl restart mariadb

[root@mysql2 ~]# mysql_secure_installation

[root@mysql2 ~]# vim /etc/my.cnf

# Note: add the following under [mysqld]:

log_bin = mysql-bin

binlog_ignore_db = mysql

server_id = 20

[root@mysql2 ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 3

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

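# Note: if you do not want to configure the hosts file above, you can use the IP address directly instead of the hostname mysql1. The user and password here must match the user created on mysql1.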

MariaDB [(none)]> change master to master_host='mysql1',master_user='user',master_password='000000';

Query OK, 0 rows affected (0.02 sec)

MariaDB [(none)]> start slave;

MariaDB [(none)]> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql1

Master_User: user

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000003

Read_Master_Log_Pos: 245

Relay_Log_File: mariadb-relay-bin.000005

Relay_Log_Pos: 529

Relay_Master_Log_File: mysql-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 245

Relay_Log_Space: 1256

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 30

1 row in set (0.00 sec)

Verify the result (are master and slave in sync?):

Mysql1:

[root@mysql1 ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 26

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> create database test;

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> use test;

Database changed

MariaDB [test]> create table demotables(id int not null primary key,name varchar(10),addr varchar(20));

Query OK, 0 rows affected (0.01 sec)

MariaDB [test]> insert into demotables values(1,'zhangsan','lztd');

Query OK, 0 rows affected (0.00 sec)

MariaDB [test]> select * from demotables;

+----+----------+------+

| id | name | addr |

+----+----------+------+

| 1 | zhangsan | lztd |

+----+----------+------+

1 rows in set (0.00 sec)

Mysql2:

[root@mysql2 ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 24

Server version: 5.5.65-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| test |

+--------------------+

4 rows in set (0.00 sec)

MariaDB [(none)]> use test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [test]> show tables;

+----------------+

| Tables_in_test |

+----------------+

| demotables |

+----------------+

1 row in set (0.00 sec)

MariaDB [test]> select * from demotables;

+----+----------+------+

| id | name | addr |

+----+----------+------+

| 1 | zhangsan | lztd |

+----+----------+------+

1 rows in set (0.00 sec)
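
Besides comparing data on both nodes, the two fields that matter most in the `show slave status\G` output are `Slave_IO_Running` and `Slave_SQL_Running`; both must read `Yes`. The sketch below parses the text output and checks them. The function names are made up for illustration, and `SAMPLE` is an abridged copy of the output shown earlier.

```python
def parse_slave_status(text: str) -> dict:
    """Turn `Key: value` lines from `show slave status\\G` into a dictionary."""
    status = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skips the "*** 1. row ***" separator and blank lines
        key, _, value = line.partition(":")
        status[key.strip()] = value.strip()
    return status


def replication_healthy(status: dict) -> bool:
    """Both the I/O thread and the SQL thread must be running."""
    return (status.get("Slave_IO_Running") == "Yes"
            and status.get("Slave_SQL_Running") == "Yes")


SAMPLE = """\
*************************** 1. row ***************************
Slave_IO_State: Waiting for master to send event
Master_Host: mysql1
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Seconds_Behind_Master: 0
"""

print(replication_healthy(parse_slave_status(SAMPLE)))
```

If the I/O thread is stuck in a state such as `Connecting`, the usual suspects are the firewall, the hosts entries, or the replication user's credentials.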

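6. Shopping mall application (40 points)

Using the provided packages and virtual machine, deploy the application system on a single node. After deployment, log in (in the order, enter your school's address as the shipping address and your real contact details as the recipient). Finally, use the curl command to fetch the mall home page, and submit the output of curl http://<your mall IP>/#/home as text in the answer box.

gpmall is an open-source project; for a closer look, visit https://gitee.com/mic112/gpmall

- Upload the required zookeeper, kafka, and gpmall-repo packages to the mall virtual machine: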

[root@mall ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.111 mall

192.168.1.111 kafka.mall

192.168.1.111 redis.mall

192.168.1.111 mysql.mall

192.168.1.111 zookeeper.mall

[root@mall ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[gpmall]

name=gpmall

baseurl=file:///root/gpmall-repo

enabled=1

gpgcheck=0

[root@mall ~]# yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

[root@mall ~]# java -version

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

[root@mall ~]# yum install -y redis

[root@mall ~]# yum install -y nginx

[root@mall ~]# yum install -y mariadb mariadb-server

[root@mall ~]# tar -zvxf zookeeper-3.4.14.tar.gz

[root@mall ~]# cd zookeeper-3.4.14/conf

[root@mall conf]# mv zoo_sample.cfg zoo.cfg

[root@mall conf]# cd /root/zookeeper-3.4.14/bin/

[root@mall bin]# ./zkServer.sh start

[root@mall bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: standalone

[root@mall bin]# cd

[root@mall ~]# tar -zvxf kafka_2.11-1.1.1.tgz

[root@mall ~]# cd kafka_2.11-1.1.1/bin/

[root@mall bin]# ./kafka-server-start.sh -daemon ../config/server.properties

[root@mall bin]# jps

7249 Kafka

17347 Jps

6927 QuorumPeerMain

[root@mall bin]# cd

[root@mall ~]# vim /etc/my.cnf

# This group is read both both by the client and the server

# use it for options that affect everything

#

[client-server]

#

# include all files from the config directory

#

!includedir /etc/my.cnf.d

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

[root@mall ~]# systemctl restart mariadb

[root@mall ~]# systemctl enable mariadb

[root@mall ~]# mysqladmin -uroot password 123456

[root@mall ~]# mysql -uroot -p123456

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 9

Server version: 10.3.18-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> create database gpmall;

Query OK, 1 row affected (0.002 sec)

MariaDB [(none)]> grant all privileges on *.* to root@localhost identified by '123456' with grant option;

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> grant all privileges on *.* to root@'%' identified by '123456' with grant option;

Query OK, 0 rows affected (0.001 sec)

MariaDB [(none)]> use gpmall;

Database changed

MariaDB [gpmall]> source /root/gpmall-xiangmubao-danji/gpmall.sql

MariaDB [gpmall]> Ctrl-C -- exit!

[root@mall ~]# vim /etc/redis.conf

Comment out the bind 127.0.0.1 line and change protected-mode yes to protected-mode no:

#bind 127.0.0.1

protected-mode no

[root@mall ~]# systemctl restart redis

[root@mall ~]# systemctl enable redis

Created symlink from /etc/systemd/system/multi-user.target.wants/redis.service to /usr/lib/systemd/system/redis.service.

[root@mall ~]# rm -rf /usr/share/nginx/html/*

[root@mall ~]# cp -rf gpmall-xiangmubao-danji/dist/* /usr/share/nginx/html/

[root@mall ~]# vim /etc/nginx/conf.d/default.conf

# Note: add three location blocks inside the server block

server {

    ...

    location /user {

        proxy_pass http://127.0.0.1:8082;

    }

    location /shopping {

        proxy_pass http://127.0.0.1:8081;

    }

    location /cashier {

        proxy_pass http://127.0.0.1:8083;

    }

    ...

}

[root@mall ~]# systemctl restart nginx

[root@mall ~]# systemctl enable nginx

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@mall ~]# cd gpmall-xiangmubao-danji/

[root@mall gpmall-xiangmubao-danji]# nohup java -jar shopping-provider-0.0.1-SNAPSHOT.jar &

[1] 3531

[root@mall gpmall-xiangmubao-danji]# nohup: ignoring input and appending output to ‘nohup.out’

[root@mall gpmall-xiangmubao-danji]# nohup java -jar user-provider-0.0.1-SNAPSHOT.jar &

[2] 3571

[root@mall gpmall-xiangmubao-danji]# nohup: ignoring input and appending output to ‘nohup.out’

[root@mall gpmall-xiangmubao-danji]# nohup java -jar gpmall-shopping-0.0.1-SNAPSHOT.jar &

[3] 3639

[root@mall gpmall-xiangmubao-danji]# nohup: ignoring input and appending output to ‘nohup.out’

[root@mall gpmall-xiangmubao-danji]# nohup java -jar gpmall-user-0.0.1-SNAPSHOT.jar &

[4] 3676

[root@mall gpmall-xiangmubao-danji]# nohup: ignoring input and appending output to ‘nohup.out’

[root@mall gpmall-xiangmubao-danji]# jobs

[1] Running nohup java -jar shopping-provider-0.0.1-SNAPSHOT.jar &

[2] Running nohup java -jar user-provider-0.0.1-SNAPSHOT.jar &

[3]- Running nohup java -jar gpmall-shopping-0.0.1-SNAPSHOT.jar &

[4]+ Running nohup java -jar gpmall-user-0.0.1-SNAPSHOT.jar &

[root@mall gpmall-xiangmubao-danji]# curl http://192.168.1.111/#/home

<!DOCTYPE html><html><head><meta charset=utf-8><title>1+x-示例项目</title><meta name=keywords content=""><meta name=description content=""><meta http-equiv=X-UA-Compatible content="IE=Edge"><meta name=wap-font-scale content=no><link rel="shortcut icon " type=images/x-icon href=/static/images/favicon.ico><link href=/static/css/app.8d4edd335a61c46bf5b6a63444cd855a.css rel=stylesheet></head><body><div id=app></div><script type=text/javascript src=/static/js/manifest.2d17a82764acff8145be.js></script><script type=text/javascript src=/static/js/vendor.4f07d3a235c8a7cd4efe.js></script><script type=text/javascript src=/static/js/app.81180cbb92541cdf912f.js></script></body></html><style>body{

min-width:1250px;}</style>
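
One detail worth knowing about the curl command above: the `#/home` part of the URL is a fragment, which is handled by the front-end router in the browser and is not sent to the server, so the request that reaches nginx is for `/`. A short sketch with Python's standard `urllib.parse` shows the split (the IP address is the one used in this walkthrough):

```python
from urllib.parse import urlsplit

parts = urlsplit("http://192.168.1.111/#/home")

# The path is what the HTTP request asks the server for;
# the fragment stays on the client side.
print("host:", parts.netloc)
print("path:", parts.path)
print("fragment:", parts.fragment)
```

This is why the response is the single-page application's index.html rather than a page specific to /home.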

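7. Zookeeper cluster (40 points)

Using the three provided virtual machines and the software package, install and configure a Zookeeper cluster. When the configuration is complete, run the ./zkServer.sh status command in the appropriate directory to check the state of the three Zookeeper nodes, and submit the three states as text in the answer box.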

Zookeeper1:

[root@xiandian ~]# hostnamectl set-hostname zookeeper1

[root@xiandian ~]# bash

[root@zookeeper1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.10 zookeeper1

192.168.1.20 zookeeper2

192.168.1.30 zookeeper3

# Note: upload gpmall-repo to the zookeeper1 node and share it over vsftpd; I uploaded it to /opt

[root@zookeeper1 ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[gpmall]

name=gpmall

baseurl=file:///opt/gpmall-repo

enabled=1

gpgcheck=0

[root@zookeeper1 ~]# yum repolist

[root@zookeeper1 ~]# yum install -y vsftpd

[root@zookeeper1 ~]# vim /etc/vsftpd/vsftpd.conf

# Note: add:

anon_root=/opt

[root@zookeeper1 ~]# systemctl restart vsftpd

[root@zookeeper1 ~]# yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

[root@zookeeper1 ~]# java -version

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

# Note: upload zookeeper-3.4.14.tar.gz to all three nodes, or share it over NFS

[root@zookeeper1 ~]# tar -zvxf zookeeper-3.4.14.tar.gz

[root@zookeeper1 ~]# cd zookeeper-3.4.14/conf/

[root@zookeeper1 conf]# mv zoo_sample.cfg zoo.cfg

[root@zookeeper1 conf]# vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/tmp/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=192.168.1.10:2888:3888

server.2=192.168.1.20:2888:3888

server.3=192.168.1.30:2888:3888

[root@zookeeper1 conf]# grep -n '^[a-zA-Z]' zoo.cfg

2:tickTime=2000

5:initLimit=10

8:syncLimit=5

12:dataDir=/tmp/zookeeper

14:clientPort=2181

29:server.1=192.168.1.10:2888:3888

30:server.2=192.168.1.20:2888:3888

31:server.3=192.168.1.30:2888:3888

[root@zookeeper1 conf]# cd

[root@zookeeper1 ~]# mkdir /tmp/zookeeper

[root@zookeeper1 ~]# vim /tmp/zookeeper/myid

1

[root@zookeeper1 ~]# cat /tmp/zookeeper/myid

1

[root@zookeeper1 ~]# cd zookeeper-3.4.14/bin/

[root@zookeeper1 bin]# ./zkServer.sh start

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@zookeeper1 bin]# ./zkServer.sh status

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: follower
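
A node only reports `follower` or `leader` once a majority of the servers listed in zoo.cfg are up and have elected a leader. As a minimal sketch of that rule, the code below parses the `server.N` lines used above and computes the quorum size; the function names are made up for illustration.

```python
import re

ZOO_CFG_SERVERS = """\
server.1=192.168.1.10:2888:3888
server.2=192.168.1.20:2888:3888
server.3=192.168.1.30:2888:3888
"""


def parse_servers(zoo_cfg: str) -> dict:
    """Map each server id (the value stored in myid) to its host."""
    servers = {}
    for line in zoo_cfg.splitlines():
        match = re.match(r"^server\.(\d+)=([^:]+):(\d+):(\d+)$", line.strip())
        if match:
            servers[int(match.group(1))] = match.group(2)
    return servers


def quorum_size(ensemble_size: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


servers = parse_servers(ZOO_CFG_SERVERS)
print(len(servers), "servers, quorum of", quorum_size(len(servers)))
```

With three servers the quorum is two, so the cluster keeps working if one node fails; this is also why `./zkServer.sh status` on the first node cannot show a mode until a second node has been started.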

Zookeeper2:

[root@xiandian ~]# hostnamectl set-hostname zookeeper2

[root@xiandian ~]# bash

[root@zookeeper2 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.10 zookeeper1

192.168.1.20 zookeeper2

192.168.1.30 zookeeper3

[root@zookeeper2 ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[gpmall]

name=gpmall

baseurl=ftp://zookeeper1/gpmall-repo

enabled=1

gpgcheck=0

[root@zookeeper2 ~]# yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

[root@zookeeper2 ~]# java -version

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

# Note: upload zookeeper-3.4.14.tar.gz to all three nodes, or share it over NFS

[root@zookeeper2 ~]# tar -zvxf zookeeper-3.4.14.tar.gz

[root@zookeeper2 ~]# cd zookeeper-3.4.14/conf/

[root@zookeeper2 conf]# mv zoo_sample.cfg zoo.cfg

[root@zookeeper2 conf]# vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/tmp/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=192.168.1.10:2888:3888

server.2=192.168.1.20:2888:3888

server.3=192.168.1.30:2888:3888

[root@zookeeper2 conf]# grep -n '^[a-zA-Z]' zoo.cfg

2:tickTime=2000

5:initLimit=10

8:syncLimit=5

12:dataDir=/tmp/zookeeper

14:clientPort=2181

29:server.1=192.168.1.10:2888:3888

30:server.2=192.168.1.20:2888:3888

31:server.3=192.168.1.30:2888:3888

[root@zookeeper2 conf]# cd

[root@zookeeper2 ~]# mkdir /tmp/zookeeper

[root@zookeeper2 ~]# vim /tmp/zookeeper/myid

2

[root@zookeeper2 ~]# cat /tmp/zookeeper/myid

2

[root@zookeeper2 ~]# cd zookeeper-3.4.14/bin/

[root@zookeeper2 bin]# ./zkServer.sh start

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@zookeeper2 bin]# ./zkServer.sh status

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: leader

Zookeeper3:

[root@xiandian ~]# hostnamectl set-hostname zookeeper3

[root@xiandian ~]# bash

[root@zookeeper3 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.10 zookeeper1

192.168.1.20 zookeeper2

192.168.1.30 zookeeper3

[root@zookeeper3 ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[gpmall]

name=gpmall

baseurl=ftp://zookeeper1/gpmall-repo

enabled=1

gpgcheck=0

[root@zookeeper3 ~]# yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

[root@zookeeper3 ~]# java -version

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

# Note: upload zookeeper-3.4.14.tar.gz to all three nodes, or share it over NFS.

[root@zookeeper3 ~]# tar -zvxf zookeeper-3.4.14.tar.gz

[root@zookeeper3 ~]# cd zookeeper-3.4.14/conf/

[root@zookeeper3 conf]# mv zoo_sample.cfg zoo.cfg

[root@zookeeper3 conf]# vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/tmp/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=192.168.1.10:2888:3888

server.2=192.168.1.20:2888:3888

server.3=192.168.1.30:2888:3888

[root@zookeeper3 conf]# grep -n '^[a-zA-Z]' zoo.cfg

2:tickTime=2000

5:initLimit=10

8:syncLimit=5

12:dataDir=/tmp/zookeeper

14:clientPort=2181

29:server.1=192.168.1.10:2888:3888

30:server.2=192.168.1.20:2888:3888

31:server.3=192.168.1.30:2888:3888

[root@zookeeper3 conf]# cd

[root@zookeeper3 ~]# mkdir /tmp/zookeeper

[root@zookeeper3 ~]# vim /tmp/zookeeper/myid

3

[root@zookeeper3 ~]# cat /tmp/zookeeper/myid

3

[root@zookeeper3 ~]# cd zookeeper-3.4.14/bin/

[root@zookeeper3 bin]# ./zkServer.sh start

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@zookeeper3 bin]# ./zkServer.sh status

zookeeper JMX enabled by default

Using config: /root/zookeeper-3.4.14/bin/../conf/zoo.cfg

Mode: follower
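The per-node differences in the steps above come down to the three server.N lines (identical on every node) and the myid value (unique per node). A minimal sketch of generating both from one IP list follows; the function names zk_server_lines and zk_myid_for are hypothetical helpers, and the IP list simply mirrors the addresses used in this walkthrough.

```shell
# Sketch only: ZK_NODES mirrors the walkthrough; the helper names are hypothetical.
ZK_NODES="192.168.1.10 192.168.1.20 192.168.1.30"

# Print one "server.<id>=<ip>:2888:3888" line per node, ids starting at 1.
# The body runs in a subshell so the loop variables do not leak.
zk_server_lines() (
    id=1
    for ip in $ZK_NODES; do
        echo "server.${id}=${ip}:2888:3888"
        id=$((id + 1))
    done
)

# Print the myid value (position in the list) for the IP given as $1.
# "exit" only leaves the subshell that forms the function body.
zk_myid_for() (
    id=1
    for ip in $ZK_NODES; do
        if [ "$ip" = "$1" ]; then
            echo "$id"
            exit 0
        fi
        id=$((id + 1))
    done
    exit 1
)

zk_server_lines
zk_myid_for 192.168.1.20
```

On a node, the output of zk_server_lines could be appended to conf/zoo.cfg and the output of zk_myid_for redirected into /tmp/zookeeper/myid, in place of the manual vim edits.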

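8. Kafka cluster (40 points)

Using the three provided virtual machines and the software packages, install and configure a Kafka cluster. When the configuration is complete, run ./kafka-topics.sh --create --zookeeper <your IP>:2181 --replication-factor 1 --partitions 1 --topic test in the appropriate directory to create a topic, and submit the output returned by the command as text in the answer box.

- Upload kafka_2.11-1.1.1.tgz to all three nodes. (Kafka can be built on top of the previous task, because Kafka depends on ZooKeeper.)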

Zookeeper1:

[root@zookeeper1 ~]# tar -zvxf kafka_2.11-1.1.1.tgz

[root@zookeeper1 ~]# cd kafka_2.11-1.1.1/config/

[root@zookeeper1 config]# vim server.properties

# Note: comment out broker.id=0 and zookeeper.connect=localhost:2181 with # (in vim, type / followed by the name to search for it), then add three new lines:

#broker.id=0

#zookeeper.connect=localhost:2181

broker.id = 1

zookeeper.connect = 192.168.1.10:2181,192.168.1.20:2181,192.168.1.30:2181

listeners = PLAINTEXT://192.168.1.10:9092

[root@zookeeper1 config]# cd /root/kafka_2.11-1.1.1/bin/

[root@zookeeper1 bin]# ./kafka-server-start.sh -daemon ../config/server.properties

[root@zookeeper1 bin]# jps

17645 QuorumPeerMain

18029 Kafka

18093 Jps

# Note: create the topic (replace the IP below with your own node's IP):

[root@zookeeper1 bin]# ./kafka-topics.sh --create --zookeeper 192.168.1.10:2181 --replication-factor 1 --partitions 1 --topic test

Created topic "test".

# Note: check the result:

[root@zookeeper1 bin]# ./kafka-topics.sh --list --zookeeper 192.168.1.10:2181

test

Zookeeper2:

[root@zookeeper2 ~]# tar -zvxf kafka_2.11-1.1.1.tgz

[root@zookeeper2 ~]# cd kafka_2.11-1.1.1/config/

[root@zookeeper2 config]# vim server.properties

# Note: comment out broker.id=0 and zookeeper.connect=localhost:2181 with # (in vim, type / followed by the name to search for it), then add three new lines:

#broker.id=0

#zookeeper.connect=localhost:2181

broker.id = 2

zookeeper.connect = 192.168.1.10:2181,192.168.1.20:2181,192.168.1.30:2181

listeners = PLAINTEXT://192.168.1.20:9092

[root@zookeeper2 config]# cd /root/kafka_2.11-1.1.1/bin/

[root@zookeeper2 bin]# ./kafka-server-start.sh -daemon ../config/server.properties

[root@zookeeper2 bin]# jps

3573 Kafka

3605 Jps

3178 QuorumPeerMain

# Note: check the result:

[root@zookeeper2 bin]# ./kafka-topics.sh --list --zookeeper 192.168.1.20:2181

test

Zookeeper3:

[root@zookeeper3 ~]# tar -zvxf kafka_2.11-1.1.1.tgz

[root@zookeeper3 ~]# cd kafka_2.11-1.1.1/config/

[root@zookeeper3 config]# vim server.properties

# Note: comment out broker.id=0 and zookeeper.connect=localhost:2181 with # (in vim, type / followed by the name to search for it), then add three new lines:

#broker.id=0

#zookeeper.connect=localhost:2181

broker.id = 3

zookeeper.connect = 192.168.1.10:2181,192.168.1.20:2181,192.168.1.30:2181

listeners = PLAINTEXT://192.168.1.30:9092

[root@zookeeper3 config]# cd /root/kafka_2.11-1.1.1/bin/

[root@zookeeper3 bin]# ./kafka-server-start.sh -daemon ../config/server.properties

[root@zookeeper3 bin]# jps

3904 QuorumPeerMain

4257 Kafka

4300 Jps

# Note: check the result:

[root@zookeeper3 bin]# ./kafka-topics.sh --list --zookeeper 192.168.1.30:2181

test
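The server.properties edit is the same on every node except for broker.id and the listener address, so it can be scripted instead of done by hand in vim. The following is a rough sketch under a few assumptions: GNU sed (for -i), a hypothetical helper named configure_broker, and a throwaway file standing in for the real config/server.properties. It writes the keys without spaces around =, which the properties format treats the same as the spaced form used above.

```shell
# Sketch only: configure_broker is a hypothetical helper; assumes GNU sed.
ZK_CONNECT="192.168.1.10:2181,192.168.1.20:2181,192.168.1.30:2181"

# Arguments: $1 = properties file, $2 = broker id, $3 = this node's IP.
configure_broker() {
    # Comment out the two shipped defaults.
    sed -i \
        -e 's/^broker\.id=0$/#broker.id=0/' \
        -e 's/^zookeeper\.connect=localhost:2181$/#zookeeper.connect=localhost:2181/' \
        "$1"
    # Append the per-node settings.
    {
        echo "broker.id=$2"
        echo "zookeeper.connect=$ZK_CONNECT"
        echo "listeners=PLAINTEXT://$3:9092"
    } >> "$1"
}

# Demo on a temp file that contains only the two default lines.
demo=$(mktemp)
printf 'broker.id=0\nzookeeper.connect=localhost:2181\n' > "$demo"
configure_broker "$demo" 2 192.168.1.20
cat "$demo"
```

Running the helper twice on the same file would append the settings twice, so it is meant for a freshly unpacked configuration.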

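9. Zabbix-server node setup (40 points)

Using the provided virtual machine and software packages, build the server side of the Zabbix monitoring system. After the build, start the services, then check which ports are listening with netstat -ntpl, and submit the output of netstat -ntpl as text in the answer box.

Zabbix-server:

- Upload the zabbix files to the zabbix-server node, configure the yum repository, and install the vsftpd service:

# Note: configure the yum repository. The vsftpd service below is optional; set it up only if other nodes need it.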

[root@zabbix-server ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[zabbix]

name=zabbix

baseurl=file:///opt/zabbix

enabled=1

gpgcheck=0

[root@zabbix-server ~]# yum install -y vsftpd

[root@zabbix-server ~]# vim /etc/vsftpd/vsftpd.conf

# Note: add:

anon_root=/opt

[root@zabbix-server ~]# systemctl restart vsftpd

[root@zabbix-server ~]# yum install httpd -y

[root@zabbix-server ~]# yum install -y mariadb-server mariadb

[root@zabbix-server ~]# yum install -y zabbix-server-mysql zabbix-web-mysql zabbix-agent mariadb-server

[root@zabbix-server ~]# systemctl restart httpd

[root@zabbix-server ~]# systemctl enable httpd

[root@zabbix-server ~]# systemctl restart mariadb

[root@zabbix-server ~]# systemctl enable mariadb

[root@zabbix-server ~]# mysql -uroot -p000000

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 18

Server version: 10.3.18-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> create database zabbix character set utf8 collate utf8_bin;

Query OK, 1 row affected (0.010 sec)

MariaDB [(none)]> grant all privileges on zabbix.* to zabbix@'%' identified by 'zabbix';

Query OK, 0 rows affected (0.031 sec)

MariaDB [(none)]> grant all privileges on zabbix.* to zabbix@localhost identified by 'zabbix';

Query OK, 0 rows affected (0.009 sec)

[root@zabbix-server ~]# cd /usr/share/doc/zabbix-server-mysql-3.4.15/

# Note: to avoid row-size errors during the import in the next step, edit the configuration file first:

[root@zabbix-server zabbix-server-mysql-3.4.15]# vim /etc/my.cnf

# Note: add under [mysqld]:

innodb_strict_mode = 0

[root@zabbix-server zabbix-server-mysql-3.4.15]# systemctl restart mariadb

[root@zabbix-server zabbix-server-mysql-3.4.15]# zcat create.sql.gz | mysql -uroot -p000000 zabbix

[root@zabbix-server ~]# vim /etc/php.ini

# Note: add one line under [Date]:

date.timezone = PRC

[root@zabbix-server ~]# vim /etc/httpd/conf.d/zabbix.conf

# Note: add one line under <IfModule mod_php5.c>:

php_value date.timezone Asia/Shanghai

[root@zabbix-server ~]# systemctl restart httpd

[root@zabbix-server ~]# vim /etc/zabbix/zabbix_server.conf

[root@zabbix-server ~]# grep -n '^[a-zA-Z]' /etc/zabbix/zabbix_server.conf

38:LogFile=/var/log/zabbix/zabbix_server.log

49:LogFileSize=0

72:PidFile=/var/run/zabbix/zabbix_server.pid

82:SocketDir=/var/run/zabbix

91:DBHost=localhost

100:DBName=zabbix

116:DBUser=zabbix

124:DBPassword=zabbix

132:DBSocket=/var/lib/mysql/mysql.sock

329:SNMPTrapperFile=/var/log/snmptrap/snmptrap.log

445:Timeout=4

486:AlertScriptsPath=/usr/lib/zabbix/alertscripts

495:ExternalScripts=/usr/lib/zabbix/externalscripts

531:LogSlowQueries=3000

[root@zabbix-server ~]# systemctl restart zabbix-server

[root@zabbix-server ~]# netstat -ntpl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:10051 0.0.0.0:* LISTEN 10611/zabbix_server

tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 10510/mysqld

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 975/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 886/master

tcp6 0 0 :::10051 :::* LISTEN 10611/zabbix_server

tcp6 0 0 :::80 :::* LISTEN 10579/httpd

tcp6 0 0 :::21 :::* LISTEN 10015/vsftpd

tcp6 0 0 :::22 :::* LISTEN 975/sshd

tcp6 0 0 ::1:25 :::* LISTEN 886/master
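Before submitting the netstat output, it can help to confirm that the ports this setup is expected to open (10051 for zabbix_server, 3306 for MariaDB, 80 for httpd) are really in the LISTEN state. A small sketch follows; listening_ports is a hypothetical helper, and the sample text is copied from the output above so the example runs without a Zabbix server. On the real node one would pipe in netstat -ntpl instead.

```shell
# Sketch only: listening_ports is a hypothetical helper that reads netstat -ntpl text on stdin.
# It prints the unique port numbers taken from the Local Address column of LISTEN rows.
listening_ports() {
    awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' | sort -un
}

# Sample rows copied from the walkthrough output.
sample='tcp 0 0 0.0.0.0:10051 0.0.0.0:* LISTEN 10611/zabbix_server
tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 10510/mysqld
tcp6 0 0 :::80 :::* LISTEN 10579/httpd'

for port in 10051 3306 80; do
    if printf '%s\n' "$sample" | listening_ports | grep -qx "$port"; then
        echo "port $port: listening"
    else
        echo "port $port: NOT listening"
    fi
done
```

Splitting the address on the last colon keeps the helper working for both the IPv4 form (0.0.0.0:3306) and the IPv6 form (:::80).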

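10. Keystone management (40 points)

Using the provided "all-in-one" image, check the status of each OpenStack service yourself and troubleshoot any problems. In Keystone, create a user named testuser with the password password, then view the details of testuser. Submit the output of openstack user show testuser as text in the answer box.

[root@xiandian ~]# source /etc/keystone/admin-openrc.sh

[root@xiandian ~]# openstack user create --domain xiandian --password password testuser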

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | 9321f21a94ef4f85993e92a228892418 |

| enabled | True |

| id | 5ad593ca940d427886187a4a666a816d |

| name | testuser |

+-----------+----------------------------------+

[root@xiandian ~]# openstack user show testuser

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | 9321f21a94ef4f85993e92a228892418 |

| enabled | True |

| id | 5ad593ca940d427886187a4a666a816d |

| name | testuser |

+-----------+----------------------------------+

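11. Glance management (40 points)

Using the provided "all-in-one" image, check the status of each OpenStack service yourself and troubleshoot any problems. Use the provided cirros-0.3.4-x86_64-disk.img image: upload it with the glance command and name it mycirros, then submit the output of glance image-show id as text in the answer box.

[root@xiandian ~]# glance image-create --name mycirros --disk-format qcow2 --container-format bare --file /root/cirros-0.3.4-x86_64-disk.img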

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

| container_format | bare |

| created_at | 2020-05-15T17:18:21Z |

| disk_format | qcow2 |

| id | 935a7ac5-a6dd-4cb4-94b3-941d1b2ddd23 |

| min_disk | 0 |

| min_ram | 0 |

| name | mycirros |

| owner | f9ff39ba9daa4e5a8fee1fc50e2d2b34 |

| protected | False |

| size | 13287936 |

| status | active |

| tags | [] |

| updated_at | 2020-05-15T17:18:22Z |

| virtual_size | None |

| visibility | private |

+------------------+--------------------------------------+

[root@xiandian ~]# glance image-show 935a7ac5-a6dd-4cb4-94b3-941d1b2ddd23

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

| container_format | bare |

| created_at | 2020-05-15T17:18:21Z |

| disk_format | qcow2 |

| id | 935a7ac5-a6dd-4cb4-94b3-941d1b2ddd23 |

| min_disk | 0 |

| min_ram | 0 |

| name | mycirros |

| owner | f9ff39ba9daa4e5a8fee1fc50e2d2b34 |

| protected | False |

| size | 13287936 |

| status | active |

| tags | [] |

| updated_at | 2020-05-15T17:18:22Z |

| virtual_size | None |

| visibility | private |

+------------------+--------------------------------------+
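The id passed to glance image-show above is copied by hand from the image-create table. As an illustration of how that value could be picked out of the two-column table automatically, here is a rough sketch; table_value is a hypothetical helper, and the sample table is an excerpt of the output shown above so the example runs without an OpenStack environment.

```shell
# Sketch only: table_value is a hypothetical helper.
# $1 = field name; the two-column table is read from stdin.
table_value() {
    awk -F'|' -v key="$1" '
        NF >= 3 {
            k = $2; v = $3
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the field name
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) { print v; exit }
        }'
}

# Excerpt of the image-create output from the walkthrough.
sample='+------------------+--------------------------------------+
| Property         | Value                                |
+------------------+--------------------------------------+
| id               | 935a7ac5-a6dd-4cb4-94b3-941d1b2ddd23 |
| name             | mycirros                             |
+------------------+--------------------------------------+'

printf '%s\n' "$sample" | table_value id
```

The same helper would apply to the openstack user show and nova flavor-show tables, since they share the Field/Property and Value layout.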

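12. Nova management (40 points)

Using the provided "all-in-one" image, check the status of each OpenStack service yourself and troubleshoot any problems. Use the nova commands to create a flavor named exam with ID 1234, 1024 MB of memory, a 20 GB disk, and 2 virtual CPUs, then view the details of exam. Submit the output of nova flavor-show id as text in the answer box.

[root@xiandian ~]# nova flavor-create exam 1234 1024 20 2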

+------+------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+------+------+-----------+------+-----------+------+-------+-------------+-----------+

| 1234 | exam | 1024 | 20 | 0 | | 2 | 1.0 | True |

+------+------+-----------+------+-----------+------+-------+-------------+-----------+

[root@xiandian ~]# nova flavor-show 1234

+----------------------------+-------+

| Property | Value |

+----------------------------+-------+

| OS-FLV-DISABLED:disabled | False |

| OS-FLV-EXT-DATA:ephemeral | 0 |

| disk | 20 |

| extra_specs | {} |

| id | 1234 |

| name | exam |

| os-flavor-access:is_public | True |

| ram | 1024 |

| rxtx_factor | 1.0 |

| swap | |

| vcpus | 2 |

+----------------------------+-------+

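13. Docker installation (40 points)

Using the provided virtual machine and software packages, configure the YUM repository yourself and install the docker-ce service. After installation, run docker info and submit its output as text in the answer box.

- First upload Docker.tar.gz to the /root directory and extract it: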

[root@xiandian ~]# tar -zvxf Docker.tar.gz

[root@xiandian ~]# vim /etc/yum.repos.d/local.repo

[centos]

name=centos

baseurl=file:///opt/centos

enabled=1

gpgcheck=0

[docker]

name=docker

baseurl=file:///root/Docker

enabled=1

gpgcheck=0

[root@xiandian ~]# iptables -F

[root@xiandian ~]# iptables -X

[root@xiandian ~]# iptables -Z

[root@xiandian ~]# iptables-save

# Generated by iptables-save v1.4.21 on Fri May 15 02:00:29 2020

*filter

:INPUT ACCEPT [20:1320]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [11:1092]

COMMIT

# Completed on Fri May 15 02:00:29 2020

[root@xiandian ~]# vim /etc/selinux/config

SELINUX=disabled

# Note: disable the swap partition:

[root@xiandian ~]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@xiandian ~]# free -m

total used free shared buff/cache available

Mem: 1824 95 1591 8 138 1589

Swap: 0 0 0

# Note: before configuring IP forwarding, upgrade the system and reboot; otherwise two of the rules may report errors:

[root@xiandian ~]# yum upgrade -y

[root@xiandian ~]# reboot

[root@xiandian ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@xiandian ~]# modprobe br_netfilter

[root@xiandian ~]# sysctl -p

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
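If this step is repeated, editing /etc/sysctl.conf by hand makes it easy to end up with duplicate entries. A minimal sketch of adding each setting only when it is missing follows; ensure_line is a hypothetical helper, and the demo writes to a temp file rather than to /etc/sysctl.conf.

```shell
# Sketch only: ensure_line is a hypothetical helper.
# $1 = file, $2 = exact line that must be present.
ensure_line() {
    # Append the line only when no identical line exists yet.
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

conf=$(mktemp)   # stands in for /etc/sysctl.conf in this demo
for setting in \
    "net.ipv4.ip_forward = 1" \
    "net.bridge.bridge-nf-call-ip6tables = 1" \
    "net.bridge.bridge-nf-call-iptables = 1"
do
    ensure_line "$conf" "$setting"
    ensure_line "$conf" "$setting"   # the second call changes nothing
done
cat "$conf"
```

On the real host the file argument would be /etc/sysctl.conf, followed by modprobe br_netfilter and sysctl -p as shown above.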

[root@xiandian ~]# yum install -y yum-utils device-mapper-persistent-data

[root@xiandian ~]# yum install -y docker-ce-18.09.6 docker-ce-cli-18.09.6 containerd.io

[root@xiandian ~]# systemctl daemon-reload

[root@xiandian ~]# systemctl restart docker

[root@xiandian ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@xiandian ~]# docker info

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 18.09.6

Storage Driver: devicemapper

Pool Name: docker-253:0-100765090-pool

Pool Blocksize: 65.54kB

Base Device Size: 10.74GB

Backing Filesystem: xfs

Udev Sync Supported: true

Data file: /dev/loop0

Metadata file: /dev/loop1

Data loop file: /var/lib/docker/devicemapper/devicemapper/data

Metadata loop file: /var/lib/docker/devicemapper/devicemapper/metadata

Data Space Used: 11.73MB

Data Space Total: 107.4GB

Data Space Available: 24.34GB

Metadata Space Used: 17.36MB

Metadata Space Total: 2.147GB

Metadata Space Available: 2.13GB

Thin Pool Minimum Free Space: 10.74GB

Deferred Removal Enabled: true

Deferred Deletion Enabled: true

Deferred Deleted Device Count: 0

Library Version: 1.02.164-RHEL7 (2019-08-27)

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: b34a5c8af56e510852c35414db4c1f4fa6172339

runc version: 3e425f80a8c931f88e6d94a8c831b9d5aa481657

init version: fec3683

Security Options:

seccomp

Profile: default

Kernel Version: 3.10.0-1127.8.2.el7.x86_64

Operating System: CentOS Linux 7 (Core)

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 1.777GiB

Name: xiandian

ID: OUR6:6ERV:3UCH:WJCM:TDLL:5ATV:E7IQ:HLAR:JKQB:OBK2:HZ7G:JC3Q

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

Product License: Community Engine

WARNING: the devicemapper storage-driver is deprecated, and will be removed in a future release.

WARNING: devicemapper: usage of loopback devices is strongly discouraged for production use.

Use `--storage-opt dm.thinpooldev` to specify a custom block storage device.

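14. Deploying a Swarm cluster (40 points)

Using the provided virtual machines and software packages, install docker-ce. Deploy a Swarm cluster and install the Portainer graphical management tool. When the deployment is complete, open ip:9000 in a browser to enter the Swarm console. Submit the output of curl swarm ip:9000 as text in the answer box.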

Master:

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.111 master

192.168.1.112 node

[root@master ~]# yum install -y chrony

[root@master ~]# vim /etc/chrony.conf

# Note: comment out the first four server lines, then add the following in an empty spot:

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

local stratum 10

server master iburst

allow all

[root@master ~]# systemctl restart chronyd

[root@master ~]# systemctl enable chronyd

[root@master ~]# timedatectl set-ntp true

[root@master ~]# vim /lib/systemd/system/docker.service

# Note: change

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

# Note: to

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl restart docker

[root@master ~]# ./image.sh

[root@master ~]# docker swarm init --advertise-addr 192.168.1.111

Swarm initialized: current node (vclsb89nhs306kei93iv3rwa5) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-6d9c93ecv0e1ux4u8z5wj4ybhbkt2iadlnh74omjipyr3dwk4u-euf7iax6ubmta5qbcrbg4t3j4 192.168.1.111:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

[root@master ~]# docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-6d9c93ecv0e1ux4u8z5wj4ybhbkt2iadlnh74omjipyr3dwk4u-euf7iax6ubmta5qbcrbg4t3j4 192.168.1.111:2377

[root@master ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

vclsb89nhs306kei93iv3rwa5 * master Ready Active Leader 18.09.6

j98yunqmdkh1ztr7thhbzumcw node Ready Active 18.09.6

[root@master ~]# docker volume create portainer_data

portainer_data

[root@master ~]# docker service create --name portainer --publish 9000:9000 --replicas=1 --constraint 'node.role == manager' --mount type=bind,src=//var/run/docker.sock,dst=/var/run/docker.sock --mount type=volume,src=portainer_data,dst=/data portainer/portainer -H unix:///var/run/docker.sock

k77m7aydf2idm1x02j60cmwsj

overall progress: 1 out of 1 tasks

1/1: running

verify: Service converged

Node:

[root@node ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.111 master

192.168.1.112 node

[root@node ~]# yum install -y chrony

[root@node ~]# vim /etc/chrony.conf

# Note: comment out the first four server lines, then add the following in an empty spot:

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server 192.168.1.111 iburst

local stratum 10

server master iburst

allow all

[root@node ~]# systemctl restart chronyd

[root@node ~]# systemctl enable chronyd

[root@node ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* master 11 6 177 42 +17us[ +60us] +/- 52ms

[root@node ~]# vim /lib/systemd/system/docker.service

# Note: change

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

# Note: to

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H unix:///var/run/docker.sock

[root@node ~]# systemctl daemon-reload

[root@node ~]# systemctl restart docker

[root@node ~]# docker swarm join --token SWMTKN-1-6d9c93ecv0e1ux4u8z5wj4ybhbkt2iadlnh74omjipyr3dwk4u-euf7iax6ubmta5qbcrbg4t3j4 192.168.1.111:2377

This node joined a swarm as a worker.
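The join command run on the node is copied from the docker swarm init output on the master. As a sketch of how that line could be pulled out of the output instead of copied by hand, the hypothetical helper extract_join_cmd below reads the text on stdin; the sample is the init output from this walkthrough, so the example runs without Docker.

```shell
# Sketch only: extract_join_cmd is a hypothetical helper.
# It prints the first line that is a complete "docker swarm join --token ..." command.
extract_join_cmd() {
    sed -n 's/^[[:space:]]*\(docker swarm join --token .*\)$/\1/p' | head -n 1
}

# Init output copied from the walkthrough.
sample=$(cat <<'EOF'
Swarm initialized: current node (vclsb89nhs306kei93iv3rwa5) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-6d9c93ecv0e1ux4u8z5wj4ybhbkt2iadlnh74omjipyr3dwk4u-euf7iax6ubmta5qbcrbg4t3j4 192.168.1.111:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
EOF
)

printf '%s\n' "$sample" | extract_join_cmd
```

Docker can also print just the token with docker swarm join-token -q worker, which avoids parsing altogether when the master is reachable.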

[root@master ~]# curl 192.168.1.111:9000

<!DOCTYPE html><html lang="en" ng-app="portainer">

<head>

<meta charset="utf-8">

<title>Portainer</title>

<meta name="description" content="">

<meta name="author" content="Portainer.io">

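15. Shell script completion (40 points)

The script below automatically configures the redis service. An engineer accidentally deleted some of its code, but the comments are still there. Fill in the missing code according to the comments, then submit the code you filled in, in order, as text in the answer box.

redis(){

cd

# Edit the redis configuration file: comment out bind 127.0.0.1

sed -i (fill in here) /etc/redis.conf

# Edit the redis configuration file: change protected-mode yes to protected-mode no

sed -i (fill in here) /etc/redis.conf

# Start the redis service

systemctl start redis

# Enable start on boot

systemctl enable redis

if [ $? -eq 0 ]

then

sleep 3

echo -e "\033[36m==========redis started successfully==========\033[0m"

else

echo -e "\033[31mredis failed to start, please check\033[0m"

exit 1

fi

sleep 2

}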

sed -i 's/bind 127.0.0.1/#bind 127.0.0.1/g' /etc/redis.conf

sed -i 's/protected-mode yes/protected-mode no/g' /etc/redis.conf
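To see what the two substitutions do before touching /etc/redis.conf, they can be tried on a throwaway file. The sketch below assumes GNU sed (for -i); apply_redis_fixes is a hypothetical wrapper around the two answer lines, and the three-line sample file only imitates the relevant part of a redis configuration.

```shell
# Sketch only: apply_redis_fixes is a hypothetical wrapper; assumes GNU sed.
# $1 = path of the configuration file to edit in place.
apply_redis_fixes() {
    sed -i 's/bind 127.0.0.1/#bind 127.0.0.1/g' "$1"
    sed -i 's/protected-mode yes/protected-mode no/g' "$1"
}

conf=$(mktemp)   # stands in for /etc/redis.conf
printf 'bind 127.0.0.1\nprotected-mode yes\nport 6379\n' > "$conf"

apply_redis_fixes "$conf"
cat "$conf"
```

Because the first pattern is not anchored to the start of the line, running it a second time would turn #bind into ##bind; the line stays commented out, but the substitution is not strictly idempotent.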

© Copyright notice: copyright belongs to the author. If you repost this article, please credit the source. Thank you.
