Integrating Seata with Spring Boot


I. Introduction

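In an earlier post I simulated a 2PC (two-phase commit) transaction with multiple threads. Solving distributed transactions yourself with hand-written multithreading is painful, so this post introduces a popular distributed transaction framework: Alibaba's Seata.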

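- This post covers basic use of the distributed transaction framework seata (1.4.2) in a real project.

- seata has four modes: AT, TCC, Saga, and XA. This post uses AT mode; readers interested in the other modes can explore them on their own.

- Project stack used in this post: springboot 2.2.4.RELEASE + nacos 1.4.2 + seata 1.4.2 + mysql 5.6.45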
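To make AT mode concrete, here is a toy, in-memory Java model of its two phases. In AT mode each branch commits its local transaction in phase one and, in that same local transaction, writes the changed row's before image to the undo_log table. In phase two, a global commit only has to discard those undo records, while a global rollback restores the before images. In the sketch, plain maps stand in for two services' databases. The class and method names are hypothetical, and it deliberately leaves out everything the real framework adds, such as the TC, global locks, and after-image checks.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Toy model of the AT-mode undo-log idea; NOT Seata's implementation.
class AtModeSketch {

    // One undo record: the changed row and its value before the change (null = row did not exist).
    static final class UndoEntry {
        final Map<Integer, String> table;
        final int id;
        final String beforeImage;

        UndoEntry(Map<Integer, String> table, int id, String beforeImage) {
            this.table = table;
            this.id = id;
            this.beforeImage = beforeImage;
        }
    }

    // Undo records of one global transaction (stands in for undo_log rows in each database).
    private final Deque<UndoEntry> undoLog = new ArrayDeque<>();

    // Phase 1: apply the change right away ("local commit") but remember the before image.
    void delete(Map<Integer, String> table, int id) {
        undoLog.push(new UndoEntry(table, id, table.get(id)));
        table.remove(id);
    }

    // Phase 2, commit: data is already committed, so only the undo records are discarded.
    void globalCommit() {
        undoLog.clear();
    }

    // Phase 2, rollback: restore before images in reverse order of the changes.
    void globalRollback() {
        while (!undoLog.isEmpty()) {
            UndoEntry e = undoLog.pop();
            if (e.beforeImage == null) {
                e.table.remove(e.id);
            } else {
                e.table.put(e.id, e.beforeImage);
            }
        }
    }

    public static void main(String[] args) {
        Map<Integer, String> userDb = new HashMap<>(Map.of(1, "alice", 2, "bob"));
        Map<Integer, String> esUserDb = new HashMap<>(Map.of(3, "carol"));

        AtModeSketch tx = new AtModeSketch();
        tx.delete(userDb, 1);   // branch in this service
        tx.delete(esUserDb, 3); // branch in the other service
        tx.globalRollback();    // a later failure rolls both branches back

        System.out.println(userDb + " " + esUserDb);
    }
}
```

Because phase one already commits locally, rows are released quickly. The cost is that rollback is a compensating write based on the stored image rather than a database-level rollback.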

II. Installation and Configuration

1. Downloading and installing nacos

I won't go over downloading and installing nacos here. Below is the download link for the nacos version I used.

nacos download: https://github.com/alibaba/nacos/releases/tag/1.4.2

2. Installing and configuring the seata server

2.1 Installation

- seata download: https://github.com/seata/seata/releases/tag/v1.4.2

- After downloading, extract the archive. The directory looks like this:

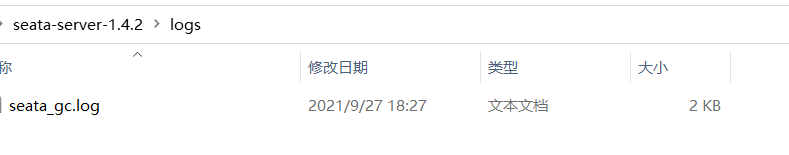

- That is all installation takes. Note: after extracting, create an empty seata_gc.log file in the logs directory.

With that, seata is installed.

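2.2 Configuration

To configure seata, open the README-zh.md file in the conf folder of the installation directory. It lists where the scripts for the three parts (server, client, and config-center) are stored.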

2.2.1 seata-server

- Open the storage path of the server scripts:

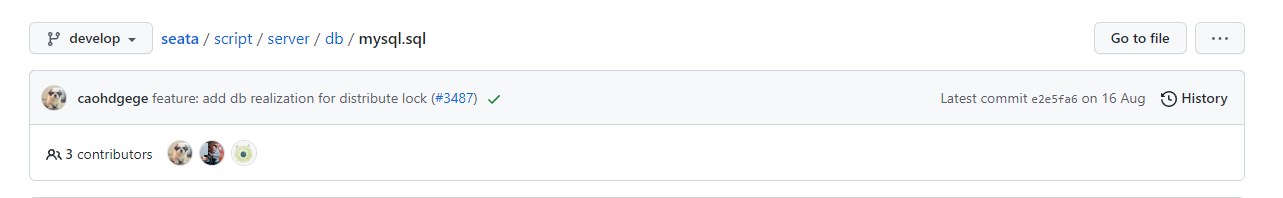

Since we use mysql, take the sql script from the mysql directory.

- Create a database named seata in your own mysql instance and execute the sql script from above in it.

2.2.2 config-center

2.2.2.1 Modifying file.conf

Open file.conf. It looks like this:

```
## transaction log store, only used in seata-server
store {
  ## store mode: file、db、redis
  mode = "file"
  ## rsa decryption public key
  publicKey = ""

  ## file store property
  file {
    ## store location dir
    dir = "sessionStore"
    # branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions
    maxBranchSessionSize = 16384
    # globe session size , if exceeded throws exceptions
    maxGlobalSessionSize = 512
    # file buffer size , if exceeded allocate new buffer
    fileWriteBufferCacheSize = 16384
    # when recover batch read size
    sessionReloadReadSize = 100
    # async, sync
    flushDiskMode = async
  }

  ## database store property
  db {
    ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
    datasource = "druid"
    ## mysql/oracle/postgresql/h2/oceanbase etc.
    dbType = "mysql"
    driverClassName = "com.mysql.jdbc.Driver"
    ## if using mysql to store the data, recommend add rewriteBatchedStatements=true in jdbc connection param
    url = "jdbc:mysql://127.0.0.1:3306/seata?rewriteBatchedStatements=true"
    user = "mysql"
    password = "mysql"
    minConn = 5
    maxConn = 100
    globalTable = "global_table"
    branchTable = "branch_table"
    lockTable = "lock_table"
    queryLimit = 100
    maxWait = 5000
  }

  ## redis store property
  redis {
    ## redis mode: single、sentinel
    mode = "single"
    ## single mode property
    single {
      host = "127.0.0.1"
      port = "6379"
    }
    ## sentinel mode property
    sentinel {
      masterName = ""
      ## such as "10.28.235.65:26379,10.28.235.65:26380,10.28.235.65:26381"
      sentinelHosts = ""
    }
    password = ""
    database = "0"
    minConn = 1
    maxConn = 10
    maxTotal = 100
    queryLimit = 100
  }
}
```

Change mode = "file" to mode = "db", then replace the settings in the db block with your own database connection details:

```
## database store property
db {
  ## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp)/HikariDataSource(hikari) etc.
  datasource = "druid"
  ## mysql/oracle/postgresql/h2/oceanbase etc.
  dbType = "mysql"
  driverClassName = "com.mysql.jdbc.Driver"
  ## if using mysql to store the data, recommend add rewriteBatchedStatements=true in jdbc connection param
  url = "jdbc:mysql://127.0.0.1:3306/seata?rewriteBatchedStatements=true"
  user = "root"
  password = "123456"
  minConn = 5
  maxConn = 100
  globalTable = "global_table"
  branchTable = "branch_table"
  lockTable = "lock_table"
  queryLimit = 100
  maxWait = 5000
}
```

2.2.2.2 Modifying registry.conf

Open registry.conf. It looks like this:

```
registry {
  # file 、nacos 、eureka、redis、zk、consul、etcd3、sofa
  type = "file"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = ""
    cluster = "default"
    username = ""
    password = ""
  }
  eureka {
    serviceUrl = "http://localhost:8761/eureka"
    application = "default"
    weight = "1"
  }
  redis {
    serverAddr = "localhost:6379"
    db = 0
    password = ""
    cluster = "default"
    timeout = 0
  }
  zk {
    cluster = "default"
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
  }
  consul {
    cluster = "default"
    serverAddr = "127.0.0.1:8500"
    aclToken = ""
  }
  etcd3 {
    cluster = "default"
    serverAddr = "http://localhost:2379"
  }
  sofa {
    serverAddr = "127.0.0.1:9603"
    application = "default"
    region = "DEFAULT_ZONE"
    datacenter = "DefaultDataCenter"
    cluster = "default"
    group = "SEATA_GROUP"
    addressWaitTime = "3000"
  }
  file {
    name = "file.conf"
  }
}

config {
  # file、nacos 、apollo、zk、consul、etcd3
  type = "file"

  nacos {
    serverAddr = "127.0.0.1:8848"
    namespace = ""
    group = "SEATA_GROUP"
    username = ""
    password = ""
    dataId = "seataServer.properties"
  }
  consul {
    serverAddr = "127.0.0.1:8500"
    aclToken = ""
  }
  apollo {
    appId = "seata-server"
    ## apolloConfigService will cover apolloMeta
    apolloMeta = "http://192.168.1.204:8801"
    apolloConfigService = "http://192.168.1.204:8080"
    namespace = "application"
    apolloAccesskeySecret = ""
    cluster = "seata"
  }
  zk {
    serverAddr = "127.0.0.1:2181"
    sessionTimeout = 6000
    connectTimeout = 2000
    username = ""
    password = ""
    nodePath = "/seata/seata.properties"
  }
  etcd3 {
    serverAddr = "http://localhost:2379"
  }
  file {
    name = "file.conf"
  }
}
```

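We use nacos as both the registry and the config center, which is also what seata recommends. So set type = "nacos" in both the registry and config sections and fill in your own nacos details. Below is my modified version, with the unused blocks left out: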

```
registry {
  # file 、nacos 、eureka、redis、zk、consul、etcd3、sofa
  type = "nacos"

  nacos {
    application = "seata-server"
    serverAddr = "127.0.0.1:8848"
    group = "SEATA_GROUP"
    namespace = "17603f04-caab-4789-8bd4-e3f74637d7e9"
    cluster = "default"
    username = "nacos"
    password = "nacos"
  }
}

config {
  # file、nacos 、apollo、zk、consul、etcd3
  type = "nacos"

  nacos {
    serverAddr = "127.0.0.1:8848"
    namespace = "17603f04-caab-4789-8bd4-e3f74637d7e9"
    group = "SEATA_GROUP"
    username = "nacos"
    password = "nacos"
    dataId = "seataServer.properties"
  }
}
```

2.2.2.3 config.txt

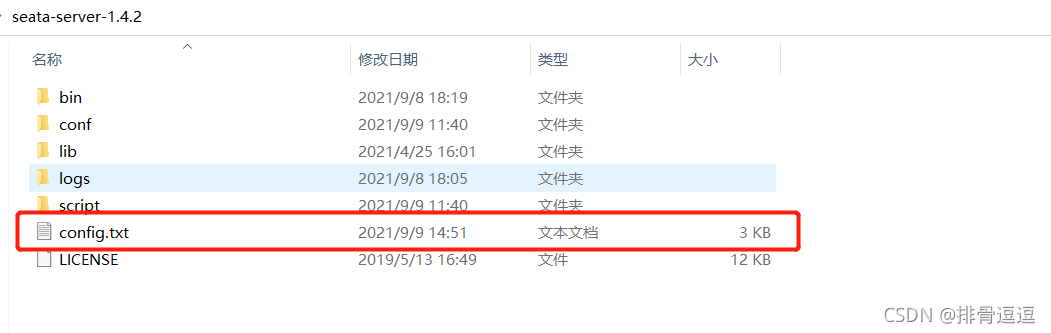

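The seata 1.4.2 release package does not include a config.txt file. Get it from the config-center script directory listed in README-zh.md, then place it in the location shown in the figure.

The only part of this file that needs changing is the store section (store.mode and the store.db.* connection settings, which you should replace with your own). Below is my config.txt after modifying the store section: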

```properties
transport.type=TCP
transport.server=NIO
transport.heartbeat=true
transport.enableClientBatchSendRequest=true
transport.threadFactory.bossThreadPrefix=NettyBoss
transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker
transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler
transport.threadFactory.shareBossWorker=false
transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector
transport.threadFactory.clientSelectorThreadSize=1
transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread
transport.threadFactory.bossThreadSize=1
transport.threadFactory.workerThreadSize=default
transport.shutdown.wait=3
service.vgroupMapping.my_test_tx_group=default
service.default.grouplist=127.0.0.1:8091
service.enableDegrade=false
service.disableGlobalTransaction=false
client.rm.asyncCommitBufferLimit=10000
client.rm.lock.retryInterval=10
client.rm.lock.retryTimes=30
client.rm.lock.retryPolicyBranchRollbackOnConflict=true
client.rm.reportRetryCount=5
client.rm.tableMetaCheckEnable=false
client.rm.tableMetaCheckerInterval=60000
client.rm.sqlParserType=druid
client.rm.reportSuccessEnable=false
client.rm.sagaBranchRegisterEnable=false
client.rm.tccActionInterceptorOrder=-2147482648
client.tm.commitRetryCount=5
client.tm.rollbackRetryCount=5
client.tm.defaultGlobalTransactionTimeout=60000
client.tm.degradeCheck=false
client.tm.degradeCheckAllowTimes=10
client.tm.degradeCheckPeriod=2000
client.tm.interceptorOrder=-2147482648
store.mode=db
store.file.dir=file_store/data
store.file.maxBranchSessionSize=16384
store.file.maxGlobalSessionSize=512
store.file.fileWriteBufferCacheSize=16384
store.file.flushDiskMode=async
store.file.sessionReloadReadSize=100
store.db.datasource=druid
store.db.dbType=mysql
store.db.driverClassName=com.mysql.jdbc.Driver
store.db.url=jdbc:mysql://sel-mysql:3306/seata?useUnicode=true&rewriteBatchedStatements=true
store.db.user=root
store.db.password=xcr101030
store.db.minConn=5
store.db.maxConn=30
store.db.globalTable=global_table
store.db.branchTable=branch_table
store.db.distributedLockTable=distributed_lock
store.db.queryLimit=100
store.db.lockTable=lock_table
store.db.maxWait=5000
server.recovery.committingRetryPeriod=1000
server.recovery.asynCommittingRetryPeriod=1000
server.recovery.rollbackingRetryPeriod=1000
server.recovery.timeoutRetryPeriod=1000
server.maxCommitRetryTimeout=-1
server.maxRollbackRetryTimeout=-1
server.rollbackRetryTimeoutUnlockEnable=false
server.distributedLockExpireTime=10000
client.undo.dataValidation=true
client.undo.logSerialization=jackson
client.undo.onlyCareUpdateColumns=true
server.undo.logSaveDays=7
server.undo.logDeletePeriod=86400000
client.undo.logTable=undo_log
client.undo.compress.enable=true
client.undo.compress.type=zip
client.undo.compress.threshold=64k
log.exceptionRate=100
transport.serialization=seata
transport.compressor=none
metrics.enabled=false
metrics.registryType=compact
metrics.exporterList=prometheus
metrics.exporterPrometheusPort=9898
```

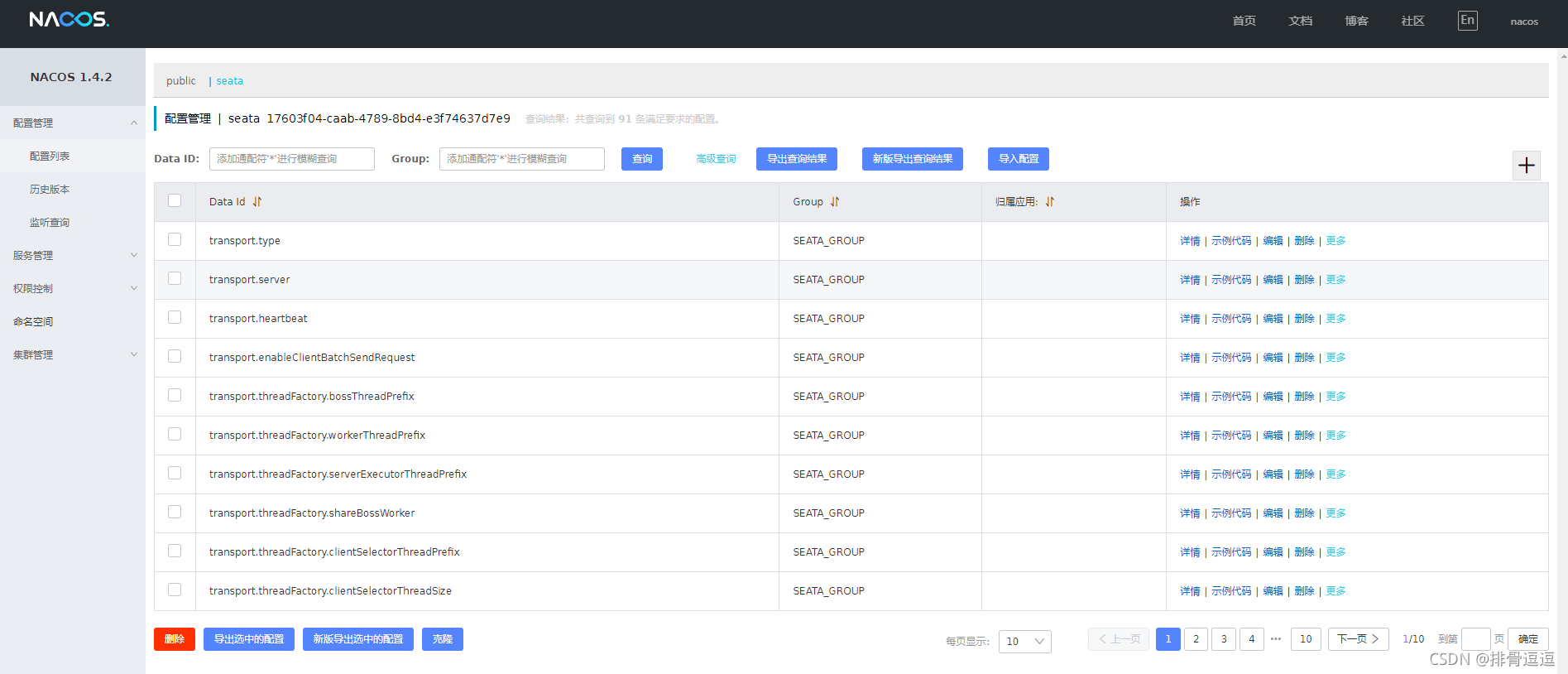
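config.txt also sets client.rm.lock.retryInterval=10 and client.rm.lock.retryTimes=30. In AT mode, a branch must hold a global lock on the rows it modifies before committing locally. The seata server keeps those locks, in lock_table when store.mode=db. If another global transaction already holds a row's lock, the client retries according to these two values. The toy below models only that retry loop, with an in-memory map. The class name and the "table:pk" row-key format are hypothetical, and this is not how Seata implements locking internally.

```java
import java.util.HashMap;
import java.util.Map;

// Toy global lock table: an in-memory stand-in for lock_table on the seata server.
// Hypothetical names; NOT Seata's implementation.
class GlobalLockSketch {

    // Values copied from config.txt above.
    static final long RETRY_INTERVAL_MS = 10; // client.rm.lock.retryInterval
    static final int RETRY_TIMES = 30;        // client.rm.lock.retryTimes

    // Row key (hypothetical "table:pk" format) -> XID of the global transaction holding the lock.
    private final Map<String, String> locks = new HashMap<>();

    // Succeeds if the row is free or already locked by the same global transaction.
    synchronized boolean tryLock(String rowKey, String xid) {
        String owner = locks.putIfAbsent(rowKey, xid);
        return owner == null || owner.equals(xid);
    }

    // Releases every lock of a global transaction once it has committed or rolled back.
    synchronized void releaseAll(String xid) {
        locks.values().removeIf(xid::equals);
    }

    // Branch side: at most RETRY_TIMES attempts, sleeping RETRY_INTERVAL_MS between them.
    boolean lockWithRetry(String rowKey, String xid) {
        for (int attempt = 1; attempt <= RETRY_TIMES; attempt++) {
            if (tryLock(rowKey, xid)) {
                return true;
            }
            try {
                Thread.sleep(RETRY_INTERVAL_MS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        // Lock conflict: the caller has to give up and roll back its local transaction.
        return false;
    }

    public static void main(String[] args) {
        GlobalLockSketch lockTable = new GlobalLockSketch();
        lockTable.tryLock("user:1", "xid-A");
        // xid-B keeps retrying for roughly 30 x 10 ms, then gives up
        System.out.println(lockTable.lockWithRetry("user:1", "xid-B")); // prints false
    }
}
```

With the values above, a conflicting branch waits roughly 300 ms in total before failing. Raising either value trades longer waits for fewer lock-conflict failures.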

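2.2.2.4 Pushing the configuration to nacos

Download nacos-config.sh into the conf folder. Right-click an empty area in that folder, open Git Bash, and run the following command:

```shell
sh nacos-config.sh -h localhost -p 8848 -g SEATA_GROUP -t 17603f04-caab-4789-8bd4-e3f74637d7e9 -u nacos -w nacos
```

Options:

- -h: the nacos host.
- -p: the nacos port.
- -g: the group name.
- -t: the namespace id. Use the same namespace id you wrote into registry.conf above, otherwise the server will not find the pushed configuration.
- -u and -w: the nacos username and password.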

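Output like the figure below means the seata configuration was pushed successfully.

Open nacos and you will see that the seata configuration entries have been added.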
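Under the hood, the script publishes every key=value line of config.txt to nacos as its own dataId. That is why nacos shows one entry per key. The Java sketch below approximates that flow using the nacos config Open API endpoint POST /nacos/v1/cs/configs with the dataId, group, content, and tenant parameters. Several parts are assumptions: the class name is hypothetical, nacos runs on localhost:8848 with authentication disabled (so the -u/-w credentials are not sent), and blank and # comment lines are skipped. It is not a line-by-line port of nacos-config.sh.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical re-implementation of the idea behind nacos-config.sh.
class NacosConfigPushSketch {

    // Turns config.txt lines into form bodies for the nacos "publish config" API, one dataId per key.
    static List<String> toFormBodies(List<String> lines, String group, String tenant) {
        List<String> bodies = new ArrayList<>();
        for (String line : lines) {
            String trimmed = line.trim();
            int eq = trimmed.indexOf('=');
            if (trimmed.isEmpty() || trimmed.startsWith("#") || eq <= 0) {
                continue; // skip blank, comment and malformed lines (assumption)
            }
            String key = trimmed.substring(0, eq);    // text before the first '='
            String value = trimmed.substring(eq + 1); // the rest, which may itself contain '='
            bodies.add("dataId=" + enc(key) + "&group=" + enc(group)
                    + "&content=" + enc(value) + "&tenant=" + enc(tenant));
        }
        return bodies;
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        List<String> lines = Files.readAllLines(Path.of("config.txt"));
        HttpClient client = HttpClient.newHttpClient();
        URI endpoint = URI.create("http://localhost:8848/nacos/v1/cs/configs");
        for (String body : toFormBodies(lines, "SEATA_GROUP", "17603f04-caab-4789-8bd4-e3f74637d7e9")) {
            HttpRequest request = HttpRequest.newBuilder(endpoint)
                    .header("Content-Type", "application/x-www-form-urlencoded")
                    .POST(HttpRequest.BodyPublishers.ofString(body))
                    .build();
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            System.out.println(response.statusCode() + " " + body);
        }
    }
}
```

Values are URL-encoded because entries such as store.db.url contain characters like ?, & and = that would otherwise break the form body.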

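2.3 Starting seata

At this point the seata configuration is done. You may wonder whether the client still needs configuring. Don't worry: we will add the client configuration later, when we need it.

Double-click bin/seata-server.bat (on Linux or macOS, run bin/seata-server.sh) to start the seata server. Then check the nacos service registry to confirm that the seata service has registered with nacos.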
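Besides looking at the nacos console, you can query the nacos naming Open API directly. The sketch below builds a GET request for /nacos/v1/ns/instance/list from the service name, group, and namespace in registry.conf. It assumes nacos runs on 127.0.0.1:8848 with authentication disabled; the class name is hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: asks nacos which seata-server instances are registered.
class SeataRegistrationCheck {

    // Builds the nacos "list instances" URL for one service in one group and namespace.
    static URI instanceListUri(String nacosAddr, String serviceName, String group, String namespaceId) {
        String query = "serviceName=" + enc(serviceName)
                + "&groupName=" + enc(group)
                + "&namespaceId=" + enc(namespaceId);
        return URI.create("http://" + nacosAddr + "/nacos/v1/ns/instance/list?" + query);
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // application, group and namespace taken from the modified registry.conf
        URI uri = instanceListUri("127.0.0.1:8848", "seata-server", "SEATA_GROUP",
                "17603f04-caab-4789-8bd4-e3f74637d7e9");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
        // A registered server should appear in the "hosts" array, typically on port 8091
        System.out.println(response.body());
    }
}
```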

With that, seata is installed and configured.

3. Project integration

- pom dependencies

```xml
<!-- seata dependencies -->
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <version>2.2.0.RELEASE</version>
    <exclusions>
        <exclusion>
            <groupId>io.seata</groupId>
            <artifactId>seata-spring-boot-starter</artifactId>
        </exclusion>
        <exclusion>
            <groupId>io.seata</groupId>
            <artifactId>seata-all</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-spring-boot-starter</artifactId>
    <version>1.4.0</version>
</dependency>
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-all</artifactId>
    <version>1.4.0</version>
</dependency>
```

- Configuration file

```yaml
# seata configuration
seata:
  enabled: true
  enable-auto-data-source-proxy: true
  tx-service-group: my_test_tx_group
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: 127.0.0.1:8848
      namespace: 17603f04-caab-4789-8bd4-e3f74637d7e9
      group: SEATA_GROUP
      username: nacos
      password: nacos
  config:
    type: nacos
    nacos:
      server-addr: 127.0.0.1:8848
      group: SEATA_GROUP
      username: nacos
      password: nacos
      namespace: 17603f04-caab-4789-8bd4-e3f74637d7e9
  service:
    vgroup-mapping:
      my_test_tx_group: default
    disable-global-transaction: false
  client:
    rm:
      report-success-enable: false
```
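The tx-service-group value (my_test_tx_group) is only a logical name. The client looks it up under vgroup-mapping (service.vgroupMapping.my_test_tx_group=default in config.txt) to get the cluster name default. That cluster must match cluster = "default", under which seata-server registered in registry.conf. With the file registry, the address would come from service.default.grouplist instead of nacos. The sketch below shows this lookup with a plain map holding two entries from config.txt; the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical illustration of the tx-service-group -> cluster -> address lookup.
class TxGroupResolver {

    // service.vgroupMapping.<txServiceGroup> holds the cluster name.
    static Optional<String> clusterFor(Map<String, String> config, String txServiceGroup) {
        return Optional.ofNullable(config.get("service.vgroupMapping." + txServiceGroup));
    }

    // File registry only: service.<cluster>.grouplist holds the server address.
    // With the nacos registry, the client queries nacos for instances in that cluster instead.
    static Optional<String> fileRegistryAddress(Map<String, String> config, String txServiceGroup) {
        return clusterFor(config, txServiceGroup)
                .map(cluster -> config.get("service." + cluster + ".grouplist"));
    }

    public static void main(String[] args) {
        Map<String, String> config = Map.of(
                "service.vgroupMapping.my_test_tx_group", "default",
                "service.default.grouplist", "127.0.0.1:8091");

        System.out.println(clusterFor(config, "my_test_tx_group").orElse("<no mapping>"));
        // A typo in tx-service-group leaves the client without any server to talk to
        System.out.println(clusterFor(config, "my_tset_tx_group").orElse("<no mapping>"));
    }
}
```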

- Business code

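```java
@Override
@GlobalTransactional(rollbackFor = Exception.class)
public Integer killUser(Integer num) {
    log.info("Total users to kill: {}", num);
    // Divide by 2.0: num / 2 is integer division and would truncate before Math.ceil runs
    int ceil = (int) Math.ceil(num / 2.0);
    IPage<User> page = new Page<>(1, ceil);
    page = userMapper.selectPage(page, new QueryWrapper<>());
    List<Integer> ids = page.getRecords().stream().map(User::getId).collect(Collectors.toList());
    // Branch 1: delete users in this service's own database
    int currentKillNum = userMapper.deleteBatchIds(ids);
    log.info("Killed {} users locally", currentKillNum);
    // Branch 2: the remote service deletes users in its database within the same global transaction
    Integer securityKillNum = esUserClient.killUser(ceil);
    log.info("Another service killed {} users", securityKillNum);
    // Simulated failure so that seata rolls back both branches;
    // the if (1 > 0) wrapper keeps the return statement below reachable for the compiler
    if (1 > 0) {
        log.error("Wrong number of users killed, rolling back to start over");
        throw new IllegalStateException("simulated failure to trigger a global rollback");
    }
    return currentKillNum + securityKillNum;
}
```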
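Conceptually, the @GlobalTransactional advice wraps killUser in begin, commit, and rollback. If the method returns normally, the global transaction commits. If it throws, as the simulated failure does, every branch is rolled back, including the one in the remote service. The toy template below mimics only that control flow with a recorded event list. It is not Seata's API, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Toy stand-in for the begin/commit/rollback flow around a @GlobalTransactional method.
class GlobalTxTemplateSketch {

    // Records the phases so the flow is easy to inspect.
    final List<String> events = new ArrayList<>();

    <T> T execute(Supplier<T> business) {
        // A real TM would obtain an XID from the TC here and propagate it to downstream calls.
        events.add("begin");
        try {
            T result = business.get();
            events.add("commit");   // every branch keeps its changes
            return result;
        } catch (RuntimeException e) {
            // rollbackFor = Exception.class also covers checked exceptions in the real annotation;
            // a Supplier can only throw unchecked ones, so the toy catches RuntimeException.
            events.add("rollback"); // every branch restores its before images
            throw e;
        }
    }

    public static void main(String[] args) {
        GlobalTxTemplateSketch tm = new GlobalTxTemplateSketch();
        tm.execute(() -> 4);
        try {
            tm.execute(() -> {
                throw new IllegalStateException("wrong number killed");
            });
        } catch (IllegalStateException expected) {
            System.out.println("rolled back: " + expected.getMessage());
        }
        System.out.println(tm.events); // prints [begin, commit, begin, rollback]
    }
}
```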

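Notes:

1. Unlike the @Transactional annotation we used before, this code uses the @GlobalTransactional annotation.

2. Every sub-service that takes part in the transaction needs an undo_log table in its database, plus the seata dependency and configuration.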
