PyTorch BERT + BiLSTM Text Classification


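Preface

Yesterday I tried to run BERT + BiLSTM classification by following the article "自然语言处理(NLP)Bert与Lstm结合" (Natural Language Processing (NLP): Combining BERT and LSTM), but could not get it to run. I modified the code myself, and once it ran successfully I wrote it up in this post.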

I. Environment

- python==3.7

- pandas==1.3.0

- numpy==1.20.3

- scikit-learn==0.24.2

- torch==1.9.0

- transformers==4.8.2
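Since the code was only tested with the versions listed above, it can help to compare them against what is installed before running anything. The following is a minimal sketch; the function name `check_versions` is made up for illustration, and on Python 3.7 it assumes the `importlib_metadata` backport is installed.

```python
try:
    from importlib import metadata  # standard library on Python 3.8+
except ImportError:
    import importlib_metadata as metadata  # backport package, needed on Python 3.7

# Versions listed in this post
EXPECTED = {
    "pandas": "1.3.0",
    "numpy": "1.20.3",
    "scikit-learn": "0.24.2",
    "torch": "1.9.0",
    "transformers": "4.8.2",
}


def check_versions(expected):
    """Return {package: (expected, installed)} for packages that differ or are missing."""
    mismatches = {}
    for name, want in expected.items():
        try:
            have = metadata.version(name)
        except metadata.PackageNotFoundError:
            have = None  # package is not installed at all
        if have != want:
            mismatches[name] = (want, have)
    return mismatches


if __name__ == "__main__":
    # Note: a CUDA build of torch may report e.g. "1.9.0+cu111" and show up here
    for name, (want, have) in check_versions(EXPECTED).items():
        print(f"{name}: expected {want}, found {have}")
```

A mismatch does not necessarily mean the code will fail; it only marks where the local setup departs from the one described here.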

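II. Data

1. BERT download: bert-base-chinese

Notes:

- Only three files need to be downloaded: pytorch_model.bin, vocab.txt, and config.json.

- If the downloaded pytorch_model.bin is saved under a file name that is a random string of characters, simply rename it to pytorch_model.bin.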
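To confirm that the three files are in place before loading the model, a small check like the one below can be used. This is only a sketch: the helper name `missing_bert_files` and the directory name in the usage line are assumptions, not part of the original code.

```python
from pathlib import Path

# The three files named in the notes above
REQUIRED_FILES = ("pytorch_model.bin", "vocab.txt", "config.json")


def missing_bert_files(bert_dir):
    """Return the required file names that are not present in bert_dir."""
    root = Path(bert_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    # "bert-base-chinese" is a hypothetical local directory name
    print(missing_bert_files("bert-base-chinese"))
```

An empty list means all three files were found; otherwise the list names whatever still has to be downloaded or renamed.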

2. Link to the full code and dataset

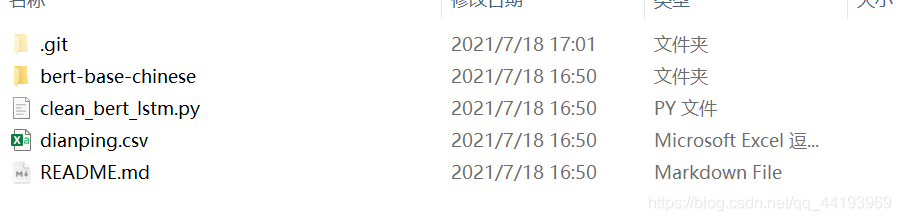

3. Directory structure

III. Model Structure

The code is as follows:

    import torch
    import torch.nn as nn
    from transformers import BertModel


    class bert_lstm(nn.Module):
        def __init__(self, bertpath, hidden_dim, output_size, n_layers, bidirectional=True, drop_prob=0.5):
            super(bert_lstm, self).__init__()

            self.output_size = output_size
            self.n_layers = n_layers
            self.hidden_dim = hidden_dim
            self.bidirectional = bidirectional

            # BERT (key point): the BERT model must be embedded inside the custom model
            self.bert = BertModel.from_pretrained(bertpath)
            for param in self.bert.parameters():
                param.requires_grad = True  # BERT is fine-tuned together with the LSTM

            # LSTM layers; the input size 768 is the size of the BERT output vectors
            self.lstm = nn.LSTM(768, hidden_dim, n_layers, batch_first=True, bidirectional=bidirectional)

            # dropout layer
            self.dropout = nn.Dropout(drop_prob)

            # linear layer; no sigmoid here, CrossEntropyLoss works on raw logits
            if bidirectional:
                self.fc = nn.Linear(hidden_dim * 2, output_size)
            else:
                self.fc = nn.Linear(hidden_dim, output_size)

        def forward(self, x, hidden):
            # BERT per-token vectors, shape [batch, seq_len, 768]
            x = self.bert(x)[0]

            lstm_out, (hidden_last, cn_last) = self.lstm(x, hidden)
            # shapes printed by the author:
            # lstm_out [32, 100, 768], hidden_last [4, 32, 384], cn_last [4, 32, 384]

            # the bidirectional case needs separate handling
            if self.bidirectional:
                # last layer, forward direction, final time step: [32, 384]
                hidden_last_L = hidden_last[-2]
                # last layer, backward direction, final time step: [32, 384]
                hidden_last_R = hidden_last[-1]
                # concatenate both directions: [32, 768]
                hidden_last_out = torch.cat([hidden_last_L, hidden_last_R], dim=-1)
            else:
                hidden_last_out = hidden_last[-1]  # [32, 384]

            # dropout and fully-connected layer
            out = self.dropout(hidden_last_out)
            out = self.fc(out)
            return out

        def init_hidden(self, batch_size):
            # new_zeros keeps the dtype and device of the model parameters, so the
            # state is created on the GPU whenever the model has been moved there
            weight = next(self.parameters()).data
            number = 2 if self.bidirectional else 1
            shape = (self.n_layers * number, batch_size, self.hidden_dim)
            return (weight.new_zeros(shape), weight.new_zeros(shape))

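1. In effect, the embedding layer is simply replaced by BERT, so the LSTM's input_size equals BERT's output size. That is why the first argument to nn.LSTM is 768.

2. Because a BiLSTM is used, the final hidden state of the last layer's forward direction and that of the last layer's backward direction must be concatenated, and the input dimension of the Linear layer must match the concatenated size (hidden_dim*2).

3. Because this is binary classification, output_size is 2.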
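The indexing used in the model (`hidden_last[-2]` and `hidden_last[-1]`) relies on how PyTorch orders the final hidden states of a multi-layer bidirectional LSTM. The sketch below illustrates this with a standalone `nn.LSTM` on random input; the toy sizes are chosen only for illustration and a random tensor stands in for the BERT output.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy sizes for illustration only (the post uses 768-dimensional BERT vectors)
batch_size, seq_len, input_size, hidden_dim, n_layers = 3, 5, 8, 4, 2

lstm = nn.LSTM(input_size, hidden_dim, n_layers, batch_first=True, bidirectional=True)
x = torch.randn(batch_size, seq_len, input_size)  # stands in for the BERT output

lstm_out, (h_n, c_n) = lstm(x)  # initial states default to zeros

# h_n is stacked layer by layer, forward then backward within each layer,
# so the last two entries belong to the last layer.
forward_last = h_n[-2]   # forward direction: state after reading the final token
backward_last = h_n[-1]  # backward direction: state after reading the first token
features = torch.cat([forward_last, backward_last], dim=-1)

print(h_n.shape)       # torch.Size([4, 3, 4])  -> [n_layers * 2, batch, hidden_dim]
print(features.shape)  # torch.Size([3, 8])     -> [batch, hidden_dim * 2]

# Cross-check against the per-step outputs of the last layer
same_fwd = torch.allclose(forward_last, lstm_out[:, -1, :hidden_dim])
same_bwd = torch.allclose(backward_last, lstm_out[:, 0, hidden_dim:])
print(same_fwd, same_bwd)
```

Note that the backward direction finishes at the first token, which is why its final state matches `lstm_out[:, 0, hidden_dim:]` rather than the last time step of `lstm_out`.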

IV. Training

The code is as follows:

    import numpy as np
    import torch
    import torch.nn as nn


    def train_model(config, data_train):
        net = bert_lstm(config.bert_path,
                        config.hidden_dim,
                        config.output_size,
                        config.n_layers,
                        config.bidirectional)
        criterion = nn.CrossEntropyLoss()
        optimizer = torch.optim.Adam(net.parameters(), lr=config.lr)
        if config.use_cuda:
            net.cuda()
        net.train()
        for e in range(config.epochs):
            # initialize hidden state
            h = net.init_hidden(config.batch_size)
            counter = 0
            # batch loop
            for inputs, labels in data_train:
                counter += 1
                if config.use_cuda:
                    inputs, labels = inputs.cuda(), labels.cuda()
                # detach the hidden state from any earlier computation graph
                h = tuple([each.data for each in h])
                net.zero_grad()
                output = net(inputs, h)
                loss = criterion(output.squeeze(), labels.long())
                loss.backward()
                optimizer.step()

                # loss stats
                if counter % config.print_every == 0:
                    net.eval()
                    with torch.no_grad():
                        val_h = net.init_hidden(config.batch_size)
                        val_losses = []
                        # valid_loader is not a parameter of this function; it is expected
                        # to be a DataLoader defined at module level in the full code
                        for inputs, labels in valid_loader:
                            val_h = tuple([each.data for each in val_h])
                            if config.use_cuda:
                                inputs, labels = inputs.cuda(), labels.cuda()
                            output = net(inputs, val_h)
                            val_loss = criterion(output.squeeze(), labels.long())
                            val_losses.append(val_loss.item())
                    net.train()
                    print("Epoch: {}/{}, ".format(e + 1, config.epochs),
                          "Step: {}, ".format(counter),
                          "Loss: {:.6f}, ".format(loss.item()),
                          "Val Loss: {:.6f}".format(np.mean(val_losses)))
        torch.save(net.state_dict(), config.save_path)

Epoch: 1/10, Step: 10, Loss: 0.684742, Val Loss: 0.688432

Epoch: 1/10, Step: 20, Loss: 0.664885, Val Loss: 0.671069

Epoch: 1/10, Step: 30, Loss: 0.613591, Val Loss: 0.622387

Epoch: 1/10, Step: 40, Loss: 0.571192, Val Loss: 0.562263

Epoch: 2/10, Step: 10, Loss: 0.283182, Val Loss: 0.421199

Epoch: 2/10, Step: 20, Loss: 0.385077, Val Loss: 0.361812

Epoch: 2/10, Step: 30, Loss: 0.348373, Val Loss: 0.318632

Epoch: 2/10, Step: 40, Loss: 0.597140, Val Loss: 0.314847

Epoch: 3/10, Step: 10, Loss: 0.194882, Val Loss: 0.273278

Epoch: 3/10, Step: 20, Loss: 0.123732, Val Loss: 0.343172

Epoch: 3/10, Step: 30, Loss: 0.115506, Val Loss: 0.313013

Epoch: 3/10, Step: 40, Loss: 0.170411, Val Loss: 0.282829

Epoch: 4/10, Step: 10, Loss: 0.150081, Val Loss: 0.263128

Epoch: 4/10, Step: 20, Loss: 0.353257, Val Loss: 0.326907

Epoch: 4/10, Step: 30, Loss: 0.037445, Val Loss: 0.342072

Epoch: 4/10, Step: 40, Loss: 0.096485, Val Loss: 0.331090

Epoch: 5/10, Step: 10, Loss: 0.062844, Val Loss: 0.321690

Epoch: 5/10, Step: 20, Loss: 0.031070, Val Loss: 0.316013

Epoch: 5/10, Step: 30, Loss: 0.058623, Val Loss: 0.318129

Epoch: 5/10, Step: 40, Loss: 0.046849, Val Loss: 0.335890

Epoch: 6/10, Step: 10, Loss: 0.147990, Val Loss: 0.446133

Epoch: 6/10, Step: 20, Loss: 0.324785, Val Loss: 0.448294

Epoch: 6/10, Step: 30, Loss: 0.034576, Val Loss: 0.390784

Epoch: 6/10, Step: 40, Loss: 0.018516, Val Loss: 0.446345

Epoch: 7/10, Step: 10, Loss: 0.041855, Val Loss: 0.340792

Epoch: 7/10, Step: 20, Loss: 0.140505, Val Loss: 0.433969

Epoch: 7/10, Step: 30, Loss: 0.015936, Val Loss: 0.537444

Epoch: 7/10, Step: 40, Loss: 0.061285, Val Loss: 0.688956

Epoch: 8/10, Step: 10, Loss: 0.027597, Val Loss: 0.383043

Epoch: 8/10, Step: 20, Loss: 0.020746, Val Loss: 0.344770

Epoch: 8/10, Step: 30, Loss: 0.066156, Val Loss: 0.418557

Epoch: 8/10, Step: 40, Loss: 0.011359, Val Loss: 0.434401

Epoch: 9/10, Step: 10, Loss: 0.014871, Val Loss: 0.450156

Epoch: 9/10, Step: 20, Loss: 0.011917, Val Loss: 0.455503

Epoch: 9/10, Step: 30, Loss: 0.139435, Val Loss: 0.496916

Epoch: 9/10, Step: 40, Loss: 0.015576, Val Loss: 0.499172

Epoch: 10/10, Step: 10, Loss: 0.006573, Val Loss: 0.585796

Epoch: 10/10, Step: 20, Loss: 0.144999, Val Loss: 0.514712

Epoch: 10/10, Step: 30, Loss: 0.037525, Val Loss: 0.445692

Epoch: 10/10, Step: 40, Loss: 0.010959, Val Loss: 0.382745

My GPU is a Quadro RTX 6000; training finished in just a few minutes, which is fairly fast.
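In the log above, the validation loss is lowest (0.263128) at epoch 4, step 10, and is mostly higher afterwards while the training loss keeps falling, which may be a sign of overfitting. The training code saves only the final weights, so it can be useful to see which step had the best validation loss. The sketch below parses log lines in the printed format; the function names are made up for illustration.

```python
import re

# Matches lines such as:
# "Epoch: 4/10, Step: 10, Loss: 0.150081, Val Loss: 0.263128"
LOG_LINE = re.compile(
    r"Epoch: (\d+)/\d+,\s+Step: (\d+),\s+Loss: ([\d.]+),\s+Val Loss: ([\d.]+)"
)


def parse_log(text):
    """Return a list of (epoch, step, train_loss, val_loss) tuples."""
    rows = []
    for match in LOG_LINE.finditer(text):
        epoch, step, loss, val_loss = match.groups()
        rows.append((int(epoch), int(step), float(loss), float(val_loss)))
    return rows


def best_checkpoint(rows):
    """Return the row with the lowest validation loss."""
    return min(rows, key=lambda row: row[3])


# A few lines copied from the log above
sample = """
Epoch: 3/10, Step: 40, Loss: 0.170411, Val Loss: 0.282829
Epoch: 4/10, Step: 10, Loss: 0.150081, Val Loss: 0.263128
Epoch: 4/10, Step: 20, Loss: 0.353257, Val Loss: 0.326907
Epoch: 10/10, Step: 40, Loss: 0.010959, Val Loss: 0.382745
"""
print(best_checkpoint(parse_log(sample)))  # (4, 10, 0.150081, 0.263128)
```

One possible follow-up, not part of the original code, would be to call `torch.save` inside the validation branch whenever the mean validation loss improves, so that the saved weights correspond to the best step rather than the last one.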

V. Testing and Prediction

    import numpy as np
    import torch
    import torch.nn as nn
    from transformers import BertTokenizer


    def test_model(config, data_test):
        net = bert_lstm(config.bert_path,
                        config.hidden_dim,
                        config.output_size,
                        config.n_layers,
                        config.bidirectional)
        net.load_state_dict(torch.load(config.save_path))
        if config.use_cuda:
            net.cuda()
        criterion = nn.CrossEntropyLoss()
        test_losses = []  # track loss
        num_correct = 0
        # init hidden state
        h = net.init_hidden(config.batch_size)
        net.eval()
        # iterate over test data; gradients are not needed for evaluation
        with torch.no_grad():
            for inputs, labels in data_test:
                h = tuple([each.data for each in h])
                if config.use_cuda:
                    inputs, labels = inputs.cuda(), labels.cuda()
                output = net(inputs, h)
                test_loss = criterion(output.squeeze(), labels.long())
                test_losses.append(test_loss.item())
                output = torch.nn.Softmax(dim=1)(output)
                pred = torch.max(output, 1)[1]
                # compare predictions to true label
                correct_tensor = pred.eq(labels.long().view_as(pred))
                correct = np.squeeze(correct_tensor.cpu().numpy())
                num_correct += np.sum(correct)
        print("Test loss: {:.3f}".format(np.mean(test_losses)))
        # accuracy over all test data
        test_acc = num_correct / len(data_test.dataset)
        print("Test accuracy: {:.3f}".format(test_acc))


    def predict(test_comment_list, config):
        net = bert_lstm(config.bert_path,
                        config.hidden_dim,
                        config.output_size,
                        config.n_layers,
                        config.bidirectional)
        net.load_state_dict(torch.load(config.save_path))
        if config.use_cuda:
            net.cuda()
        # pretreatment() is defined in the full code; it strips punctuation
        result_comments = pretreatment(test_comment_list)
        # convert the text to token ids
        tokenizer = BertTokenizer.from_pretrained(config.bert_path)
        result_comments_id = tokenizer(result_comments,
                                       padding=True,
                                       truncation=True,
                                       max_length=120,
                                       return_tensors='pt')
        inputs = result_comments_id['input_ids']
        batch_size = inputs.size(0)
        # initialize hidden state
        h = net.init_hidden(batch_size)
        if config.use_cuda:
            inputs = inputs.cuda()
        net.eval()
        with torch.no_grad():
            # get the output from the model
            output = net(inputs, h)
            output = torch.nn.Softmax(dim=1)(output)
            pred = torch.max(output, 1)[1]
            # the .item() calls below assume test_comment_list holds a single comment
            print('预测概率为: {:.6f}'.format(torch.max(output, 1)[0].item()))  # predicted probability
            if pred.item() == 1:
                print("预测结果为:正向")  # prediction: positive
            else:
                print("预测结果为:负向")  # prediction: negative

Test loss: 0.390

Test accuracy: 0.837

Prediction example:

test_comments = ['这个菜真不错']  ("This dish is really good")

预测概率为: 0.981275  (predicted probability: 0.981275)

预测结果为:正向  (prediction: positive)
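The `predict` function above calls `.item()`, which only works when a single comment is passed in. As a rough sketch of how the same softmax and argmax step could be applied to a whole batch, the helper below (a hypothetical `decode_predictions`, not part of the original code) converts raw logits into label and probability pairs. The logits are made-up numbers standing in for the output of `net(inputs, h)`.

```python
import torch

# Label mapping as used in predict(): 1 = positive (正向), 0 = negative (负向)
LABELS = {0: "负向", 1: "正向"}


def decode_predictions(logits):
    """Turn raw logits of shape [batch, 2] into (label, probability) pairs."""
    probs = torch.softmax(logits, dim=1)        # each row sums to 1
    confidence, pred = torch.max(probs, dim=1)  # best probability and its class index
    return [(LABELS[p], c) for p, c in zip(pred.tolist(), confidence.tolist())]


# Made-up logits for two comments
logits = torch.tensor([[-2.0, 2.0], [1.5, -0.5]])
for label, prob in decode_predictions(logits):
    print(label, "{:.6f}".format(prob))
```

Softmax does not change which class has the largest score, so it is needed only for reporting a probability, not for choosing the label.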

Link to the full code and dataset: Pytorch Bert+BiLstm二分类 (PyTorch BERT + BiLSTM binary classification)
