动手学强化学习第七章(DQN算法)

文章目录DQN算法1.理论部分1.1 简介1.2 经验回放1.3 目标网络2.实践部分DQN算法1.理论部分1.1 简介简而言之,DQN就是解决Q-learning只能应用于离散obs,离散act的状况,当obs连续时再用一个Q表格来记录Q值不再可能,于是引入神经网络来近似表示从连续输入到离散输出之间的函数关系。Q-learning中的更新公式是:Q(s,a)←Q(s,a)+α[r+γmaxa′

DQN算法

文章转载自《动手学强化学习》https://hrl.boyuai.com/chapter/intro

1.理论部分

1.1 简介

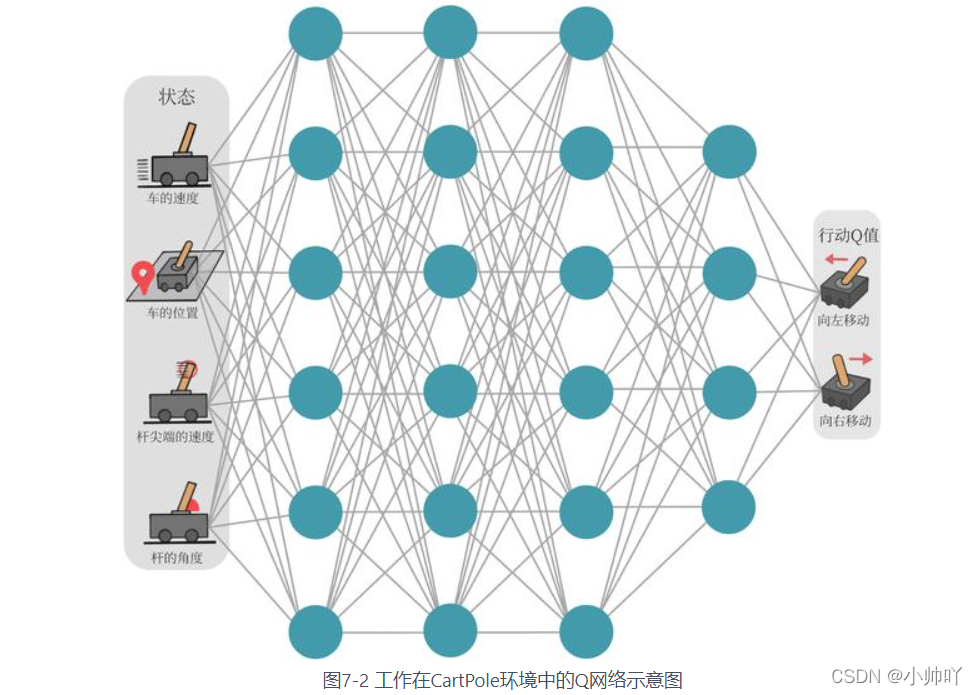

简而言之,DQN就是解决Q-learning只能应用于离散obs,离散act的状况,当obs连续时再用一个Q表格来记录Q值不再可能,于是引入神经网络来近似表示从连续输入到离散输出之间的函数关系。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-dmmO8ix0-1651054585871)(C:\Users\admin\AppData\Roaming\Typora\typora-user-images\image-20220427180138925.png)]](https://i-blog.csdnimg.cn/blog_migrate/98d06d773dad1270638aa916788223b1.png)

Q-learning中的更新公式是:

Q

(

s

,

a

)

←

Q

(

s

,

a

)

+

α

[

r

+

γ

max

a

′

∈

A

Q

(

s

′

,

a

′

)

−

Q

(

s

,

a

)

]

Q(s, a) \leftarrow Q(s, a)+\alpha\left[r+\gamma \max _{a^{\prime} \in \mathcal{A}} Q\left(s^{\prime}, a^{\prime}\right)-Q(s, a)\right]

Q(s,a)←Q(s,a)+α[r+γa′∈AmaxQ(s′,a′)−Q(s,a)]

在这个公式中更新的目标是使Q(s, a)更加接近包含部分真实信息的:

r

+

γ

max

a

′

∈

A

Q

(

s

′

,

a

′

)

r+\gamma \max _{a^{\prime} \in \mathcal{A}} Q\left(s^{\prime}, a^{\prime}\right)

r+γa′∈AmaxQ(s′,a′)

于是,借鉴监督学习部分损失函数的定义:

ω

∗

=

arg

min

ω

1

2

N

∑

i

=

1

N

[

Q

ω

(

s

i

,

a

i

)

−

(

r

i

+

γ

max

a

′

Q

ω

(

s

i

′

,

a

′

)

)

]

2

\omega^{*}=\arg \min _{\omega} \frac{1}{2 N} \sum_{i=1}^{N}\left[Q_{\omega}\left(s_{i}, a_{i}\right)-\left(r_{i}+\gamma \max _{a^{\prime}} Q_{\omega}\left(s_{i}^{\prime}, a^{\prime}\right)\right)\right]^{2}

ω∗=argωmin2N1i=1∑N[Qω(si,ai)−(ri+γa′maxQω(si′,a′))]2

至此,我们就可以将 Q-learning 扩展到神经网络形式——深度 Q 网络(deep Q network,DQN)算法。由于 DQN 是离线策略算法,因此我们在收集数据的时候可以使用一个-贪婪策略来平衡探索与利用,将收集到的数据存储起来,在后续的训练中使用。

1.2 经验回放

为了更好地将 Q-learning 和深度神经网络结合,DQN 算法采用了经验回放(experience replay)方法,具体做法为维护一个回放缓冲区,将每次从环境中采样得到的四元组数据(状态、动作、奖励、下一状态)存储到回放缓冲区中,训练 Q 网络的时候再从回放缓冲区中随机采样若干数据来进行训练。这么做可以起到以下两个作用,一个是使样本满足独立同分布,另一个是提高样本利用率。

1.3 目标网络

在简介中我们可以看到我们想更新

Q

ω

(

s

,

a

)

Q_{\omega}(s, a)

Qω(s,a)

中的参数w使得它离目标

r

+

γ

max

a

′

∈

A

Q

(

s

′

,

a

′

)

r+\gamma \max _{a^{\prime} \in \mathcal{A}} Q\left(s^{\prime}, a^{\prime}\right)

r+γa′∈AmaxQ(s′,a′)

更近,但是目标中它和目标共用一个网络会导致高估的问题,在更新参数的过程中,我们的目标也会变化,这就相当于你在打一个移动的靶子,难度比较高。

因此我们可以引入target network(目标网络)来解决这个问题。实际上就是固定目标网络,每隔一段时间将训练网络的参数更新到目标网络。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-BoVXwXld-1651054585872)(C:\Users\admin\AppData\Roaming\Typora\typora-user-images\image-20220427181530250.png)]](https://i-blog.csdnimg.cn/blog_migrate/c73a232f85ddf954e3308ecb83241368.png)

2.实践部分

代码参考自动手学强化学习(jupyter notebook版本):https://github.com/boyu-ai/Hands-on-RL

使用pycharm打开的请查看:https://github.com/zxs-000202/dsx-rl

方便的话给个star~

创建文件rl_utils.py

from tqdm import tqdm

import numpy as np

import torch

import collections

import random

class ReplayBuffer:

def __init__(self, capacity):

self.buffer = collections.deque(maxlen=capacity)

def add(self, state, action, reward, next_state, done):

self.buffer.append((state, action, reward, next_state, done))

def sample(self, batch_size):

transitions = random.sample(self.buffer, batch_size)

state, action, reward, next_state, done = zip(*transitions)

return np.array(state), action, reward, np.array(next_state), done

def size(self):

return len(self.buffer)

def moving_average(a, window_size):

cumulative_sum = np.cumsum(np.insert(a, 0, 0))

middle = (cumulative_sum[window_size:] - cumulative_sum[:-window_size]) / window_size

r = np.arange(1, window_size - 1, 2)

begin = np.cumsum(a[:window_size - 1])[::2] / r

end = (np.cumsum(a[:-window_size:-1])[::2] / r)[::-1]

return np.concatenate((begin, middle, end))

def train_on_policy_agent(env, agent, num_episodes):

return_list = []

for i in range(10):

with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:

for i_episode in range(int(num_episodes / 10)):

episode_return = 0

transition_dict = {'states': [], 'actions': [], 'next_states': [], 'rewards': [], 'dones': []}

state = env.reset()

done = False

while not done:

action = agent.take_action(state)

next_state, reward, done, _ = env.step(action)

transition_dict['states'].append(state)

transition_dict['actions'].append(action)

transition_dict['next_states'].append(next_state)

transition_dict['rewards'].append(reward)

transition_dict['dones'].append(done)

state = next_state

episode_return += reward

return_list.append(episode_return)

agent.update(transition_dict)

if (i_episode + 1) % 10 == 0:

pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1),

'return': '%.3f' % np.mean(return_list[-10:])})

pbar.update(1)

return return_list

def train_off_policy_agent(env, agent, num_episodes, replay_buffer, minimal_size, batch_size):

return_list = []

for i in range(10):

with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:

for i_episode in range(int(num_episodes / 10)):

episode_return = 0

state = env.reset()

done = False

while not done:

action = agent.take_action(state)

next_state, reward, done, _ = env.step(action)

replay_buffer.add(state, action, reward, next_state, done)

state = next_state

episode_return += reward

if replay_buffer.size() > minimal_size:

b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size)

transition_dict = {'states': b_s, 'actions': b_a, 'next_states': b_ns, 'rewards': b_r,

'dones': b_d}

agent.update(transition_dict)

return_list.append(episode_return)

if (i_episode + 1) % 10 == 0:

pbar.set_postfix({'episode': '%d' % (num_episodes / 10 * i + i_episode + 1),

'return': '%.3f' % np.mean(return_list[-10:])})

pbar.update(1)

return return_list

def compute_advantage(gamma, lmbda, td_delta):

td_delta = td_delta.detach().numpy()

advantage_list = []

advantage = 0.0

for delta in td_delta[::-1]:

advantage = gamma * lmbda * advantage + delta

advantage_list.append(advantage)

advantage_list.reverse()

return torch.tensor(advantage_list, dtype=torch.float)

import random

import gym

import numpy as np

import collections

from tqdm import tqdm

import torch

import torch.nn.functional as F

import matplotlib.pyplot as plt

import rl_utils

class ReplayBuffer:

''' 经验回放池 '''

def __init__(self, capacity):

self.buffer = collections.deque(maxlen=capacity) # 队列,先进先出

def add(self, state, action, reward, next_state, done): # 将数据加入buffer

self.buffer.append((state, action, reward, next_state, done))

def sample(self, batch_size): # 从buffer中采样数据,数量为batch_size

transitions = random.sample(self.buffer, batch_size)

state, action, reward, next_state, done = zip(*transitions)

return np.array(state), action, reward, np.array(next_state), done

def size(self): # 目前buffer中数据的数量

return len(self.buffer)

class Qnet(torch.nn.Module):

''' 只有一层隐藏层的Q网络 '''

def __init__(self, state_dim, hidden_dim, action_dim):

super(Qnet, self).__init__()

self.fc1 = torch.nn.Linear(state_dim, hidden_dim)

self.fc2 = torch.nn.Linear(hidden_dim, action_dim)

def forward(self, x):

x = F.relu(self.fc1(x)) # 隐藏层使用ReLU激活函数

return self.fc2(x)

class DQN:

''' DQN算法 '''

def __init__(self, state_dim, hidden_dim, action_dim, learning_rate, gamma,

epsilon, target_update, device):

self.action_dim = action_dim

self.q_net = Qnet(state_dim, hidden_dim,

self.action_dim).to(device) # Q网络

# 目标网络

self.target_q_net = Qnet(state_dim, hidden_dim,

self.action_dim).to(device)

# 使用Adam优化器

self.optimizer = torch.optim.Adam(self.q_net.parameters(),

lr=learning_rate)

self.gamma = gamma # 折扣因子

self.epsilon = epsilon # epsilon-贪婪策略

self.target_update = target_update # 目标网络更新频率

self.count = 0 # 计数器,记录更新次数

self.device = device

def take_action(self, state): # epsilon-贪婪策略采取动作

if np.random.random() < self.epsilon:

action = np.random.randint(self.action_dim)

else:

state = torch.tensor([state], dtype=torch.float).to(self.device)

action = self.q_net(state).argmax().item()

return action

def update(self, transition_dict):

states = torch.tensor(transition_dict['states'],

dtype=torch.float).to(self.device)

actions = torch.tensor(transition_dict['actions']).view(-1, 1).to(

self.device)

rewards = torch.tensor(transition_dict['rewards'],

dtype=torch.float).view(-1, 1).to(self.device)

next_states = torch.tensor(transition_dict['next_states'],

dtype=torch.float).to(self.device)

dones = torch.tensor(transition_dict['dones'],

dtype=torch.float).view(-1, 1).to(self.device)

q_values = self.q_net(states).gather(1, actions) # Q值

# 下个状态的最大Q值

max_next_q_values = self.target_q_net(next_states).max(1)[0].view(

-1, 1)

q_targets = rewards + self.gamma * max_next_q_values * (1 - dones

) # TD误差目标

dqn_loss = torch.mean(F.mse_loss(q_values, q_targets)) # 均方误差损失函数

self.optimizer.zero_grad() # PyTorch中默认梯度会累积,这里需要显式将梯度置为0

dqn_loss.backward() # 反向传播更新参数

self.optimizer.step()

if self.count % self.target_update == 0:

self.target_q_net.load_state_dict(

self.q_net.state_dict()) # 更新目标网络

self.count += 1

lr = 2e-3

num_episodes = 500

hidden_dim = 128

gamma = 0.98

epsilon = 0.01

target_update = 10

buffer_size = 10000

minimal_size = 500

batch_size = 64

device = torch.device("cuda") if torch.cuda.is_available() else torch.device(

"cpu")

env_name = 'CartPole-v0'

env = gym.make(env_name)

random.seed(0)

np.random.seed(0)

env.seed(0)

torch.manual_seed(0)

replay_buffer = ReplayBuffer(buffer_size)

state_dim = env.observation_space.shape[0]

action_dim = env.action_space.n

agent = DQN(state_dim, hidden_dim, action_dim, lr, gamma, epsilon,

target_update, device)

return_list = []

for i in range(10):

with tqdm(total=int(num_episodes / 10), desc='Iteration %d' % i) as pbar:

for i_episode in range(int(num_episodes / 10)):

episode_return = 0

state = env.reset()

done = False

while not done:

action = agent.take_action(state)

next_state, reward, done, _ = env.step(action)

replay_buffer.add(state, action, reward, next_state, done)

state = next_state

episode_return += reward

# 当buffer数据的数量超过一定值后,才进行Q网络训练

if replay_buffer.size() > minimal_size:

b_s, b_a, b_r, b_ns, b_d = replay_buffer.sample(batch_size)

transition_dict = {

'states': b_s,

'actions': b_a,

'next_states': b_ns,

'rewards': b_r,

'dones': b_d

}

agent.update(transition_dict)

return_list.append(episode_return)

if (i_episode + 1) % 10 == 0:

pbar.set_postfix({

'episode':

'%d' % (num_episodes / 10 * i + i_episode + 1),

'return':

'%.3f' % np.mean(return_list[-10:])

})

pbar.update(1)

episodes_list = list(range(len(return_list)))

plt.plot(episodes_list, return_list)

plt.xlabel('Episodes')

plt.ylabel('Returns')

plt.title('DQN on {}'.format(env_name))

plt.show()

mv_return = rl_utils.moving_average(return_list, 9)

plt.plot(episodes_list, mv_return)

plt.xlabel('Episodes')

plt.ylabel('Returns')

plt.title('DQN on {}'.format(env_name))

plt.show()

更多推荐

已为社区贡献8条内容

已为社区贡献8条内容

所有评论(0)