Implementing RNN and LSTM Regression Prediction with Keras (Python)


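Having covered the theory behind RNNs and LSTMs, this post implements these models with Keras.

Theory:

- Recurrent Neural Networks (RNN)

- LSTM Neural Networks and GRU

Implementing neural networks with Keras:

- Implementing a Fully Connected Neural Network with Keras (Python)

Installing Keras:

- TensorFlow and Keras Version Compatibility and Environment Setup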

1. Environment Setup

import matplotlib.pyplot as plt

from math import sqrt

from matplotlib import pyplot

import pandas as pd

import numpy as np

from numpy import concatenate

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

from keras.models import Sequential

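# keras.layers.core may not exist in newer Keras releases (e.g. Keras 3); importing from keras.layers works across versions

from keras.layers import Dense, Dropout, Activation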

from keras import layers

from keras.optimizers import Adam

2. Loading and Preprocessing the Data

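The dataset has 26 feature variables and one target value, 14,121 samples in total: the first 15 years (10,066 samples) form the training set, and the last 5 years (4,055 samples) are used for prediction.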

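The data are split into input (X) and output (Y) variables and then, in chronological order, into training and test sets. Finally, the inputs are reshaped into the format RNN and LSTM layers expect: [samples, timesteps, features].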
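The split and reshape can be checked on synthetic data. This is a minimal numpy sketch, assuming a random array in place of data.csv (not available here) and the same 10,066-row training cut; the helper name `split_and_reshape` is hypothetical. Unlike the post, which fits MinMaxScaler on all rows, it computes the [0, 1] scaling from the training rows only, so no test-set statistics leak into training (test values may then fall slightly outside [0, 1]).

```python
import numpy as np

def split_and_reshape(values, n_train):
    """Scale to [0, 1], split chronologically, and reshape X to [samples, timesteps, features].

    Assumes the last column of `values` is the target, as in the post.
    """
    # Fit the min-max scaling on the training rows only (the post fits on all rows)
    train_rows = values[:n_train]
    lo = train_rows.min(axis=0)
    hi = train_rows.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)  # avoid dividing by zero for constant columns
    scaled = (values - lo) / span

    X, Y = scaled[:, :-1], scaled[:, -1]
    train_x, test_x = X[:n_train], X[n_train:]  # earlier years train, later years test
    train_y, test_y = Y[:n_train], Y[n_train:]

    n_features = X.shape[1]
    # One time step per sample: each row becomes a sequence of length 1
    train_x = train_x.reshape(-1, 1, n_features)
    test_x = test_x.reshape(-1, 1, n_features)
    return train_x, train_y, test_x, test_y

# Synthetic stand-in for data.csv: 14,121 rows, 26 features + 1 target
rng = np.random.default_rng(0)
values = rng.normal(size=(14121, 27))
train_x, train_y, test_x, test_y = split_and_reshape(values, n_train=10066)
print(train_x.shape, test_x.shape)
```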

filename = 'data.csv'

data = pd.read_csv(filename)

values = data.values

# Normalize every column to [0, 1] to speed up convergence

scaler = MinMaxScaler(feature_range=(0, 1))

scaled = scaler.fit_transform(values)

# The last column is the target; the other 26 columns are the features

Y = scaled[:, -1]

X = scaled[:, 0:-1]

# First 15 years for training, last 5 years for testing

train_x = X[0: 10066]

train_y = Y[0: 10066]

test_x = X[10066:]

test_y = Y[10066:]

"""

Input shape required by RNN layers: [number of samples, number of time steps the recurrent cell is unrolled over, number of input features per time step]

"""

train_x = np.reshape(train_x, (train_x.shape[0], 1, train_x.shape[1]))

test_x = np.reshape(test_x, (test_x.shape[0], 1, test_x.shape[1]))

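My dataset cannot be split into yearly sequences because the data differ from year to year, so it has no time-series structure. I therefore set the number of time steps the recurrent cell is unrolled over to 1.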
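With a single time step, the recurrence has nothing to carry: Keras starts each sequence from a zero hidden state, so one SimpleRNN step reduces to a fully connected layer with a tanh activation. The numpy sketch below checks this, assuming the standard SimpleRNN update h_t = tanh(x_t W + h_{t-1} U + b) with random weights; it illustrates the math and is not Keras code.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, units = 26, 100
W = rng.normal(scale=0.1, size=(n_features, units))  # input kernel
U = rng.normal(scale=0.1, size=(units, units))       # recurrent kernel
b = rng.normal(scale=0.1, size=units)                # bias

x = rng.normal(size=(8, 1, n_features))  # 8 samples, 1 time step, 26 features
h0 = np.zeros((8, units))                # zero initial state, the Keras default

# SimpleRNN update for the single step: tanh(x_t W + h_{t-1} U + b)
rnn_out = np.tanh(x[:, 0, :] @ W + h0 @ U + b)
# Plain fully connected layer with tanh activation, no recurrent term at all
dense_out = np.tanh(x[:, 0, :] @ W + b)

# The recurrent term h0 @ U is all zeros, so U has no effect on the output
print(np.allclose(rnn_out, dense_out))
```

In other words, with timesteps=1 the RNN here behaves essentially like a fully connected network whose recurrent weights never influence the output.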

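For an ordinary time series, such as using 60 days of stock prices to predict the price on day 61, the number of time steps is 60. For details, see: Deep Learning 100 Examples: Stock Prediction with a Recurrent Neural Network (RNN).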

"""

使用前60天的开盘价作为输入特征x_train

第61天的开盘价作为输入标签y_train

for循环共构建2426-300-60=2066组训练数据。

共构建300-60=260组测试数据

"""

for i in range(60, len(training_set)):

x_train.append(training_set[i - 60:i, 0])

y_train.append(training_set[i, 0])

for i in range(60, len(test_set)):

x_test.append(test_set[i - 60:i, 0])

y_test.append(test_set[i, 0])

x_train, y_train = np.array(x_train), np.array(y_train) # x_train形状为:(2066, 60, 1)

x_test, y_test = np.array(x_test), np.array(y_test)

"""

输入要求:[送入样本数, 循环核时间展开步数, 每个时间步输入特征个数]

"""

x_train = np.reshape(x_train, (x_train.shape[0], 60, 1))

x_test = np.reshape(x_test, (x_test.shape[0], 60, 1))
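The windowing loop above can also be written as a reusable helper. This is a sketch, assuming `series` is a 2D array of scaled prices with one column (as training_set and test_set are in the referenced stock example); the name `make_windows` is hypothetical, and it relies on numpy's `sliding_window_view` (NumPy 1.20 or later).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def make_windows(series, window=60):
    """Turn a (n, 1) series into inputs (n - window, window, 1) and targets (n - window,)."""
    values = series[:, 0]
    # All windows of `window` consecutive values; the last one has no following target, so drop it
    x = sliding_window_view(values, window)[:-1]
    y = values[window:]  # the value right after each window
    return x.reshape(-1, window, 1), y

# Random stand-ins with the row counts from the stock example
training_set = np.random.default_rng(0).random((2126, 1))
test_set = np.random.default_rng(1).random((300, 1))
x_train, y_train = make_windows(training_set)
x_test, y_test = make_windows(test_set)
print(x_train.shape, x_test.shape)
```

Window j covers values[j:j+60] and its target is values[j+60], which is exactly what the loop produces for i = j + 60.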

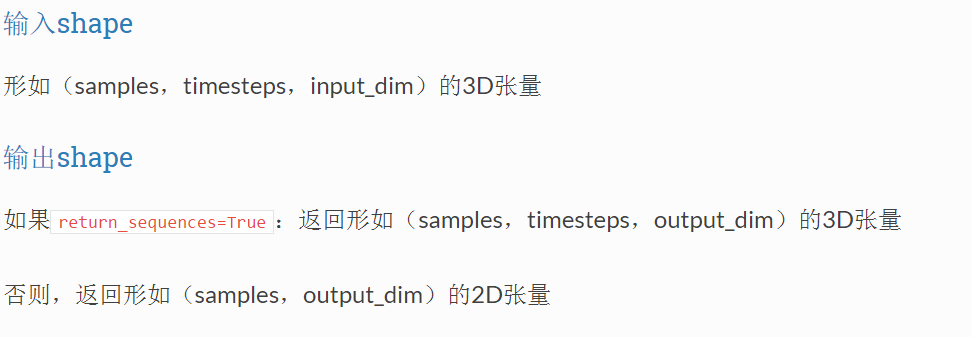

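In all Keras RNN layers, including SimpleRNN, LSTM, and GRU, the input and output data formats are as follows: the input is a 3D tensor of shape (batch_size, timesteps, features); the output has shape (batch_size, units) by default, or (batch_size, timesteps, units) when return_sequences=True.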
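To make these shapes concrete, here is a minimal numpy forward pass of a SimpleRNN layer with random weights. It illustrates the standard tanh recurrence (not the Keras implementation; the function name `simple_rnn` is hypothetical) and shows how return_sequences changes the output shape in the two-layer stack used in the model.

```python
import numpy as np

def simple_rnn(x, units, return_sequences=False, seed=0):
    """Forward pass of a randomly initialised SimpleRNN over x of shape (batch, timesteps, features)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(x.shape[2], units))  # input kernel
    U = rng.normal(scale=0.1, size=(units, units))       # recurrent kernel
    b = np.zeros(units)                                   # bias
    h = np.zeros((x.shape[0], units))                     # zero initial state
    outputs = []
    for t in range(x.shape[1]):
        h = np.tanh(x[:, t, :] @ W + h @ U + b)
        outputs.append(h)
    # return_sequences=True keeps every step; otherwise only the last hidden state is returned
    return np.stack(outputs, axis=1) if return_sequences else h

x = np.random.default_rng(1).normal(size=(64, 1, 26))  # one batch shaped like train_x
h1 = simple_rnn(x, 100, return_sequences=True)          # (64, 1, 100), like simple_rnn_1
h2 = simple_rnn(h1, 100)                                # (64, 100), like simple_rnn_2
print(h1.shape, h2.shape)
```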

3. Building and Compiling the Model

The RNN model uses layers.SimpleRNN, and the LSTM model uses layers.LSTM.
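The two layer types differ in the cell update. A SimpleRNN computes h_t = tanh(x_t W + h_{t-1} U + b), while an LSTM adds input, forget and output gates plus a separate cell state. Below is a minimal numpy sketch of one LSTM step in the standard formulation, with random weights, logistic-sigmoid gates and hypothetical names (not the Keras source). It uses tanh for the cell candidate and output, which the post's LSTM layers replace with relu through activation='relu'.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_step(x, h, c, W, U, b):
    """One LSTM time step.

    x: (batch, features); h, c: (batch, units);
    W: (features, 4*units), U: (units, 4*units), b: (4*units,).
    The four column blocks are the input gate, forget gate, cell candidate and output gate.
    """
    z = x @ W + h @ U + b
    i, f, g, o = np.split(z, 4, axis=-1)
    c_new = sigmoid(f) * c + sigmoid(i) * np.tanh(g)  # keep part of the old cell state, add new content
    h_new = sigmoid(o) * np.tanh(c_new)               # the output gate decides what is exposed
    return h_new, c_new

rng = np.random.default_rng(0)
features, units, batch = 26, 100, 8
W = rng.normal(scale=0.1, size=(features, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)
h = np.zeros((batch, units))  # zero initial hidden state
c = np.zeros((batch, units))  # zero initial cell state
x = rng.normal(size=(batch, features))
h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)
```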

RNN model:

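# Create the model

model = Sequential()

# Recurrent neural network

# Hidden layer with 100 units; return_sequences=True passes the full sequence to the next recurrent layer

model.add(layers.SimpleRNN(units=100, return_sequences=True))

model.add(Activation('relu'))

# Dropout layer to reduce overfitting

model.add(Dropout(0.1))

# Hidden layer with 100 units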

model.add(layers.SimpleRNN(units=100))

model.add(Activation('relu'))

model.add(Dropout(0.1))

model.add(Dense(1))
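One detail of this stack: layers.SimpleRNN already applies its default activation (tanh) inside the layer, so the separate Activation('relu') that follows acts on values in (-1, 1). The numpy sketch below shows the combined effect on a few made-up pre-activation values: negative outputs are zeroed and the rest stay below 1. Passing activation='relu' to SimpleRNN instead would replace tanh rather than stack on top of it.

```python
import numpy as np

z = np.linspace(-5, 5, 11)             # example pre-activation values inside the SimpleRNN cell
rnn_out = np.tanh(z)                   # SimpleRNN's default activation is tanh
after_relu = np.maximum(rnn_out, 0.0)  # the extra Activation('relu') layer
print(after_relu)
```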

LSTM model:

# Create the model

model = Sequential()

# LSTM network

# Hidden layer with 100 units

model.add(layers.LSTM(units=100, activation='relu', return_sequences=True))

# Hidden layer with 100 units

model.add(layers.LSTM(units=100, activation='relu'))

model.add(Dense(1))

# Compile the model (the same call is used for the RNN model)

# Use the efficient Adam optimizer and the mean squared error loss

model.compile(loss='mean_squared_error', optimizer=Adam())
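For reference, the loss and optimizer chosen in compile() can be written out in a few lines. This is a minimal numpy sketch of mean squared error and of the standard Adam update rule (Kingma and Ba) with bias-corrected moment estimates, assuming the usual defaults learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-7; it fits a single weight on a toy linear problem and is not Keras's own implementation. The helper names are hypothetical.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error, as selected by loss='mean_squared_error'."""
    return np.mean((y_true - y_pred) ** 2)

def adam_fit(x, y, steps=5000, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-7):
    """Fit y ~ w * x by minimising MSE with Adam; returns the learned w."""
    w, m, v = 0.0, 0.0, 0.0
    for t in range(1, steps + 1):
        grad = np.mean(-2.0 * x * (y - w * x))      # d(MSE)/dw
        m = beta_1 * m + (1 - beta_1) * grad        # running mean of gradients
        v = beta_2 * v + (1 - beta_2) * grad ** 2   # running mean of squared gradients
        m_hat = m / (1 - beta_1 ** t)               # bias corrections for the zero start
        v_hat = v / (1 - beta_2 ** t)
        w -= lr * m_hat / (np.sqrt(v_hat) + eps)    # per-parameter adaptive step
    return w

x = np.linspace(0, 1, 50)
y = 0.8 * x  # toy data with true weight 0.8
w = adam_fit(x, y)
print(w, mse(y, w * x))
```

Dividing by the square root of v_hat makes the step size roughly independent of the gradient's scale, which is one reason Adam works well with little tuning.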

4. Training the Model

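# Train the model; the test set doubles as validation data, so val_loss is the test-set loss

history = model.fit(train_x, train_y, epochs=30, batch_size=64,

                    validation_data=(test_x, test_y), verbose=2)

model.summary()  # Show the network architecture and the number of parameters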
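The parameter counts that model.summary() prints can be checked by hand. A SimpleRNN layer has an input kernel (input_dim × units), a recurrent kernel (units × units) and a bias (units); an LSTM layer has four such blocks (input gate, forget gate, cell candidate, output gate); a Dense layer has input_dim × units weights plus units biases. The helper names below are hypothetical. The SimpleRNN total reproduces the 32,901 in the summary output of the results section, while the LSTM total is computed here, not taken from the post.

```python
def simple_rnn_params(input_dim, units):
    return units * (input_dim + units + 1)      # kernel + recurrent kernel + bias

def lstm_params(input_dim, units):
    return 4 * units * (input_dim + units + 1)  # four gate/candidate blocks

def dense_params(input_dim, units):
    return units * (input_dim + 1)              # weights + bias

# 26 features -> SimpleRNN(100) -> SimpleRNN(100) -> Dense(1); Activation and Dropout add no parameters
rnn_total = simple_rnn_params(26, 100) + simple_rnn_params(100, 100) + dense_params(100, 1)
# 26 features -> LSTM(100) -> LSTM(100) -> Dense(1)
lstm_total = lstm_params(26, 100) + lstm_params(100, 100) + dense_params(100, 1)
print(rnn_total, lstm_total)
```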

5. Prediction and Visualization

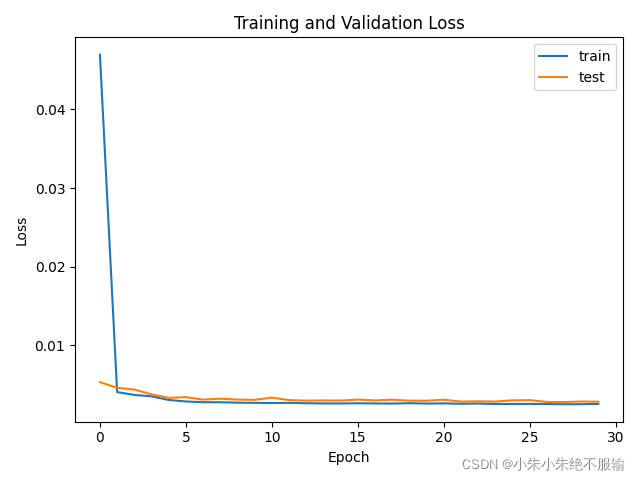

# Loss curves

pyplot.plot(history.history['loss'], label='train')

pyplot.plot(history.history['val_loss'], label='test')

pyplot.title('Training and Validation Loss')

pyplot.ylabel('Loss')

pyplot.xlabel('Epoch')

pyplot.legend()

pyplot.show()

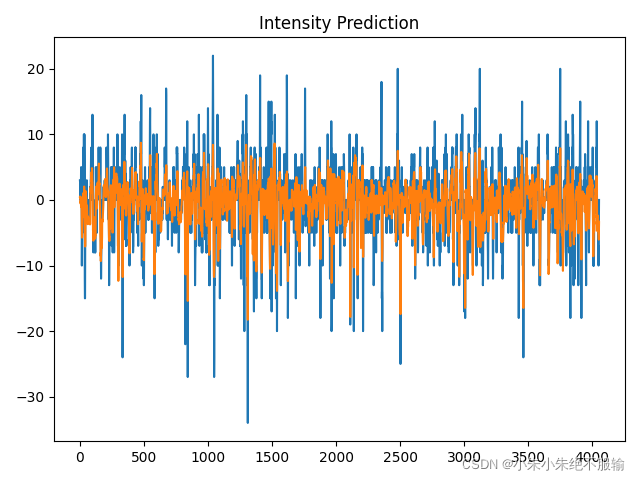

# Predict

yhat = model.predict(test_x)

# Back to 2D: (samples, features)

test_x = np.reshape(test_x, (test_x.shape[0], test_x.shape[2]))

# Undo the scaling of the predicted y: inverse_transform expects all 27 columns, so append the prediction to the features

inv_yhat0 = concatenate((test_x, yhat), axis=1)

inv_yhat1 = scaler.inverse_transform(inv_yhat0)

inv_yhat = inv_yhat1[:, -1]

# Undo the scaling of the true y in the same way

test_y = test_y.reshape(len(test_y), 1)

inv_y0 = concatenate((test_x, test_y), axis=1)

inv_y1 = scaler.inverse_transform(inv_y0)

inv_y = inv_y1[:, -1]

print(inv_y)

# Compute R2

r_2 = r2_score(inv_y, inv_yhat)

print('Test r_2: %.3f' % r_2)

# Compute MAE

mae = mean_absolute_error(inv_y, inv_yhat)

print('Test MAE: %.3f' % mae)

# Compute RMSE

rmse = sqrt(mean_squared_error(inv_y, inv_yhat))

print('Test RMSE: %.3f' % rmse)

# True vs. predicted values on the test set

plt.plot(inv_y, label='true')

plt.plot(inv_yhat, label='predicted')

plt.title('Intensity Prediction')

plt.legend()

plt.show()
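The concatenate-then-inverse_transform trick works because min-max scaling treats each column independently, so only the target column's minimum and maximum matter for y. A minimal numpy sketch of the equivalent direct computation, assuming [0, 1] min-max scaling fitted column by column on made-up data (the helper name `inverse_target` is hypothetical):

```python
import numpy as np

def inverse_target(y_scaled, col_min, col_max):
    """Undo [0, 1] min-max scaling for a single column: y = y_scaled * (max - min) + min."""
    return y_scaled * (col_max - col_min) + col_min

rng = np.random.default_rng(0)
values = rng.normal(loc=5.0, scale=3.0, size=(100, 27))  # stand-in data: 26 features + target
lo, hi = values.min(axis=0), values.max(axis=0)
scaled = (values - lo) / (hi - lo)  # per-column scaling, as MinMaxScaler(feature_range=(0, 1)) does

# Full-matrix inverse (what concatenate + inverse_transform does) vs. the target column alone
full_inverse = scaled * (hi - lo) + lo
y_direct = inverse_target(scaled[:, -1], lo[-1], hi[-1])
print(np.allclose(full_inverse[:, -1], y_direct))
```

With scikit-learn, the target column's bounds are available as scaler.data_min_[-1] and scaler.data_max_[-1], so the predictions could be unscaled without building the 27-column arrays.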

6. Results

Train on 10066 samples, validate on 4055 samples

Epoch 1/30

- 1s - loss: 0.0438 - val_loss: 0.0046

Epoch 2/30

- 0s - loss: 0.0070 - val_loss: 0.0047

Epoch 3/30

- 0s - loss: 0.0057 - val_loss: 0.0036

Epoch 4/30

- 0s - loss: 0.0050 - val_loss: 0.0042

Epoch 5/30

- 0s - loss: 0.0046 - val_loss: 0.0037

Epoch 6/30

- 0s - loss: 0.0044 - val_loss: 0.0036

Epoch 7/30

- 0s - loss: 0.0042 - val_loss: 0.0036

Epoch 8/30

- 0s - loss: 0.0041 - val_loss: 0.0037

Epoch 9/30

- 0s - loss: 0.0040 - val_loss: 0.0034

Epoch 10/30

- 0s - loss: 0.0040 - val_loss: 0.0032

Epoch 11/30

- 0s - loss: 0.0039 - val_loss: 0.0030

Epoch 12/30

- 0s - loss: 0.0038 - val_loss: 0.0030

Epoch 13/30

- 0s - loss: 0.0037 - val_loss: 0.0033

Epoch 14/30

- 0s - loss: 0.0036 - val_loss: 0.0031

Epoch 15/30

- 0s - loss: 0.0036 - val_loss: 0.0030

Epoch 16/30

- 0s - loss: 0.0035 - val_loss: 0.0034

Epoch 17/30

- 0s - loss: 0.0034 - val_loss: 0.0029

Epoch 18/30

- 0s - loss: 0.0033 - val_loss: 0.0037

Epoch 19/30

- 0s - loss: 0.0033 - val_loss: 0.0029

Epoch 20/30

- 0s - loss: 0.0033 - val_loss: 0.0029

Epoch 21/30

- 0s - loss: 0.0031 - val_loss: 0.0029

Epoch 22/30

- 0s - loss: 0.0032 - val_loss: 0.0028

Epoch 23/30

- 0s - loss: 0.0031 - val_loss: 0.0029

Epoch 24/30

- 0s - loss: 0.0030 - val_loss: 0.0033

Epoch 25/30

- 0s - loss: 0.0030 - val_loss: 0.0029

Epoch 26/30

- 0s - loss: 0.0030 - val_loss: 0.0029

Epoch 27/30

- 0s - loss: 0.0029 - val_loss: 0.0029

Epoch 28/30

- 0s - loss: 0.0028 - val_loss: 0.0028

Epoch 29/30

- 0s - loss: 0.0029 - val_loss: 0.0028

Epoch 30/30

- 0s - loss: 0.0028 - val_loss: 0.0028

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

simple_rnn_1 (SimpleRNN) (None, 1, 100) 12700

_________________________________________________________________

activation_1 (Activation) (None, 1, 100) 0

_________________________________________________________________

dropout_1 (Dropout) (None, 1, 100) 0

_________________________________________________________________

simple_rnn_2 (SimpleRNN) (None, 100) 20100

_________________________________________________________________

activation_2 (Activation) (None, 100) 0

_________________________________________________________________

dropout_2 (Dropout) (None, 100) 0

_________________________________________________________________

dense_1 (Dense) (None, 1) 101

=================================================================

Total params: 32,901

Trainable params: 32,901

Non-trainable params: 0

_________________________________________________________________

[ 3. 3. 0. ... -5. -2. 0.]

Test r_2: 0.566

Test MAE: 2.452

Test RMSE: 3.371

Process finished with exit code 0
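The three metrics above follow standard definitions: MAE is the mean absolute error, RMSE is the square root of the mean squared error, and R² = 1 − SS_res / SS_tot, so the reported R² of 0.566 means the model explains about 57% of the variance of the test target. A numpy sketch of these formulas, with a hypothetical helper name and small made-up arrays; for 1-D inputs it should agree with sklearn's r2_score, mean_absolute_error and the square root of mean_squared_error.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return (R2, MAE, RMSE) for 1-D arrays."""
    err = y_true - y_pred
    mae = np.mean(np.abs(err))
    rmse = np.sqrt(np.mean(err ** 2))
    ss_res = np.sum(err ** 2)                         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)    # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return r2, mae, rmse

y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([1.5, 2.0, 2.5, 4.5])
r2, mae, rmse = regression_metrics(y_true, y_pred)
print(r2, mae, rmse)
```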

7. Complete Keras RNN Code:

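Here the RNN is used for ordinary regression without any time-series structure, so treat this only as a simple reference. For time-series regression, see the stock prediction example mentioned above.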

import matplotlib.pyplot as plt

from math import sqrt

from matplotlib import pyplot

import pandas as pd

import numpy as np

from numpy import concatenate

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

from keras.models import Sequential

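# keras.layers.core may not exist in newer Keras releases (e.g. Keras 3); importing from keras.layers works across versions

from keras.layers import Dense, Dropout, Activation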

from keras import layers

from keras.optimizers import Adam

'''Neural network regression model implemented with Keras'''

# Read the data

filename = 'data.csv'

data = pd.read_csv(filename)

values = data.values

# Normalize every column to [0, 1] to speed up convergence

scaler = MinMaxScaler(feature_range=(0, 1))

scaled = scaler.fit_transform(values)

# The last column is the target; the other columns are the features

Y = scaled[:, -1]

X = scaled[:, 0:-1]

# First 15 years for training, last 5 years for testing

train_x = X[0: 10066]

train_y = Y[0: 10066]

test_x = X[10066:]

test_y = Y[10066:]

# Number of input features (26); avoid naming this "input", which shadows a Python builtin

n_features = X.shape[1]

print(train_x.shape)

"""

Input shape required by RNN layers: [number of samples, number of time steps the recurrent cell is unrolled over, number of input features per time step]

"""

train_x = np.reshape(train_x, (train_x.shape[0], 1, n_features))

test_x = np.reshape(test_x, (test_x.shape[0], 1, n_features))

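# Create the model

model = Sequential()

# Recurrent neural network

# Hidden layer with 100 units; return_sequences=True passes the full sequence to the next recurrent layer

model.add(layers.SimpleRNN(units=100, return_sequences=True))

model.add(Activation('relu'))

# Dropout layer to reduce overfitting

model.add(Dropout(0.1))

# Hidden layer with 100 units

model.add(layers.SimpleRNN(units=100))

model.add(Activation('relu'))

model.add(Dropout(0.1))

model.add(Dense(1))

# Compile the model

# Use the efficient Adam optimizer and the mean squared error loss

model.compile(loss='mean_squared_error', optimizer=Adam())

# Train the model; the test set doubles as validation data, so val_loss is the test-set loss

history = model.fit(train_x, train_y, epochs=30, batch_size=64,

                    validation_data=(test_x, test_y), verbose=2)

model.summary()  # Show the network architecture and the number of parameters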

# Loss curves

pyplot.plot(history.history['loss'], label='train')

pyplot.plot(history.history['val_loss'], label='test')

pyplot.title('Training and Validation Loss')

pyplot.ylabel('Loss')

pyplot.xlabel('Epoch')

pyplot.legend()

pyplot.show()

# Predict

yhat = model.predict(test_x)

# Back to 2D: (samples, features)

test_x = np.reshape(test_x, (test_x.shape[0], test_x.shape[2]))

# Undo the scaling of the predicted y: inverse_transform expects all 27 columns, so append the prediction to the features

inv_yhat0 = concatenate((test_x, yhat), axis=1)

inv_yhat1 = scaler.inverse_transform(inv_yhat0)

inv_yhat = inv_yhat1[:, -1]

# Undo the scaling of the true y in the same way

test_y = test_y.reshape(len(test_y), 1)

inv_y0 = concatenate((test_x, test_y), axis=1)

inv_y1 = scaler.inverse_transform(inv_y0)

inv_y = inv_y1[:, -1]

print(inv_y)

# Compute R2

r_2 = r2_score(inv_y, inv_yhat)

print('Test r_2: %.3f' % r_2)

# Compute MAE

mae = mean_absolute_error(inv_y, inv_yhat)

print('Test MAE: %.3f' % mae)

# Compute RMSE

rmse = sqrt(mean_squared_error(inv_y, inv_yhat))

print('Test RMSE: %.3f' % rmse)

# True vs. predicted values on the test set

plt.plot(inv_y, label='true')

plt.plot(inv_yhat, label='predicted')

plt.title('Intensity Prediction')

plt.legend()

plt.show()

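References:

- Deep Learning 100 Examples: Stock Prediction with a Recurrent Neural Network (RNN)

- Deep Learning 100 Examples: Stock Prediction with a Recurrent Neural Network (LSTM)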
