logistic/softmax回归梯度下降法公式推导与代码实现

本文对logistic回归和softmax回归的梯度公式进行了推导,并用代码实现了梯度下降算法更新参数

logistic回归

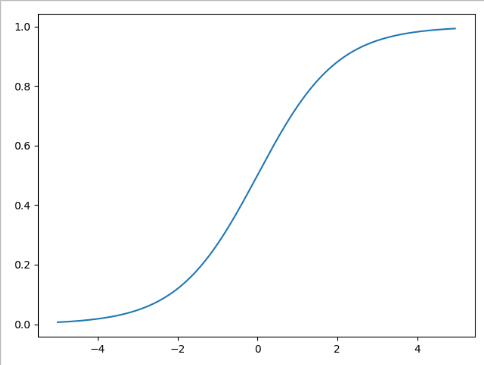

logistic回归是一种线性回归分析模型,常用于二分类问题,具体公式如下:对于样本,它被分类到正样本的置信度为

其中,和

为待求参数,为了后续求解方便,我们令

,令

,这样,logistic公式便可写成如下形式

logistic参数求解

logistic回归分析中最重要的是参数的求解,这里我使用交叉熵函数和均方误差函数作为损失函数来进行梯度下降

交叉熵函数

对于二分类问题,其交叉熵损失函数定义如下

接下来只需要对其求的导数即可,在这之前,我们先求出

以便后续计算

利用该公式,可以求得

均方误差损失函数

我们同样可以用均方误差损失函数来求它的参数

参数更新

在求得梯度后,设学习率为,则参数更新规则如下

softmax回归

由于logsitic回归只适用于二分类问题,为了使其能应用于多分类问题,在其基础上,使用softmax函数替代 sigmoid函数即可将输出映射到多维向量,在softmax回归中,样本属于类别

的概率为

于是,softmax的为以下形式

它的参数为

softmax参数求解

我们仍然使用交叉熵和均方误差损失函数来进行梯度下降,在多分类问题中,标签不再是0或者1这种标量,而是向量,在标签向量中,样本类别对应的标签向量的分量为1,其余为0

交叉熵损失函数

对于softmax这种多分类问题,交叉熵定义如下

其中表示向量

的第

个分量,对某一个参数

其进行求导

所以

均方误差损失函数

softmax的均方误差损失函数定义如下

对某一个参数其进行求导

代码实现

import numpy as np

import random

def sigmoid(x):

return 1/(1+np.exp(-x))

class logistic_regression:

def __init__(self,input_size):

self.input_size=input_size+1

self.w=np.zeros((self.input_size,1))

def predict(self,x):

"""

输出预测值

:param x: (size,) 输入向量

:return: float 输出值

"""

return sigmoid(self.w.T@np.hstack((x,1)))

def fit_crossEntropy(self,X,Y,valX,valY,lr=0.01,Epoch=30):

"""

用交叉熵损失函数训练

:param X: (num,size) 训练集样本

:param Y: (num,1) 训练集标签

:param valX: (numVal,size) 验证机样本

:param valY: (numVal,1) 验证集标签

:param lr: float 学习率

:param Epoch: int 训练轮数

:return: 训练过程的loss列表

"""

num=X.shape[0]

numVal=valX.shape[0]

Loss=[]

ValLoss=[]

train_precision=[]

train_recall=[]

train_f1=[]

val_precision=[]

val_recall=[]

val_f1=[]

#训练

for epoch in range(Epoch):

loss=0

bestValloss=np.inf

bestW=self.w

TP=0

TN=0

FP=0

FN=0

for i in range(num):

y=self.predict(X[i])

if y>=0.5 and Y[i]>=0.5:

TP+=1

elif y<0.5 and Y[i]>=0.5:

FN+=1

elif y>=0.5 and Y[i]<0.5:

FP+=1

else:

TN+=1

#loss计算 交叉熵

loss-=Y[i]*np.log(y)+(1-Y[i])*np.log(1-y)

#梯度计算

dw = (y - Y[i]) * np.hstack((X[i], 1))

dw.resize((self.input_size, 1))

#参数更新

self.w-=lr*dw

#记录训练结果

Loss.append(loss[0]/num)

train_precision.append(TP/(TP+FP))

train_recall.append(TP/(TP+FN))

train_f1.append((2*train_precision[-1]*train_recall[-1])/(train_precision[-1]+train_recall[-1]))

#验证

valLoss=0

TP=0

TN=0

FP=0

FN=0

for i in range(numVal):

y=self.predict(valX[i])

if y>=0.5 and valY[i]>=0.5:

TP+=1

elif y<0.5 and valY[i]>=0.5:

FN+=1

elif y>=0.5 and valY[i]<0.5:

FP+=1

else:

TN+=1

#loss计算

valLoss-=valY[i]*np.log(y)+(1-valY[i])*np.log(1-y)

#保存验证结果

ValLoss.append(valLoss[0]/numVal)

val_precision.append(TP / (TP + FP))

val_recall.append(TP / (TP + FN))

val_f1.append((2 * val_precision[-1] * val_recall[-1]) / (val_precision[-1] + val_recall[-1]))

if ValLoss[-1]<bestValloss:

bestW=self.w.copy()

bestValloss=ValLoss[-1]

print(">> 第{}轮训练 trainLoss:{:.3f} 准确率:{:.3f} 召回率:{:.3f} f1:{:.3f} \n 验证 "

"valLoss:{:.3f} 准确率:{:.3f} 召回率:{:.3f} f1:{:.3f} ".format(epoch+1,Loss[-1],train_precision[-1],train_recall[-1],

train_f1[-1],ValLoss[-1],val_precision[-1],val_recall[-1],

val_f1[-1]))

#选择验证集表现最好的参数

self.w=bestW.copy()

return Loss,ValLoss

def test_crossEntropy(self,testX,testY):

"""

交叉熵损失函数测试

:param testX: (num,size) 测试集输入

:param testY: (num,1) 测试集标签

:return:

"""

numTest=testX.shape[0]

testLoss=0

TP = 0

TN = 0

FP = 0

FN = 0

for i in range(numTest):

y = self.predict(testX[i])

if y >= 0.5 and testY[i] >= 0.5:

TP += 1

elif y < 0.5 and testY[i] >= 0.5:

FN += 1

elif y >= 0.5 and testY[i] < 0.5:

FP += 1

else:

TN += 1

#loss计算

testLoss -= testY[i] * np.log(y) + (1 - testY[i]) * np.log(1 - y)

precision=TP / (TP + FP)

recall=TP / (TP + FN)

f1=2 * precision * recall / (precision + recall)

return testLoss/numTest,precision,recall,f1

def fit_MSE(self, X, Y, valX, valY, lr=0.01, Epoch=30):

"""

用均方误差损失函数训练

:param X: (num,size) 训练集样本

:param Y: (num,1) 训练集标签

:param valX: (numVal,size) 验证机样本

:param valY: (numVal,1) 验证集标签

:param lr: float 学习率

:param Epoch: int 训练轮数

:return: 训练过程的loss列表

"""

num = X.shape[0]

numVal = valX.shape[0]

Loss = []

ValLoss = []

train_precision = []

train_recall = []

train_f1 = []

val_precision = []

val_recall = []

val_f1 = []

# 训练

for epoch in range(Epoch):

loss = 0

bestValloss = np.inf

bestW = self.w

TP = 0

TN = 0

FP = 0

FN = 0

for i in range(num):

y = self.predict(X[i])

if y >= 0.5 and Y[i] >= 0.5:

TP += 1

elif y < 0.5 and Y[i] >= 0.5:

FN += 1

elif y >= 0.5 and Y[i] < 0.5:

FP += 1

else:

TN += 1

#loss计算 MSE

loss += (y-Y[i])**2

#梯度计算 MSE

dw = (y - Y[i]) * np.hstack((X[i], 1))*2*y*(1-y)

dw.resize((self.input_size, 1))

self.w -= lr * dw

# 记录训练结果

Loss.append(loss[0]/num)

train_precision.append(TP / (TP + FP))

train_recall.append(TP / (TP + FN))

train_f1.append((2 * train_precision[-1] * train_recall[-1]) / (train_precision[-1] + train_recall[-1]))

# 验证

valLoss = 0

TP = 0

TN = 0

FP = 0

FN = 0

for i in range(numVal):

y = self.predict(valX[i])

if y >= 0.5 and valY[i] >= 0.5:

TP += 1

elif y < 0.5 and valY[i] >= 0.5:

FN += 1

elif y >= 0.5 and valY[i] < 0.5:

FP += 1

else:

TN += 1

valLoss += (y-valY[i])**2

ValLoss.append(valLoss[0]/numVal)

val_precision.append(TP / (TP + FP))

val_recall.append(TP / (TP + FN))

val_f1.append((2 * val_precision[-1] * val_recall[-1]) / (val_precision[-1] + val_recall[-1]))

if ValLoss[-1] < bestValloss:

bestW = self.w.copy()

bestValloss = ValLoss[-1]

print(">> 第{}轮训练 trainLoss:{:.3f} 准确率:{:.3f} 召回率:{:.3f} f1:{:.3f} \n 验证 "

"valLoss:{:.3f} 准确率:{:.3f} 召回率:{:.3f} f1:{:.3f} ".format(epoch + 1, Loss[-1], train_precision[-1],

train_recall[-1],

train_f1[-1], ValLoss[-1], val_precision[-1],

val_recall[-1],

val_f1[-1]))

self.w = bestW.copy()

return Loss, ValLoss

def test_MSE(self, testX, testY):

"""

均方误差损失函数测试

:param testX: (num,size) 测试集输入

:param testY: (num,1) 测试集标签

:return:

"""

numTest = testX.shape[0]

testLoss = 0

TP = 0

TN = 0

FP = 0

FN = 0

for i in range(numTest):

y = self.predict(testX[i])

if y >= 0.5 and testY[i] >= 0.5:

TP += 1

elif y < 0.5 and testY[i] >= 0.5:

FN += 1

elif y >= 0.5 and testY[i] < 0.5:

FP += 1

else:

TN += 1

#loss计算 MSE

testLoss += (y-testY[i])**2

precision = TP / (TP + FP)

recall = TP / (TP + FN)

f1 = 2 * precision * recall / (precision + recall)

return testLoss, precision, recall, f1

class softmax_regression:

def __init__(self,input_size,output_size):

"""

:param input_size: 输入尺寸

:param output_size: 输出尺寸

"""

self.input_size=input_size+1

self.output_size=output_size

self.w=np.zeros((self.input_size,self.output_size))

def predict(self,x):

x=np.hstack((x,1))

result=np.zeros(self.output_size)

for k in range(self.output_size):

result[k]=np.exp(self.w[:, k] @ x)

result=result/np.sum(result)

return result

def fit_crossEntropy(self, X, Y, valX, valY, lr=0.01, Epoch=30):

"""

用交叉熵损失函数训练

:param X: (num,size) 训练集样本

:param Y: (num,1) 训练集标签

:param valX: (numVal,size) 验证机样本

:param valY: (numVal,1) 验证集标签

:param lr: float 学习率

:param Epoch: int 训练轮数

:return: 训练过程的loss列表

"""

num = X.shape[0]

numVal = valX.shape[0]

Loss = []

ValLoss = []

acc=[]

Valacc=[]

# 训练

for epoch in range(Epoch):

loss = 0

bestValloss = np.inf

bestW = self.w

accNum=0

for i in range(num):

y = self.predict(X[i])

#判断是否预测正确

if np.argmax(y) == int(Y[i]):

accNum+=1

onehot_Y=np.zeros(self.output_size)

onehot_Y[int(Y[i])]=1

#loss计算

loss -= onehot_Y@np.log(y)

#梯度计算

temp1=np.hstack((X[i], 1))

temp1.resize((temp1.size,1))

temp2=onehot_Y-y

temp2.resize((temp2.size,1))

dw = -temp1@temp2.T

# dw.resize((self.input_size, self.output_size))

self.w -= lr * dw

# 记录训练结果

Loss.append(loss / num)

acc.append(accNum/num)

# 验证

valLoss = 0

accNum=0

for i in range(numVal):

y = self.predict(valX[i])

if np.argmax(y) == int(valY[i]):

accNum+=1

onehot_Y=np.zeros(self.output_size)

onehot_Y[int(valY[i])]=1

valLoss -= onehot_Y@np.log(y)

ValLoss.append(valLoss / numVal)

Valacc.append(accNum/numVal)

if ValLoss[-1] < bestValloss:

bestW = self.w.copy()

bestValloss = ValLoss[-1]

print(">> 第{}轮训练 trainLoss:{:.3f} acc:{:.3f} \n 验证 "

"valLoss:{:.3f} acc:{:.3f} ".format(epoch + 1, Loss[-1], acc[-1], ValLoss[-1], Valacc[-1]))

self.w = bestW.copy()

return Loss, ValLoss

def test_crossEntropy(self, testX, testY):

"""

交叉熵损失函数测试

:param testX: (num,size) 测试集输入

:param testY: (num,1) 测试集标签

:return:

"""

numTest = testX.shape[0]

testLoss = 0

accNum=0

for i in range(numTest):

y = self.predict(testX[i])

if np.argmax(y) == int(testY[i]):

accNum += 1

onehot_Y = np.zeros(self.output_size)

onehot_Y[int(testY[i])] = 1

testLoss -= onehot_Y @ np.log(y)

return testLoss / numTest, accNum/numTest

def fit_MSE(self, X, Y, valX, valY, lr=0.005, Epoch=30):

"""

用交叉熵损失函数训练

:param X: (num,size) 训练集样本

:param Y: (num,1) 训练集标签

:param valX: (numVal,size) 验证机样本

:param valY: (numVal,1) 验证集标签

:param lr: float 学习率

:param Epoch: int 训练轮数

:return: 训练过程的loss列表

"""

num = X.shape[0]

numVal = valX.shape[0]

Loss = []

ValLoss = []

acc=[]

Valacc=[]

# 训练

for epoch in range(Epoch):

loss = 0

bestValloss = np.inf

bestW = self.w

accNum=0

for i in range(num):

y = self.predict(X[i])

if np.argmax(y) == int(Y[i]):

accNum+=1

onehot_Y=np.zeros(self.output_size)

onehot_Y[int(Y[i])]=1

#loss计算

loss += np.sum((onehot_Y-y)**2)

dw=np.zeros((self.input_size, self.output_size))

#梯度计算

for j in range(self.output_size):

dw[:,j]=2*y[j]*(sum([(y[k]-onehot_Y[k])*y[k] if k != j

else (y[k]-onehot_Y[k])*(1-y[k]) for k in range(self.output_size)]))*np.hstack((X[i], 1))

self.w -= lr * dw

# 记录训练结果

Loss.append(loss / num)

acc.append(accNum/num)

# 验证

valLoss = 0

accNum=0

for i in range(numVal):

y = self.predict(valX[i])

if np.argmax(y) == int(valY[i]):

accNum+=1

onehot_Y=np.zeros(self.output_size)

onehot_Y[int(valY[i])]=1

valLoss += np.sum((onehot_Y-y)**2)

ValLoss.append(valLoss / numVal)

Valacc.append(accNum/numVal)

if ValLoss[-1] < bestValloss:

bestW = self.w.copy()

bestValloss = ValLoss[-1]

print(">> 第{}轮训练 trainLoss:{:.3f} acc:{:.3f} \n 验证 "

"valLoss:{:.3f} acc:{:.3f} ".format(epoch + 1, Loss[-1], acc[-1], ValLoss[-1], Valacc[-1]))

self.w = bestW.copy()

return Loss, ValLoss

def test_MSE(self, testX, testY):

"""

交叉熵损失函数测试

:param testX: (num,size) 测试集输入

:param testY: (num,1) 测试集标签

:return:

"""

numTest = testX.shape[0]

testLoss = 0

accNum=0

for i in range(numTest):

y = self.predict(testX[i])

if np.argmax(y) == int(testY[i]):

accNum += 1

onehot_Y = np.zeros(self.output_size)

onehot_Y[int(testY[i])] = 1

testLoss += np.sum((onehot_Y-y)**2)

return testLoss / numTest, accNum/numTest

def split_data(X, Y, split_ratio=None):

"""

数据集划分函数

:param X: (num,size) 样本

:param Y: (num,1) 标签

:param split_ratio:切分比例,默认为6:2:2

:return: trainX,trainY,valX,valY,testX,testY

"""

if split_ratio is None:

split_ratio = [0.6, 0.2, 0.2]

num=X.shape[0]

sizeX=X.shape[1]

sizeY=Y.shape[1]

train_num=int(num*split_ratio[0])

val_num=int(num*split_ratio[1])

test_num=num-train_num-val_num

randList=random.sample(range(num),num)

trainX=np.zeros((train_num,sizeX))

valX=np.zeros((val_num,sizeX))

testX=np.zeros((test_num,sizeX))

trainY=np.zeros((train_num,sizeY))

valY=np.zeros((val_num,sizeY))

testY=np.zeros((test_num,sizeY))

k=0

for i in randList:

if k<train_num:

trainX[k,:]=X[i,:]

trainY[k,:]=Y[i,:]

elif k<train_num+val_num:

valX[k-train_num,:]=X[i,:]

valY[k-train_num,:]=Y[i,:]

else:

testX[k-train_num-val_num,:]=X[i,:]

testY[k-train_num-val_num,:]=Y[i,:]

k+=1

return trainX,trainY,valX,valY,testX,testY

实验测试

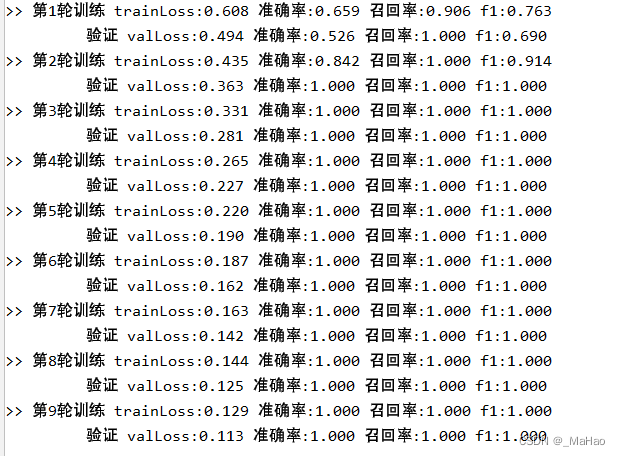

在学习时,代码中运用的是随机梯度下降,与公式推导的批量梯度下降有略微不同,使用鸢尾花数据集进行测试,先是logistic回归,由于是二分类器,所以选取其中两类样本共100个样本,首先使用交叉熵损失函数

model = logistic_regression(4)

import sklearn.datasets as skdata

data=skdata.load_iris()

X=data.data[:100]

Y=data.target[:100]

Y.resize((Y.size,1))

trainX,trainY,valX,valY,testX,testY=split_data(X,Y)

model.fit_crossEntropy(trainX,trainY,valX,valY)

print("测试集准确率:",model.test_crossEntropy(testX,testY)[1])

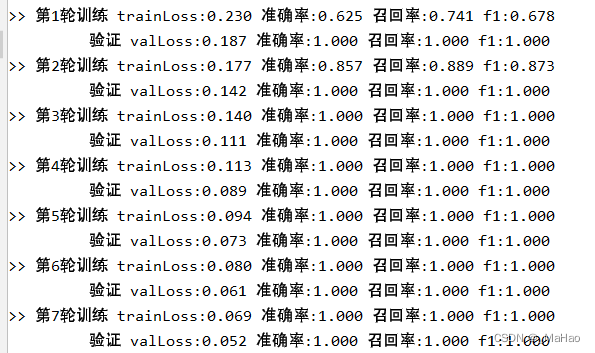

很快就收敛了,接下来是均方误差

model = logistic_regression(4)

import sklearn.datasets as skdata

data=skdata.load_iris()

X=data.data[:100]

Y=data.target[:100]

Y.resize((Y.size,1))

trainX,trainY,valX,valY,testX,testY=split_data(X,Y)

model.fit_MSE(trainX,trainY,valX,valY)

print("测试集准确率:",model.test_MSE(testX,testY)[1])

同样收敛得很快,下面是softmax回归,将鸢尾花的150个样本共三类都取出来

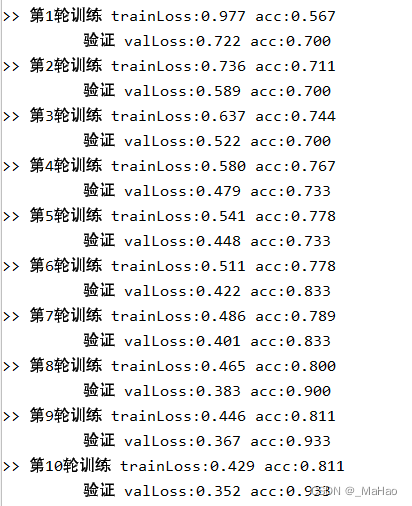

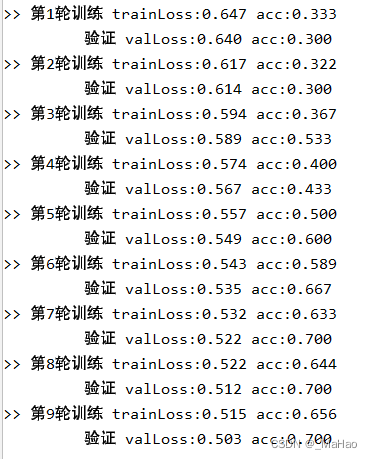

首先测试交叉熵损失函数

model = softmax_regression(4,3)

import sklearn.datasets as skdata

data=skdata.load_iris()

X=data.data

Y=data.target

Y.resize((Y.size,1))

trainX,trainY,valX,valY,testX,testY=split_data(X,Y)

model.fit_crossEntropy(trainX,trainY,valX,valY)

print("测试集准确率:",model.test_crossEntropy(testX,testY)[1])

均方误差损失函数

model = softmax_regression(4,3)

import sklearn.datasets as skdata

data=skdata.load_iris()

X=data.data

Y=data.target

Y.resize((Y.size,1))

trainX,trainY,valX,valY,testX,testY=split_data(X,Y)

model.fit_MSE(trainX,trainY,valX,valY)

print("测试集准确率:",model.test_MSE(testX,testY)[1])

![]()

均方误差损失函数在这个情况下表现得不算太好 ,具体原因应该是陷入了局部最优,观察一下MSE损失函数可以发现MSE损失函数的极值点有很多个,而交叉熵损失函数有且仅有一个极值点,这就导致使用MSE损失函数训练时无法保证能找到最优解。

更多推荐

已为社区贡献2条内容

已为社区贡献2条内容

所有评论(0)