多目标跟踪(三) ByteTrack —— 利用低分检测框信息Byte算法

系列文章目录文章目录系列文章目录前言一、pandas是什么?二、使用步骤1.引入库2.读入数据总结前言多目标跟踪自DeepSort后,有一段时间类似FairMoT这种统一了识别和检测的网络被研究的比较热门。不过就在去年的年底发布的ByteTrack,其则是DeepSort的上位替代,整体实现思想也是十分简单,但十分work的算法。基于作者发布的代码其实是在YOLOX的基础上魔改的,然后个人认为比较

目录

前言

多目标跟踪自DeepSort后,有一段时间类似FairMoT这种统一了识别和检测的网络被研究的比较热门。不过就在去年的年底发布的ByteTrack,其则是DeepSort的上位替代,整体实现思想也是十分简单,但十分work的算法。

基于作者发布的代码其实是在YOLOX的基础上魔改的,然后个人认为比较冗余,而且如果就使用和学习来说不太方便,所以这里也放一个自己整理后的版本(只有det部分)

零、代码使用

基本的使用方式已经在Readme里写了,应该是没有太大问题的,这里主要讲讲怎么去更换检测器。我的代码里和官方用的都是YOLOX,如果想要更换为像YOLOV5的话,只需要将YOLOV5检测出来的结果送入跟踪器中更新即可。跟踪器的输入支持像YOLOX中[x1,y1,x2,y2,score1,score2,class]和一般YOLO中的[x1,y1,x2,y2,score,class]的格式

伪代码如下

# im为经过处理后输入网络的图片,im0s为原始图片,(800, 1440)则为输入网络时的尺寸

for im,im0s in dataset:

outputs = yolo.detect_bounding_box(im, im0s)

# 取0为网络输出格式原因

online_targets = tracker.update(outputs[0], [im0s.shape[0], im0s.shape[1]], (800, 1440))

.......

二、Byte算法

Byte的思想我个人认为则是ByteTrack中最精华的部分了,下面讲讲我对此的理解。

其他传统算法的弊端

在Byte之前,用的比较多的则为Sort和DeepSort算法,两者都为基于卡尔曼滤波器的跟踪器算法,DeepSort相较与Sort的最大改进则在与DeepSort中引进了RE-ID网络来解决ID-SW的问题。他们两者的共同思想,简单的说则为利用上游检测网络检测出来结果,然后送入卡尔曼滤波器中进行跟踪。

这里提出的问题在与,上游网络一般设置的检测置信度阈值为0.5左右,即当检测框检测出来的置信度低于0.5时则会之间被丢弃。这里如果仅仅对于目标检测来说是没有问题,但对于图片流来说,我们相对于拥有了更多先验信息,如果仅仅凭借一个置信度阈值就丢弃检测的框,会不会显得不够合理。

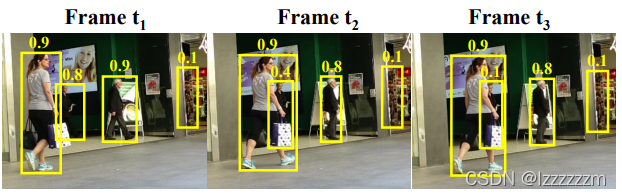

以上图举例的话,这里我们假设把阈值设置为0.3,在第一帧和第二帧中都可以将图片中的三个行人正确识别,但当第三帧中出现了遮挡现象,其中一个行人的置信度则变为0.1,这样这个行人则会在遮挡的情况下,失去了跟踪信息,但对与目标检测来说,他确实被检测出来了,只是置信度较低而已。

Byte算法则是用来解决如何充分利用,由于遮挡导致置信度变低的得分框问题。

简单但Work的Byte

Byte的整体思想十分简单,既然得分低的框是有用的,那么我就在目标检测中把得分低的框也保留下来,再做处理。(所以在ByteTrack中对与目标检测网络的预测置信度阈值设置为了0.001)

具体的处理方法则为下面这个伪代码图

Byte中,将得分框按照一定阈值划分为高分框和低分框。对于高分框来说按照正常的方法送入跟踪器,并使用IOU计算代价矩阵,然后利用匈牙利算法进行分配。

而对于低分框,则利用未匹配上的框(未匹配上就说明上一帧是匹配上的)和低分的框进行IOU匹配,然后同样利用匈牙利算法进行分配。

整体的思想就是这么的简单,我个人认为他之所以Work的原因在与,作者假设:对于视频或者图片流来说,未匹配上的框,大概率是这帧这个物体被遮挡或者走出画面了导致的。所以作者利用低分框和未匹配上的框进行再匹配,来减缓遮挡导致目标丢失的问题。

对于高分框来说,作者也说,其实也可以加入像DeepSort中的RE-ID网络,来进行apperance匹配,而不是仅仅利用IOU进行匹配。

整段最关键的代码如下,也是十分的易读好理解的。

def update(self, output_results, img_info, img_size):

self.frame_id += 1

activated_starcks = []

refind_stracks = []

lost_stracks = []

removed_stracks = []

# outputbox【batchsize, x1, y1,x2, y2, score, class】

if output_results.shape[1] == 5:

scores = output_results[:, 4]

bboxes = output_results[:, :4]

# outputbox【batchsize, x1, y1,x2, y2, score1,score2, class】

else:

output_results = output_results.cpu().numpy()

scores = output_results[:, 4] * output_results[:, 5]

bboxes = output_results[:, :4] # x1y1x2y2

img_h, img_w = img_info[0], img_info[1]

scale = min(img_size[0] / float(img_h), img_size[1] / float(img_w))

bboxes /= scale

# 根据框的得分进行划分

# 高分框

remain_inds = scores > self.args.track_thresh

# 低分框

inds_low = scores > 0.1

inds_high = scores < self.args.track_thresh

inds_second = np.logical_and(inds_low, inds_high)

dets_second = bboxes[inds_second]

dets = bboxes[remain_inds]

scores_keep = scores[remain_inds]

scores_second = scores[inds_second]

if len(dets) > 0:

'''Detections'''

detections = [STrack(STrack.tlbr_to_tlwh(tlbr), s) for

(tlbr, s) in zip(dets, scores_keep)]

else:

detections = []

''' Add newly detected tracklets to tracked_stracks'''

unconfirmed = []

tracked_stracks = [] # type: list[STrack]

for track in self.tracked_stracks:

if not track.is_activated:

unconfirmed.append(track)

else:

tracked_stracks.append(track)

''' Step 2: First association, with high score detection boxes'''

strack_pool = joint_stracks(tracked_stracks, self.lost_stracks)

# Predict the current location with KF

STrack.multi_predict(strack_pool)

# IOU+linear_assignment

dists = matching.iou_distance(strack_pool, detections)

if not self.args.mot20:

dists = matching.fuse_score(dists, detections)

matches, u_track, u_detection = matching.linear_assignment(dists, thresh=self.args.match_thresh)

for itracked, idet in matches:

track = strack_pool[itracked]

det = detections[idet]

if track.state == TrackState.Tracked:

track.update(detections[idet], self.frame_id)

activated_starcks.append(track)

else:

track.re_activate(det, self.frame_id, new_id=False)

refind_stracks.append(track)

''' Step 3: Second association, with low score detection boxes'''

# association the untrack to the low score detections

if len(dets_second) > 0:

'''Detections'''

detections_second = [STrack(STrack.tlbr_to_tlwh(tlbr), s) for

(tlbr, s) in zip(dets_second, scores_second)]

else:

detections_second = []

r_tracked_stracks = [strack_pool[i] for i in u_track if strack_pool[i].state == TrackState.Tracked]

# IOU+linear_assignment

dists = matching.iou_distance(r_tracked_stracks, detections_second)

matches, u_track, u_detection_second = matching.linear_assignment(dists, thresh=0.5)

for itracked, idet in matches:

track = r_tracked_stracks[itracked]

det = detections_second[idet]

if track.state == TrackState.Tracked:

track.update(det, self.frame_id)

activated_starcks.append(track)

else:

track.re_activate(det, self.frame_id, new_id=False)

refind_stracks.append(track)

for it in u_track:

track = r_tracked_stracks[it]

if not track.state == TrackState.Lost:

track.mark_lost()

lost_stracks.append(track)

'''Deal with unconfirmed tracks, usually tracks with only one beginning frame'''

detections = [detections[i] for i in u_detection]

dists = matching.iou_distance(unconfirmed, detections)

if not self.args.mot20:

dists = matching.fuse_score(dists, detections)

matches, u_unconfirmed, u_detection = matching.linear_assignment(dists, thresh=0.7)

for itracked, idet in matches:

unconfirmed[itracked].update(detections[idet], self.frame_id)

activated_starcks.append(unconfirmed[itracked])

for it in u_unconfirmed:

track = unconfirmed[it]

track.mark_removed()

removed_stracks.append(track)

""" Step 4: Init new stracks"""

for inew in u_detection:

track = detections[inew]

if track.score < self.det_thresh:

continue

track.activate(self.kalman_filter, self.frame_id)

activated_starcks.append(track)

""" Step 5: Update state"""

for track in self.lost_stracks:

if self.frame_id - track.end_frame > self.max_time_lost:

track.mark_removed()

removed_stracks.append(track)

# print('Ramained match {} s'.format(t4-t3))

self.tracked_stracks = [t for t in self.tracked_stracks if t.state == TrackState.Tracked]

self.tracked_stracks = joint_stracks(self.tracked_stracks, activated_starcks)

self.tracked_stracks = joint_stracks(self.tracked_stracks, refind_stracks)

self.lost_stracks = sub_stracks(self.lost_stracks, self.tracked_stracks)

self.lost_stracks.extend(lost_stracks)

self.lost_stracks = sub_stracks(self.lost_stracks, self.removed_stracks)

self.removed_stracks.extend(removed_stracks)

self.tracked_stracks, self.lost_stracks = remove_duplicate_stracks(self.tracked_stracks, self.lost_stracks)

# get scores of lost tracks

output_stracks = [track for track in self.tracked_stracks if track.is_activated]

return output_stracks实验结果

可以看到,13号框,在被遮挡的情况下,对于这个框的信息都没有出现丢失再重新追踪的情况,很好的解决了低分框利用的问题。

总结

整篇文章较短,原因也在与ByteTrack确实也十分简单但work,在深度学习模型日趋庞大且复杂的现在,也许像ByteTrack或者最近ConvNext这种简单的想法,但work又会走向主流。

更多推荐

已为社区贡献9条内容

已为社区贡献9条内容

所有评论(0)