How to split a dataset into a training set and a test set


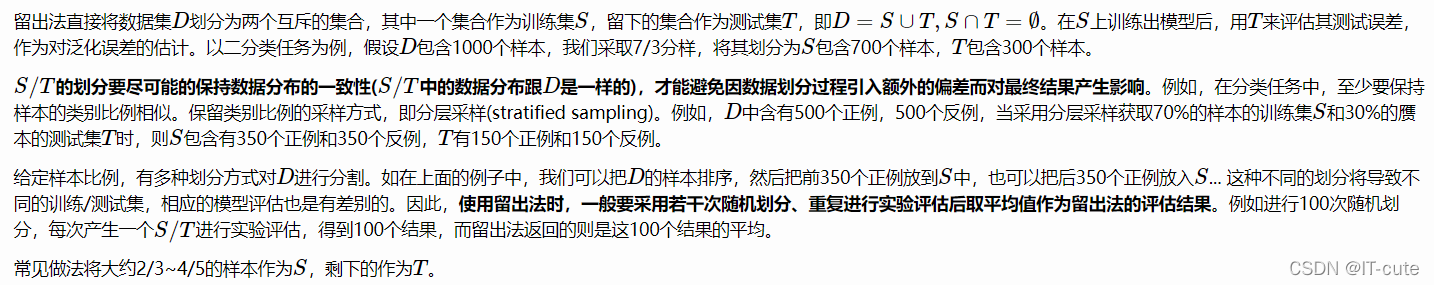

I. Hold-out

Call sklearn.model_selection.train_test_split to split the data into a training set and a test set by proportion:

    import numpy as np
    from sklearn.model_selection import train_test_split

    # Toy data: 5 samples with 2 features each, and one label per sample.
    X, Y = np.arange(10).reshape((5, 2)), range(5)
    print("X=", X)
    print("Y=", Y)

    # Hold out 30% of the samples as the test set; the fixed seed makes the split reproducible.
    X_train, X_test, Y_train, Y_test = train_test_split(
        X, Y, test_size=0.30, random_state=42)
    print("X_train=", X_train)
    print("X_test=", X_test)
    print("Y_train=", Y_train)
    print("Y_test=", Y_test)

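Here test_size=0.30 assigns about 30% of the samples to the test set T and the remaining 70% to the training set S. With only 5 samples, scikit-learn rounds the test count up to 2, so the actual split is 3 training samples and 2 test samples. Output: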

    X= [[0 1]
     [2 3]
     [4 5]
     [6 7]
     [8 9]]
    Y= range(0, 5)
    X_train= [[4 5]
     [0 1]
     [6 7]]
    X_test= [[2 3]
     [8 9]]
    Y_train= [2, 0, 3]
    Y_test= [1, 4]
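To see how the test_size proportion turns into actual sample counts, the sketch below repeats the split for two dataset sizes and reports only the sizes. The helper name `holdout_sizes` is hypothetical and exists only for this illustration; the toy data follows the same pattern as the example above.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def holdout_sizes(n_samples, test_size):
    """Return (n_train, n_test) for a hold-out split of n_samples toy rows."""
    # Toy feature matrix with 2 columns, as in the example above.
    X = np.arange(n_samples * 2).reshape((n_samples, 2))
    X_train, X_test = train_test_split(
        X, test_size=test_size, random_state=42)
    return len(X_train), len(X_test)


# 5 samples at 30%: the fractional test count (1.5) is rounded up.
print(holdout_sizes(5, 0.30))
# 8 samples at 25%: the test count is a whole number, so nothing is rounded.
print(holdout_sizes(8, 0.25))
```

On small datasets the effective ratio can therefore differ noticeably from the requested one, which is worth checking before comparing results across experiments.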

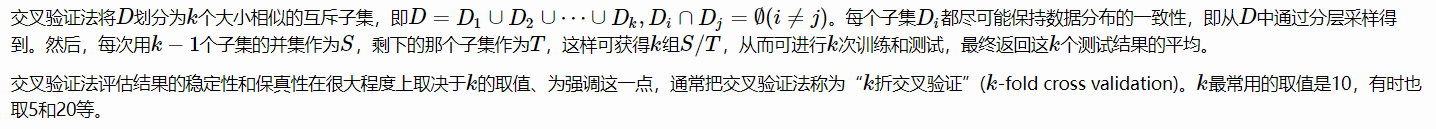

II. Cross-validation

Call sklearn.model_selection.KFold to split the data into training and test sets by k-fold cross-validation:

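    import numpy as np
    from sklearn.model_selection import KFold

    # Toy data: 5 samples with 2 features each.
    X = np.arange(10).reshape((5, 2))
    print("X=", X)

    # Each of the k folds serves as the test set once; the rest is the training set.
    kf = KFold(n_splits=2)
    for train_index, test_index in kf.split(X):
        print('X_train:%s ' % X[train_index])
        print('X_test: %s ' % X[test_index])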

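Here n_splits=2 sets k=2. Because 5 samples do not divide evenly into 2 folds, the first fold holds 3 samples and the second holds 2. Output: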

    X= [[0 1]
     [2 3]
     [4 5]
     [6 7]
     [8 9]]
    X_train:[[6 7]
     [8 9]]
    X_test: [[0 1]
     [2 3]
     [4 5]]
    X_train:[[0 1]
     [2 3]
     [4 5]]
    X_test: [[6 7]
     [8 9]]
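The key property of the k-fold split is that the folds partition the data: every sample lands in the test set exactly once, and within one round the training and test indices never overlap. A minimal sketch that checks this on the same toy data (the names `fold_sizes` and `test_seen` are introduced here only for illustration):

```python
import numpy as np
from sklearn.model_selection import KFold

X = np.arange(10).reshape((5, 2))
kf = KFold(n_splits=2)

fold_sizes = []  # number of test samples in each fold
test_seen = []   # every index that was used as a test sample

for train_index, test_index in kf.split(X):
    # Within one round, training and test indices must not overlap.
    assert set(train_index).isdisjoint(test_index)
    fold_sizes.append(len(test_index))
    test_seen.extend(test_index.tolist())

print("fold sizes:", fold_sizes)
print("test indices over all folds:", sorted(test_seen))
```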

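When the dataset D contains m samples and we set k = m, we get a special case of cross-validation: leave-one-out (LOO). The training set S used by leave-one-out has only one sample fewer than D, so in the vast majority of cases the model being evaluated is very close to the one that would be trained on all of D. For this reason, leave-one-out estimates are generally considered fairly accurate. The drawbacks are that the computational cost becomes prohibitive for large datasets, since m models have to be trained, and that leave-one-out is not guaranteed to be more accurate than other methods.

Call sklearn.model_selection.LeaveOneOut to split the data into training and test sets by leave-one-out: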

    import numpy as np
    from sklearn.model_selection import LeaveOneOut

    # Toy data: 5 samples with 2 features each.
    X = np.arange(10).reshape((5, 2))
    print("X=", X)

    # Each round holds out exactly one sample as the test set.
    loo = LeaveOneOut()
    for train_index, test_index in loo.split(X):
        print('X_train:%s ' % X[train_index])
        print('X_test: %s ' % X[test_index])

Output:

    X= [[0 1]
     [2 3]
     [4 5]
     [6 7]
     [8 9]]
    X_train:[[2 3]
     [4 5]
     [6 7]
     [8 9]]
    X_test: [[0 1]]
    X_train:[[0 1]
     [4 5]
     [6 7]
     [8 9]]
    X_test: [[2 3]]
    X_train:[[0 1]
     [2 3]
     [6 7]
     [8 9]]
    X_test: [[4 5]]
    X_train:[[0 1]
     [2 3]
     [4 5]
     [8 9]]
    X_test: [[6 7]]
    X_train:[[0 1]
     [2 3]
     [4 5]
     [6 7]]
    X_test: [[8 9]]
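The statement that leave-one-out is just k-fold with k = m can be checked directly. The sketch below, on the same toy data, compares the index splits produced by LeaveOneOut with those of KFold(n_splits=m) without shuffling; the variable names are chosen here for illustration only.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut

X = np.arange(10).reshape((5, 2))
m = len(X)  # number of samples in D

# Collect (train indices, test indices) for every round as plain lists.
loo_splits = [(tr.tolist(), te.tolist())
              for tr, te in LeaveOneOut().split(X)]
kf_splits = [(tr.tolist(), te.tolist())
             for tr, te in KFold(n_splits=m).split(X)]

# Both strategies should yield m rounds with identical index splits.
same_splits = loo_splits == kf_splits
print("rounds:", len(loo_splits), "identical to KFold(k=m):", same_splits)
```

The number of rounds equals m, which is also why the cost grows quickly on large datasets: one model has to be trained per sample.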

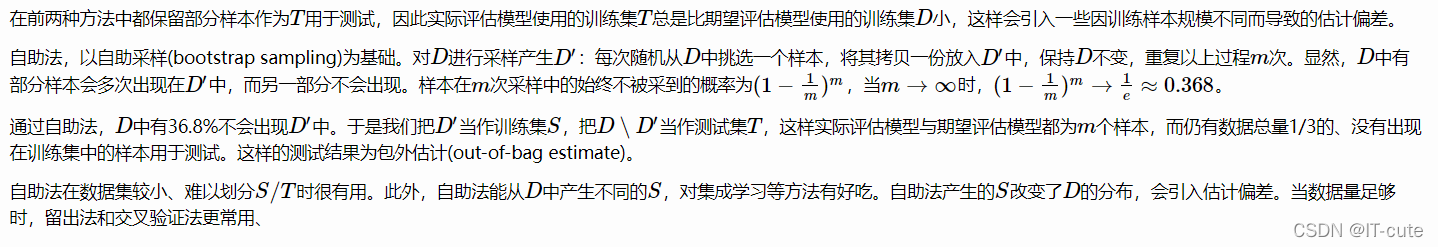

III. Bootstrapping
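A minimal sketch of a bootstrap split, assuming the common formulation: draw m samples from D with replacement to form the training set S, and use the samples that were never drawn (the out-of-bag samples) as the test set T. The helper `bootstrap_split` and its `seed` parameter are hypothetical names introduced for this illustration, not part of scikit-learn.

```python
import numpy as np


def bootstrap_split(n_samples, seed=0):
    """Return (train_index, test_index) for one bootstrap round."""
    rng = np.random.default_rng(seed)
    # Draw n_samples indices with replacement; duplicates are expected.
    train_index = rng.integers(0, n_samples, size=n_samples)
    # Out-of-bag samples: indices that were never drawn for training.
    test_index = np.setdiff1d(np.arange(n_samples), train_index)
    return train_index, test_index


X = np.arange(10).reshape((5, 2))
train_index, test_index = bootstrap_split(len(X))
print('X_train:%s ' % X[train_index])
print('X_test: %s ' % X[test_index])
```

Unlike the previous two methods, the training set keeps the size of D, but some samples appear more than once. On a dataset this small the out-of-bag set can occasionally be empty, so the sketch is better suited to larger m.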
