[PyTorch Summary] Using tqdm


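Introduction

Tqdm is a Python progress bar library that adds a progress indicator to long-running Python loops. You only need to wrap any iterable with it. It is fast and highly extensible.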

Installation

```bash
pip install tqdm
```

Usage

1. Passing an iterable

```python
import time

from tqdm import tqdm

for i in tqdm(range(1000)):
    time.sleep(0.01)  # the bar advances every 0.01 s; total time is about 1000 * 0.01 = 10 s
```

Output:

```text
100%|██████████| 1000/1000 [00:10<00:00, 93.21it/s]
```
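tqdm takes the total from `len()` of the wrapped object. A generator has no length, so unless you pass `total` yourself tqdm can only show a count and a rate. The sketch below shows this. `read_records` is a hypothetical generator, and `file=io.StringIO()` is only there to keep the bar out of the terminal:

```python
import io

from tqdm import tqdm


def read_records(n):
    """Hypothetical generator standing in for any iterable without len()."""
    for i in range(n):
        yield i * i


buf = io.StringIO()  # send the bars to a buffer instead of stderr

# Without total: tqdm cannot call len() on a generator, so total stays None
no_total = tqdm(read_records(5), file=buf)
items_a = list(no_total)

# With total: the bar can show a percentage and an ETA again
with_total = tqdm(read_records(5), total=5, file=buf)
items_b = list(with_total)

print(no_total.total, with_total.total, with_total.n)  # None 5 5
```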

Using `trange`

`trange(i)` is shorthand for `tqdm(range(i))`.

```python
import time

from tqdm import trange

for i in trange(1000):
    time.sleep(0.01)
```

Output:

```text
100%|██████████| 1000/1000 [00:10<00:00, 93.21it/s]
```
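`trange` passes its positional arguments straight to `range`, so start, stop and step also work. A small sketch (the step values are arbitrary):

```python
import io

from tqdm import trange

buf = io.StringIO()  # keep the demo bar out of the terminal

bar = trange(0, 10, 2, file=buf)  # same as tqdm(range(0, 10, 2))
seen = []
for i in bar:
    seen.append(i)

print(seen, bar.total)  # [0, 2, 4, 6, 8] 5
```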

2. Setting a description for the progress bar

If you create the tqdm object outside the for loop, you can print extra information on the bar from inside the loop:

```python
import time

from tqdm import tqdm

pbar = tqdm(["a", "b", "c", "d"])
for char in pbar:
    pbar.set_description("Processing %s" % char)  # set the description
    time.sleep(1)  # each task takes 1 s
```

Output:

```text
0%| | 0/4 [00:00<?, ?it/s]
Processing a: 0%| | 0/4 [00:00<?, ?it/s]
Processing a: 25%|██▌ | 1/4 [00:01<00:03, 1.01s/it]
Processing b: 25%|██▌ | 1/4 [00:01<00:03, 1.01s/it]
Processing b: 50%|█████ | 2/4 [00:02<00:02, 1.01s/it]
Processing c: 50%|█████ | 2/4 [00:02<00:02, 1.01s/it]
Processing c: 75%|███████▌ | 3/4 [00:03<00:01, 1.01s/it]
Processing d: 75%|███████▌ | 3/4 [00:03<00:01, 1.01s/it]
Processing d: 100%|██████████| 4/4 [00:04<00:00, 1.01s/it]
```
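If the label stays the same throughout the loop, you can pass it once through the `desc` argument and skip calling `set_description` on every iteration. The sketch below compares the two approaches. It writes to an in-memory buffer so the bar text can be inspected afterwards, and the item list is the same one used in the example above:

```python
import io

from tqdm import tqdm

buf = io.StringIO()

# Fixed label: set once at construction time
fixed = tqdm(["a", "b", "c", "d"], desc="Processing", file=buf)
for char in fixed:
    pass

# Changing label: update it per item, as in the example above
dynamic = tqdm(["a", "b", "c", "d"], file=buf)
for char in dynamic:
    dynamic.set_description("Processing %s" % char)  # stored as "Processing <char>: "

print(repr(fixed.desc), repr(dynamic.desc))  # 'Processing' 'Processing d: '
```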

3. Controlling progress manually

```python
import time

from tqdm import tqdm

with tqdm(total=200) as pbar:
    for i in range(20):
        pbar.update(10)  # advance the bar by 10 units
        time.sleep(0.1)
```

Output (the bar is updated 20 times in total):

```text
0%| | 0/200 [00:00<?, ?it/s]
10%|█ | 20/200 [00:00<00:00, 199.48it/s]
15%|█▌ | 30/200 [00:00<00:01, 150.95it/s]
20%|██ | 40/200 [00:00<00:01, 128.76it/s]
25%|██▌ | 50/200 [00:00<00:01, 115.72it/s]
30%|███ | 60/200 [00:00<00:01, 108.84it/s]
35%|███▌ | 70/200 [00:00<00:01, 104.22it/s]
40%|████ | 80/200 [00:00<00:01, 101.42it/s]
45%|████▌ | 90/200 [00:00<00:01, 98.83it/s]
50%|█████ | 100/200 [00:00<00:01, 97.75it/s]
55%|█████▌ | 110/200 [00:01<00:00, 97.00it/s]
60%|██████ | 120/200 [00:01<00:00, 96.48it/s]
65%|██████▌ | 130/200 [00:01<00:00, 96.05it/s]
70%|███████ | 140/200 [00:01<00:00, 95.25it/s]
75%|███████▌ | 150/200 [00:01<00:00, 94.94it/s]
80%|████████ | 160/200 [00:01<00:00, 95.08it/s]
85%|████████▌ | 170/200 [00:01<00:00, 93.52it/s]
90%|█████████ | 180/200 [00:01<00:00, 94.28it/s]
95%|█████████▌| 190/200 [00:01<00:00, 94.43it/s]
100%|██████████| 200/200 [00:02<00:00, 94.75it/s]
```
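The step passed to `update(n)` does not have to be constant. A common pattern is to read data in chunks and advance the bar by the size of each chunk. The sketch below assumes an in-memory payload of 10,000 bytes and a hypothetical chunk size of 4,096 bytes. Passing `unit="B"` and `unit_scale=True` makes tqdm label the counts as bytes with SI prefixes:

```python
import io

from tqdm import tqdm

payload = io.BytesIO(b"x" * 10_000)  # stand-in for a file or network stream
chunk_size = 4096                    # hypothetical chunk size
out = io.StringIO()                  # keep the bar out of the terminal

received = 0
with tqdm(total=10_000, unit="B", unit_scale=True, file=out) as pbar:
    while True:
        chunk = payload.read(chunk_size)
        if not chunk:
            break
        received += len(chunk)
        pbar.update(len(chunk))  # steps are 4096, 4096, then 1808

print(received, pbar.n)  # 10000 10000
```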

4. The `tqdm.write` method

`tqdm.write` prints a message without overlapping the progress bar. A plain `print` inside the loop can break the bar's line.

```python
import time

from tqdm import tqdm, trange

bar = trange(10)
for i in bar:
    time.sleep(0.1)
    if not (i % 3):
        tqdm.write("Done task %i" % i)
```

Output:

```text
Done task 0
0%| | 0/10 [00:10<?, ?it/s]
0%| | 0/10 [00:00<?, ?it/s]
10%|████████▎ | 1/10 [00:00<00:01, 8.77it/s]
20%|████████████████▌ | 2/10 [00:00<00:00, 9.22it/s]
Done task 3
0%| | 0/10 [00:10<?, ?it/s]
30%|████████████████████████▉ | 3/10 [00:00<00:01, 6.91it/s]
40%|█████████████████████████████████▏ | 4/10 [00:00<00:00, 9.17it/s]
50%|█████████████████████████████████████████▌ | 5/10 [00:00<00:00, 9.28it/s]
Done task 6
0%| | 0/10 [00:10<?, ?it/s]
60%|█████████████████████████████████████████████████▊ | 6/10 [00:00<00:00, 7.97it/s]
70%|██████████████████████████████████████████████████████████ | 7/10 [00:00<00:00, 9.25it/s]
80%|██████████████████████████████████████████████████████████████████▍ | 8/10 [00:00<00:00, 9.31it/s]
Done task 9
0%| | 0/10 [00:11<?, ?it/s]
90%|██████████████████████████████████████████████████████████████████████████▋ | 9/10 [00:01<00:00, 8.37it/s]
100%|██████████████████████████████████████████████████████████████████████████████████| 10/10 [00:01<00:00, 9.28it/s]
```
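One way to see how `tqdm.write` interacts with the bar is to send both the bar and the messages to the same stream. This sketch writes to an in-memory buffer only so the result can be inspected. `tqdm.write` accepts a `file` argument for this purpose:

```python
import io

from tqdm import tqdm, trange

buf = io.StringIO()

for i in trange(10, file=buf):
    if not (i % 3):
        # clears the bar, writes the message on its own line, then redraws the bar
        tqdm.write("Done task %i" % i, file=buf)

text = buf.getvalue()
print(text.count("Done task"))  # 4 (tasks 0, 3, 6 and 9)
```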

5. Setting the progress step manually

The `update` method controls how far the bar advances on each call:

```python
import time

from tqdm import tqdm

# total sets the overall length of the progress bar
with tqdm(total=100) as pbar:
    for i in range(100):
        time.sleep(0.1)
        pbar.update(1)  # advance the bar by 1 on each call
```

Output:

```text
0%| | 0/100 [00:00<?, ?it/s]
1%| | 1/100 [00:00<00:09, 9.98it/s]
2%|▏ | 2/100 [00:00<00:09, 9.83it/s]
3%|▎ | 3/100 [00:00<00:10, 9.65it/s]
4%|▍ | 4/100 [00:00<00:10, 9.53it/s]
5%|▌ | 5/100 [00:00<00:09, 9.55it/s]
...
100%|██████████| 100/100 [00:10<00:00, 9.45it/s]
```

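You can get the same effect without a `with` statement:

```python
import time

from tqdm import tqdm

# total sets the overall length of the progress bar
pbar = tqdm(total=100)
for i in range(100):
    time.sleep(0.05)
    pbar.update(1)  # advance the bar by 1 on each call

# don't forget to close the bar to release its resources
pbar.close()
```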
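One reason to prefer the `with` form: if the loop raises an exception, a bare `pbar.close()` placed after the loop never runs. Without `with`, a `try`/`finally` block gives the same guarantee. The sketch below uses a deliberately failing loop (the `ValueError` is contrived). The final check assumes that tqdm sets the bar's `disable` attribute to `True` when the bar is closed:

```python
import io

from tqdm import tqdm

buf = io.StringIO()
pbar = tqdm(total=100, file=buf)
try:
    for i in range(100):
        if i == 50:
            raise ValueError("simulated failure")  # contrived error for the demo
        pbar.update(1)
except ValueError:
    pass  # handle or log the error here
finally:
    pbar.close()  # runs whether or not the loop raised

print(pbar.n, pbar.disable)  # 50 True
```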

6. Customizing the information shown on the bar

Use `set_description` and `set_postfix` to set the text displayed around the bar:

```python
import time
from random import random, randint

from tqdm import trange

with trange(10) as t:
    for i in t:
        # text shown to the left of the bar
        t.set_description("GEN %i" % i)
        # text shown to the right of the bar
        t.set_postfix(loss=random(), gen=randint(1, 999), str="h", lst=[1, 2])
        time.sleep(0.1)
```
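`set_postfix` formats numbers compactly: a long float is shown with three significant digits when that form is shorter. If you want full control over the text, `set_postfix_str` takes a preformatted string instead. A short sketch with made-up loss and accuracy values:

```python
import io

from tqdm import tqdm

buf = io.StringIO()
with tqdm(total=1, file=buf) as pbar:
    pbar.set_postfix(loss=0.123456, acc=0.9)
    formatted = pbar.postfix  # contains "loss=0.123" rather than the full float

    pbar.set_postfix_str("loss=0.1235 | acc=90.0%")
    custom = pbar.postfix

    pbar.update(1)

print(formatted)
print(custom)  # loss=0.1235 | acc=90.0%
```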

Using tqdm in deep learning

Below is a handwritten digit recognition (MNIST) example:

```python
import torch
import torch.nn as nn
import torch.optim as optim
import torch.nn.functional as F
from torch.utils.data import DataLoader
import torchvision.datasets as datasets
import torchvision.transforms as transforms
from tqdm import tqdm


class CNN(nn.Module):
    def __init__(self, in_channels=1, num_classes=10):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        self.pool = nn.MaxPool2d(kernel_size=(2, 2), stride=(2, 2))
        self.conv2 = nn.Conv2d(in_channels=8, out_channels=16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        # two 2x2 poolings shrink the 28x28 MNIST images to 7x7
        self.fc1 = nn.Linear(16 * 7 * 7, num_classes)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = self.pool(x)
        x = F.relu(self.conv2(x))
        x = self.pool(x)
        x = x.reshape(x.shape[0], -1)  # flatten to (batch, 16 * 7 * 7)
        x = self.fc1(x)
        return x


# Set device
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)

# Hyperparameters
in_channels = 1
num_classes = 10
learning_rate = 0.001
batch_size = 64
num_epochs = 5

# Load Data
train_dataset = datasets.MNIST(root="dataset/", train=True, transform=transforms.ToTensor(), download=True)
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_dataset = datasets.MNIST(root="dataset/", train=False, transform=transforms.ToTensor(), download=True)
# the test loader must use test_dataset; evaluation data does not need shuffling
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

# Initialize network
model = CNN(in_channels=in_channels, num_classes=num_classes).to(device)

# Loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=learning_rate)

# Train Network
for epoch in range(num_epochs):
    # for data, targets in tqdm(train_loader, leave=False):  # keep the progress on a single line
    for data, targets in tqdm(train_loader):
        # Get data to cuda if possible
        data = data.to(device=device)
        targets = targets.to(device=device)

        # forward
        scores = model(data)
        loss = criterion(scores, targets)

        # backward
        optimizer.zero_grad()
        loss.backward()

        # gradient descent or adam step
        optimizer.step()
```

If `train_loader` is not wrapped in tqdm, nothing is displayed during training. With tqdm, the output looks like this:

```text
100%|██████████| 938/938 [00:06<00:00, 152.23it/s]
100%|██████████| 938/938 [00:06<00:00, 153.74it/s]
100%|██████████| 938/938 [00:06<00:00, 155.11it/s]
100%|██████████| 938/938 [00:06<00:00, 153.08it/s]
100%|██████████| 938/938 [00:06<00:00, 153.57it/s]
```

There is one progress bar for each of the 5 epochs.
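The 938 in each bar is the number of batches per epoch. The MNIST training set has 60,000 images, and with `batch_size=64` and `DataLoader`'s default `drop_last=False` the last, partial batch is kept, so the count rounds up. A quick check in plain Python:

```python
import math

num_train_images = 60_000  # size of the MNIST training set
batch_size = 64

full_batches = num_train_images // batch_size  # 937 full batches of 64
last_batch = num_train_images % batch_size     # 32 images left over
batches_per_epoch = math.ceil(num_train_images / batch_size)

print(full_batches, last_batch, batches_per_epoch)  # 937 32 938
```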

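If you want the progress to stay on a single line, add `leave=False` to the tqdm call. Each epoch's bar is then removed when it finishes, and the next epoch's bar is drawn in its place:

```python
for data, targets in tqdm(train_loader, leave=False):  # keep the progress on a single line
```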
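A related pattern uses an outer bar over epochs and an inner, temporary bar over batches. `leave=False` removes each inner bar when its epoch ends, and `position` keeps the two bars on separate lines. In this sketch a plain list stands in for `train_loader`; the batch list and epoch count are hypothetical:

```python
import io

from tqdm import tqdm, trange

fake_loader = list(range(20))  # hypothetical stand-in for train_loader (20 "batches")
num_epochs = 3
buf = io.StringIO()            # keep the demo bars out of the terminal

steps = 0
epoch_bar = trange(num_epochs, desc="Epochs", position=0, file=buf)
for epoch in epoch_bar:
    # the inner bar is cleared when the epoch finishes because leave=False
    for batch in tqdm(fake_loader, desc=f"Epoch {epoch + 1}", position=1, leave=False, file=buf):
        steps += 1  # a real loop would run the forward/backward pass here

print(steps, epoch_bar.n)  # 60 3
```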

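Note

Wrapping `train_loader` in tqdm this way does not give you the batch index. To get it, wrap `enumerate(train_loader)` instead. An `enumerate` object has no length, so pass `total=len(train_loader)` to let tqdm show the percentage:

```python
for index, (data, targets) in tqdm(enumerate(train_loader), total=len(train_loader), leave=True):
```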
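You can also put `tqdm` inside `enumerate` instead of around it. tqdm then wraps the loader directly and reads its length itself, so no `total` is needed. In this sketch a list stands in for `train_loader`, and the data is hypothetical:

```python
import io

from tqdm import tqdm

fake_loader = [("x0", 0), ("x1", 1), ("x2", 2)]  # hypothetical (data, targets) batches
buf = io.StringIO()

pbar = tqdm(fake_loader, file=buf)  # len(fake_loader) is picked up automatically
indices = []
for index, (data, targets) in enumerate(pbar):
    indices.append(index)

print(pbar.total, indices)  # 3 [0, 1, 2]
```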

我们觉得还有点不太满足现在的进度条,我们得给他加上我们需要的信息,比如准确率,loss值,如何加呢?

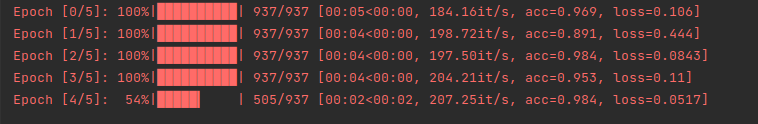

```python
for epoch in range(num_epochs):
    losses = []
    accuracy = []
    # for data, targets in tqdm(train_loader, leave=False):  # keep the progress on a single line
    loop = tqdm(train_loader, total=len(train_loader))
    for data, targets in loop:
        # Get data to cuda if possible
        data = data.to(device=device)
        targets = targets.to(device=device)

        # forward
        scores = model(data)
        loss = criterion(scores, targets)
        losses.append(loss.item())  # store a Python float, not the tensor, so the graph can be freed

        # backward
        optimizer.zero_grad()
        loss.backward()

        _, predictions = scores.max(1)
        num_correct = (predictions == targets).sum()
        running_train_acc = float(num_correct) / float(data.shape[0])
        accuracy.append(running_train_acc)

        # gradient descent or adam step
        optimizer.step()

        # epoch on the left of the bar, current loss and accuracy on the right
        loop.set_description(f"Epoch [{epoch + 1}/{num_epochs}]")
        loop.set_postfix(loss=loss.item(), acc=running_train_acc)
```
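The loop above collects `losses` and `accuracy` but only displays the latest batch, which fluctuates a lot. One option is to show the running mean of each list in `set_postfix`. The sketch below uses made-up per-batch values and no PyTorch so that it runs on its own:

```python
import io

from tqdm import tqdm

# hypothetical per-batch (loss, accuracy) values standing in for a real training loop
batch_stats = [(0.9, 0.50), (0.7, 0.60), (0.5, 0.70), (0.3, 0.80)]
buf = io.StringIO()

losses, accuracy = [], []
loop = tqdm(batch_stats, total=len(batch_stats), file=buf)
for loss_value, acc_value in loop:
    losses.append(loss_value)
    accuracy.append(acc_value)
    loop.set_description("Epoch [1/1]")
    # average over all batches seen so far in this epoch
    loop.set_postfix(loss=sum(losses) / len(losses), acc=sum(accuracy) / len(accuracy))

mean_loss = sum(losses) / len(losses)
mean_acc = sum(accuracy) / len(accuracy)
print(round(mean_loss, 4), round(mean_acc, 4))  # 0.6 0.65
```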

As you can see, the epoch, the accuracy (acc) and the loss are now all printed to the console. That covers the basics of using tqdm.
