【pytorch】torch.mm,torch.bmm以及torch.matmul的使用

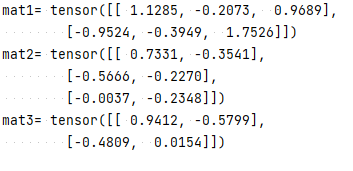

torch.mmtorch.mm是两个矩阵相乘,即两个二维的张量相乘如下面的例子mat1 = torch.randn(2,3)print("mat1=", mat1)mat2 = torch.randn(3,2)print("mat2=", mat2)mat3 = torch.mm(mat1, mat2)print("mat3=", mat3)但是如果维度超过二维,则会报错。RuntimeErro

torch.mm

torch.mm是两个矩阵相乘,即两个二维的张量相乘

如下面的例子

mat1 = torch.randn(2,3)

print("mat1=", mat1)

mat2 = torch.randn(3,2)

print("mat2=", mat2)

mat3 = torch.mm(mat1, mat2)

print("mat3=", mat3)

但是如果维度超过二维,则会报错。RuntimeError: self must be a matrix

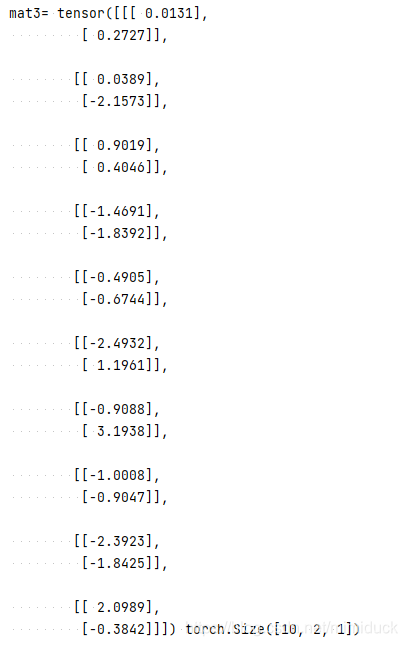

torch.bmm

它其实就是加了一维batch,所以第一位为batch,并且要两个Tensor的batch相等。

第二维和第三维就是mm运算了,同上了。

示例代码如下:

mat1 = torch.randn(10, 2, 4)

# print("mat1=", mat1)

mat2 = torch.randn(10, 4, 1)

# print("mat2=", mat2)

mat3 = torch.matmul(mat1, mat2)

print("mat3=", mat3, mat3.shape)

torch.matmul

torch.mm仅仅是供矩阵相乘使用,使用范围较为狭窄。

而torch.matmul使用的场合就比较多了。

如官方文档所介绍,有如下几种:

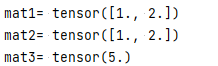

- If both tensors are 1-dimensional, the dot product (scalar) is returned.

如果两个tensor都是一维的,则为点乘运算,即每个元素对应相乘求和

如下:

mat1 = torch.Tensor([1,2])

print("mat1=", mat1)

mat2 = torch.Tensor([1,2])

print("mat2=", mat2)

mat3 = torch.matmul(mat1, mat2)

print("mat3=", mat3)

公式如下:

A

=

[

a

1

,

a

2

,

.

.

.

,

a

n

]

B

=

[

b

1

,

b

2

,

.

.

.

,

b

n

]

m

a

t

m

u

l

(

A

,

B

)

=

∑

i

a

i

b

i

A=[a_1,a_2 ,..., a_n] \newline B=[b_1,b_2 ,..., b_n] \newline matmul(A,B)=\sum_{i}a_ib_i

A=[a1,a2,...,an]B=[b1,b2,...,bn]matmul(A,B)=i∑aibi

样例代码如下:

-

If both arguments are 2-dimensional, the matrix-matrix product is returned.

如果两个都是二维的,那么就如同torch.mm -

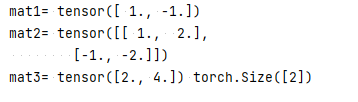

If the first argument is 1-dimensional and the second argument is 2-dimensional, a 1 is prepended to its dimension for the purpose of the matrix multiply. After the matrix multiply, the prepended dimension is removed.

如果第一个入参是一维的,第二个入参是二维的,则第一个参数增加一个一维,做矩阵乘法,结果然后去掉一维

如, 第一参数A shape为(2),第二参数B shape(2,2),

先讲A增加一维,shape为(1,2),然后计算矩阵相乘

结果为(1,2),再去掉第一维,改为(2)

代码样例如下:

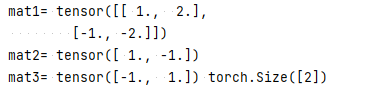

mat1 = torch.Tensor([1,-1])

print("mat1=", mat1)

mat2 = torch.Tensor([[1,2],[-1,-2]])

print("mat2=", mat2)

mat3 = torch.matmul(mat1, mat2)

print("mat3=", mat3, mat3.shape)

- If the first argument is 2-dimensional and the second argument is 1-dimensional, the matrix-vector product is returned.

如果第一个入参是二维的,第二个是一维,则将第二个参数扩展一维,做矩阵乘法。

如, 第一参数A shape为(2,2),第二参数B shape(2),

先将B增加一维,shape为(2,1),然后计算矩阵相乘

结果为(2,1),再去掉第一维,改为(2)

代码样例如下:

mat1 = torch.Tensor([[1,2],[-1,-2]])

print("mat1=", mat1)

mat2 = torch.Tensor([1,-1])

print("mat2=", mat2)

mat3 = torch.matmul(mat1, mat2)

print("mat3=", mat3, mat3.shape)

- If both arguments are at least 1-dimensional and at least one argument is N-dimensional (where N > 2), then a batched matrix multiply is returned. If the first argument is 1-dimensional, a 1 is prepended to its dimension for the purpose of the batched matrix multiply and removed after. If the second argument is 1-dimensional, a 1 is appended to its dimension for the purpose of the batched matrix multiple and removed after. The non-matrix (i.e. batch) dimensions are broadcasted (and thus must be broadcastable). For example, if input is a (j \times 1 \times n \times n)(j×1×n×n) tensor and other is a (k \times n \times n)(k×n×n) tensor, out will be a (j \times k \times n \times n)(j×k×n×n) tensor

最后一个看的很复杂,实际上需要明白2点:

一,两边shape的rank要相同,最后两维是用来做mm计算的

二,如果shape的rank不同,则可以通过“broadcast”,即+1维。 如shape(10,4,2)matmul shape(2,3)则变为 shape(10,4,2)matmul shape(1,2,3)

但是要注意:

- 如果是shape(10,4,2)matmul shape(2,2,3)会报错,因为batch不匹配

- 如果是shape(2,3, 2, 4)matmul shape(2, 4, 1),也会报错。因为batch (2,3)不匹配 (2)

- 但是shape(2,3, 2, 4)matmul shape(3, 4, 1) 是可以的,因为 shape(3, 4, 1) 可以通过broadcast转为shape(1,3, 4, 1)

对比numpy

相对numpy,有类似函数tensordot,具体可以参考我这一篇,但是个人觉得还是numpy的实现更灵活一些

更多推荐

已为社区贡献6条内容

已为社区贡献6条内容

所有评论(0)