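Deploying an ELK Stack with Docker Compose

This document walks through building a fairly simple ELK system. It does not cover advanced ELK usage and is best read as a beginner's build log.

Building an ELK Logging System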

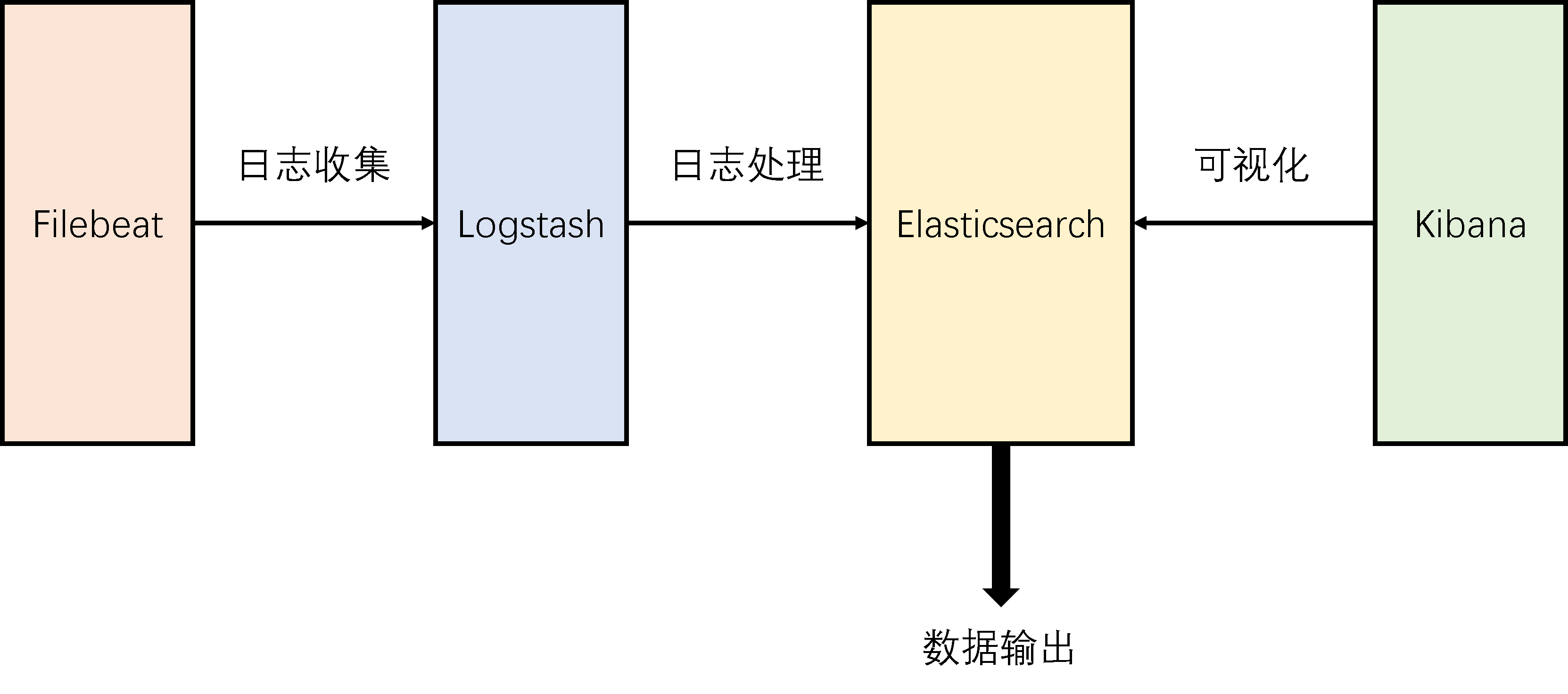

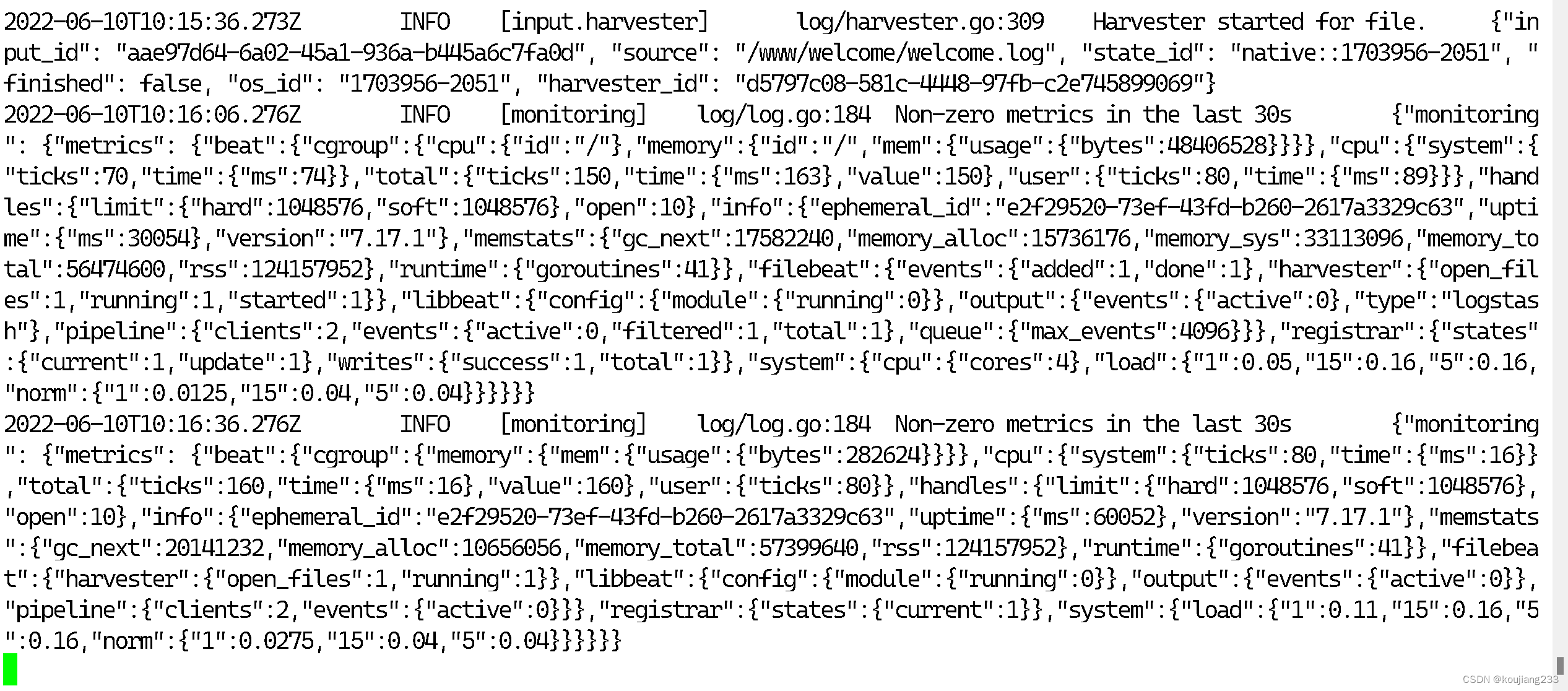

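I. Introduction to the ELK Logging System

ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. Filebeat has since joined them. It is a lightweight log shipper that uses few resources, which makes it well suited to collecting logs on each server and forwarding them to Logstash, and it is the tool officially recommended for that role.

Filebeat now covers most of what Logstash is needed for and can replace it outright in some cases: it can process the collected data, build the index, and send the result straight to Elasticsearch. This document nevertheless uses all four components. The setup is intentionally simple; if you spot mistakes, corrections are welcome.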

| Name | Version |
| --- | --- |
| Ubuntu | ubuntu-22.04-desktop-amd64 |
| Elasticsearch | 7.17.1 |
| Kibana | 7.17.1 |
| Logstash | 7.17.1 |
| FileBeat | 7.17.1 |

II. Building the ELK Logging System

1. Environment setup

    # Install Docker
    sudo apt install docker.io

    # Check the Docker version
    sudo docker --version
    Docker version 20.10.12, build 20.10.12-0ubuntu4

    # Install docker-compose
    sudo apt install docker-compose

    # Check the docker-compose version
    sudo docker-compose --version
    docker-compose version 1.29.2, build unknown

    # Show the directory tree under /www (the layout once every step below is done)
    sudo tree /www

    /www
    ├── apps
    │   ├── elk_all.yml
    │   ├── elk_elasticsearch.yml
    │   ├── elk_filebeat.yml
    │   ├── elk_kibana.yml
    │   └── elk_logstash.yml
    ├── elasticsearch
    │   ├── conf
    │   │   └── elasticsearch.yml
    │   ├── data
    │   └── logs
    ├── filebeat
    │   ├── conf
    │   │   └── filebeat.yml
    │   ├── data
    │   └── logs
    ├── kibana
    │   └── conf
    │       └── kibana.yml
    ├── logstash
    │   └── conf
    │       └── logstash.conf
    └── welcome
        └── welcome.log
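The directory skeleton in the tree can be prepared in one pass before any configuration is written. The following is a minimal sketch rather than part of the original walkthrough: `ELK_ROOT` is a hypothetical variable that defaults to a throwaway temporary directory so the script can be tried safely; for the real setup it would be run with root privileges and `ELK_ROOT=/www`.

```shell
#!/bin/sh
# Sketch: create the directory skeleton shown in the tree.
# ELK_ROOT is an assumed variable; it defaults to a temp dir for a trial run.
set -eu
ELK_ROOT="${ELK_ROOT:-$(mktemp -d)}"

for dir in \
    apps \
    elasticsearch/conf elasticsearch/data elasticsearch/logs \
    filebeat/conf filebeat/data filebeat/logs \
    kibana/conf \
    logstash/conf \
    welcome
do
    mkdir -p "$ELK_ROOT/$dir"
done

echo "Created ELK directory skeleton under $ELK_ROOT"
```

Only directories are created here. The configuration files themselves should still be written as regular files before the containers start, because Docker creates a missing bind-mount source as a directory.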

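2. Setting up and configuring Elasticsearch

Create the directory for the docker-compose files, then the compose file for Elasticsearch:

    sudo mkdir -p /www/apps

    # Write the docker-compose file for Elasticsearch
    sudo vim /www/apps/elk_elasticsearch.yml

The contents of elk_elasticsearch.yml are as follows: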

    version: "3"
    services:
      elasticsearch:
        container_name: elasticsearch
        hostname: elasticsearch
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/elasticsearch:7.17.1
        restart: always
        user: root
        ports:
          - "9200:9200"
        volumes:
          # Map the Elasticsearch configuration file
          - /www/elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
          # Map the data directory for later debugging (create it on the host beforehand)
          - /www/elasticsearch/data:/usr/share/elasticsearch/data
          # Map the log directory for later debugging (create it on the host beforehand)
          - /www/elasticsearch/logs:/usr/share/elasticsearch/logs
        environment:
          - "discovery.type=single-node"   # run Elasticsearch as a single node
          - "TAKE_FILE_OWNERSHIP=true"
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - "TZ=Asia/Shanghai"

Create the directories and the configuration file that Elasticsearch needs:

    sudo mkdir -p /www/elasticsearch/data
    sudo mkdir -p /www/elasticsearch/logs
    sudo mkdir -p /www/elasticsearch/conf

    # Write the Elasticsearch configuration
    sudo vim /www/elasticsearch/conf/elasticsearch.yml

The contents of elasticsearch.yml are as follows:

    # Cluster name
    cluster.name: elasticsearch-cluster
    network.host: 0.0.0.0
    # Allow cross-origin (CORS) requests
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    # Security (authentication is disabled here)
    xpack.security.enabled: false
    #http.cors.allow-headers: "Authorization"

Create the Elasticsearch container:

    sudo docker-compose -f /www/apps/elk_elasticsearch.yml up -d

    # Check that Elasticsearch started successfully
    sudo curl 127.0.0.1:9200

Output like the following means Elasticsearch was created successfully:

    {
      "name" : "elasticsearch",
      "cluster_name" : "elasticsearch-cluster",
      "cluster_uuid" : "AG_lAnGBT06VnTJDHTSfEQ",
      "version" : {
        "number" : "7.17.1",
        "build_flavor" : "default",
        "build_type" : "docker",
        "build_hash" : "e5acb99f822233d62d6444ce45a4543dc1c8059a",
        "build_date" : "2022-02-23T22:20:54.153567231Z",
        "build_snapshot" : false,
        "lucene_version" : "8.11.1",
        "minimum_wire_compatibility_version" : "6.8.0",
        "minimum_index_compatibility_version" : "6.0.0-beta1"
      },
      "tagline" : "You Know, for Search"
    }
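Elasticsearch can take some time to start, so a `curl` issued right after `up -d` may fail even though the container is healthy. One way to handle this is a small retry helper; the sketch below is an illustration, and the `retry` function with its arguments (number of attempts, delay in seconds, command) is a hypothetical helper, not something the original setup provides.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds or ATTEMPTS run out.
# Returns 0 on the first success and 1 if every attempt fails.
retry() {
    _attempts=$1
    _delay=$2
    shift 2
    _i=1
    while [ "$_i" -le "$_attempts" ]; do
        if "$@"; then
            return 0
        fi
        # Pause between attempts, but not after the last one.
        if [ "$_i" -lt "$_attempts" ]; then
            sleep "$_delay"
        fi
        _i=$((_i + 1))
    done
    return 1
}

# Example (not executed here): poll Elasticsearch for up to about a minute.
#   retry 30 2 curl -fsS http://127.0.0.1:9200
```

The same helper could be pointed at port 5601 once Kibana is running.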

3. Setting up and configuring Kibana

Create the docker-compose file for Kibana:

    # Write the docker-compose file for Kibana
    sudo vim /www/apps/elk_kibana.yml

The contents of elk_kibana.yml are as follows:

    version: "3"
    services:
      kibana:
        container_name: kibana
        hostname: kibana
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/kibana:7.17.1
        restart: always
        ports:
          - "5601:5601"
        volumes:
          # Map the Kibana configuration file
          - /www/kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
        environment:
          # Replace <HOST_IP> with this machine's IP address
          - elasticsearch.hosts=http://<HOST_IP>:9200
          - "TZ=Asia/Shanghai"

Create the directory and the configuration file that Kibana needs:

    sudo mkdir -p /www/kibana/conf
    sudo vim /www/kibana/conf/kibana.yml

The contents of kibana.yml are as follows:

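    # Server port
    server.port: 5601
    # Server bind address
    server.host: "0.0.0.0"
    # Elasticsearch (replace <HOST_IP> with this machine's IP address)
    elasticsearch.hosts: ["http://<HOST_IP>:9200"]
    # Use the Chinese UI locale
    i18n.locale: "zh-CN"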
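Several files in this walkthrough need the machine's own IP address filled in. As a hedged sketch, the substitution can be scripted, assuming the placeholder is written as the literal token `<HOST_IP>`; that token and the `fill_host_ip` helper are assumptions of this example. On Ubuntu the address itself can usually be read with `hostname -I`.

```shell
#!/bin/sh
# fill_host_ip FILE IP: replace every literal <HOST_IP> token in FILE with IP.
# Both the token and the function name are assumptions made for this sketch.
fill_host_ip() {
    _file=$1
    _ip=$2
    _tmp="$_file.tmp"
    sed "s/<HOST_IP>/$_ip/g" "$_file" > "$_tmp" && mv "$_tmp" "$_file"
}

# Demo on a throwaway file; 192.0.2.10 is a documentation-only address.
demo=$(mktemp)
printf 'elasticsearch.hosts: ["http://<HOST_IP>:9200"]\n' > "$demo"
fill_host_ip "$demo" 192.0.2.10
cat "$demo"
```

For the real files under /www the function would need to run with root privileges.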

Create the Kibana container:

    sudo docker-compose -f /www/apps/elk_kibana.yml up -d

Open http://<HOST_IP>:5601 in a browser; if the Kibana page loads, the container was created successfully.

4. Setting up and configuring Logstash

Create the docker-compose file for Logstash:

    # Write the docker-compose file for Logstash
    sudo vim /www/apps/elk_logstash.yml

The contents of elk_logstash.yml are as follows:

    version: "3"
    services:
      logstash:
        container_name: logstash
        hostname: logstash
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/logstash:7.17.1
        command: logstash -f ./conf/logstash.conf
        restart: always
        volumes:
          # Map the pipeline configuration into the container
          - /www/logstash/conf/logstash.conf:/usr/share/logstash/conf/logstash.conf
        environment:
          # Replace <HOST_IP> with this machine's IP address
          - elasticsearch.hosts=http://<HOST_IP>:9200
          # Avoids the Logstash monitoring connection error
          # (192.168.216.144 is the author's host IP; replace it with your own)
          - xpack.monitoring.elasticsearch.hosts=http://192.168.216.144:9200
          - "TZ=Asia/Shanghai"
        ports:
          - "5044:5044"

Create the directory and the configuration file that Logstash needs:

    sudo mkdir -p /www/logstash/conf
    sudo vim /www/logstash/conf/logstash.conf

The contents of logstash.conf are as follows:

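    input {
      beats {
        port => 5044
        client_inactivity_timeout => 36000
      }
    }

    # Parsing and filter plugins; more than one can be listed
    filter {
      # Extract an ISO 8601 timestamp from the message into "logdate"
      grok {
        match => ["message", "%{TIMESTAMP_ISO8601:logdate}"]
      }
      # Use "logdate" as the event's @timestamp
      date {
        match => ["logdate", "yyyy-MM-dd HH:mm:ss.SSS"]
        target => "@timestamp"
      }
    }

    output {
      elasticsearch {
        # Replace <HOST_IP> with this machine's IP address
        hosts => "http://<HOST_IP>:9200"
        index => "%{[fields][log_topics]}-%{+YYYY.MM.dd}"
        document_type => "%{[@metadata][type]}"
      }
    }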
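The `index` setting in the output builds the index name from the event's `fields.log_topics` value and the date of its `@timestamp`, which Logstash formats in UTC. The sketch below computes the name to expect for a given topic; `expected_index` is a hypothetical helper introduced only for illustration.

```shell
#!/bin/sh
# expected_index TOPIC [DAY]: print the index name the output pattern
# "%{[fields][log_topics]}-%{+YYYY.MM.dd}" yields for TOPIC.
# DAY defaults to today's date in UTC, formatted as YYYY.MM.dd.
expected_index() {
    _topic=$1
    _day=${2:-$(date -u +%Y.%m.%d)}
    printf '%s-%s\n' "$_topic" "$_day"
}

expected_index welcome 2022.05.01

# To list matching indices on a running node (not executed here):
#   curl -s "http://127.0.0.1:9200/_cat/indices/welcome-*?v"
```

Because the date is taken in UTC, a host running in the Asia/Shanghai time zone may see a new daily index start at 08:00 local time rather than at midnight.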

Create the Logstash container:

    sudo docker-compose -f /www/apps/elk_logstash.yml up -d

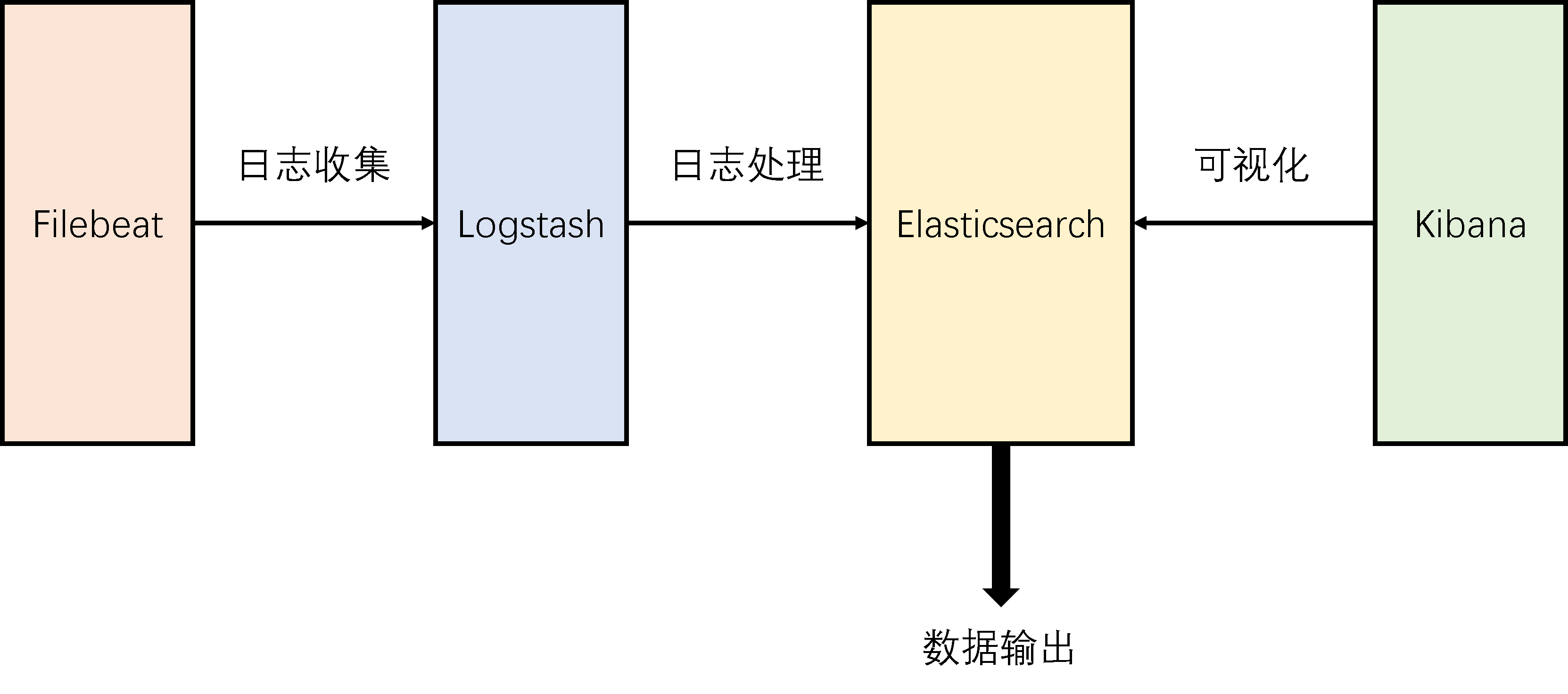

Check the Logstash container:

    sudo docker logs logstash -f

The container has been created successfully once the startup log appears without errors.

5. Setting up and configuring Filebeat

Create the docker-compose file for Filebeat:

    # Write the docker-compose file for Filebeat
    sudo vim /www/apps/elk_filebeat.yml

The contents of elk_filebeat.yml are as follows:

    version: "3"
    services:
      filebeat:
        # Container name
        container_name: filebeat
        # Host name
        hostname: filebeat
        # Image
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/filebeat:7.17.1
        # Restart policy
        restart: always
        # User the container runs as
        user: root
        # Persistent mounts
        volumes:
          # Mapped into the container as data sources
          - /var/lib/docker/containers:/var/lib/docker/containers
          - /www/welcome:/www/welcome
          # Make data and logs easy to inspect from the host
          - /www/filebeat/logs:/usr/share/filebeat/logs
          - /www/filebeat/data:/usr/share/filebeat/data
          # Map the configuration file into the container
          - /www/filebeat/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
        # Use host networking
        network_mode: host

Create the directories and the configuration file that Filebeat needs:

    sudo mkdir -p /www/filebeat/logs
    sudo mkdir -p /www/filebeat/data
    sudo mkdir -p /www/filebeat/conf

    # Write the Filebeat configuration
    sudo vim /www/filebeat/conf/filebeat.yml

The contents of filebeat.yml are as follows:

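    filebeat.inputs:
      - type: log
        enabled: true
        scan_frequency: 1s
        paths:
          - /www/welcome/*.log
        # Lines without a timestamp are appended to the preceding line that has one
        multiline:
          pattern: '[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}'
          negate: true
          match: after
        fields:
          log_topics: welcome

      # Alternative (disabled): collect the logs of every container
      #- type: docker
      #  combine_partial: true
      #  scan_frequency: 1s
      #  containers:
      #    path: "/var/lib/docker/containers"
      #    ids:
      #      - "*"
      #  tags: ["docker"]
      #  fields:
      #    log_topics: docker

      - type: docker
        scan_frequency: 1s
        containers:
          path: "/var/lib/docker/containers"
          ids:
            # Replace with the full ID of the container whose logs to collect
            - "<CONTAINER_ID>"
        tags: ["docker"]
        fields:
          # Replace with the label to use as the index-name prefix
          log_topics: <TOPIC_NAME>

    output.logstash:
      # 192.168.216.144 is the author's host IP; replace it with your own
      hosts: ["192.168.216.144:5044"]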
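The `multiline` settings (`negate: true`, `match: after`) mean that a line matching the timestamp pattern starts a new event and every following non-matching line is appended to it. The sketch below approximates that grouping with `awk` so the effect can be seen on sample input. It is an illustration only: `group_multiline` is a hypothetical helper, the " | " joiner is chosen for display, and Filebeat's timeout and line-count limits are not modelled.

```shell
#!/bin/sh
# group_multiline: read lines on stdin and print one line per grouped event.
# A line containing a timestamp like 2022-05-01T10:00:00 starts a new event;
# any other line is appended to the current event (joined with " | " here).
group_multiline() {
    awk '
        /[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]T[0-9][0-9]:[0-9][0-9]:[0-9][0-9]/ {
            if (have) print buf
            buf = $0; have = 1
            next
        }
        {
            if (have) buf = buf " | " $0
            else { buf = $0; have = 1 }
        }
        END { if (have) print buf }
    '
}

# Sample input: a two-line stack trace followed by a single-line event.
printf '%s\n' \
    '2022-05-01T10:00:00 request failed' \
    '  at handler (line 12)' \
    '2022-05-01T10:00:01 request ok' | group_multiline
```

With this sample the first two lines come out as one event and the third as another, which is the shape in which Filebeat would hand them to Logstash.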

Create the test log file:

    sudo mkdir -p /www/welcome
    sudo vim /www/welcome/welcome.log

with the following content:

    !!!!!WELCOME!!!!!

Create the Filebeat container:

    sudo docker-compose -f /www/apps/elk_filebeat.yml up -d

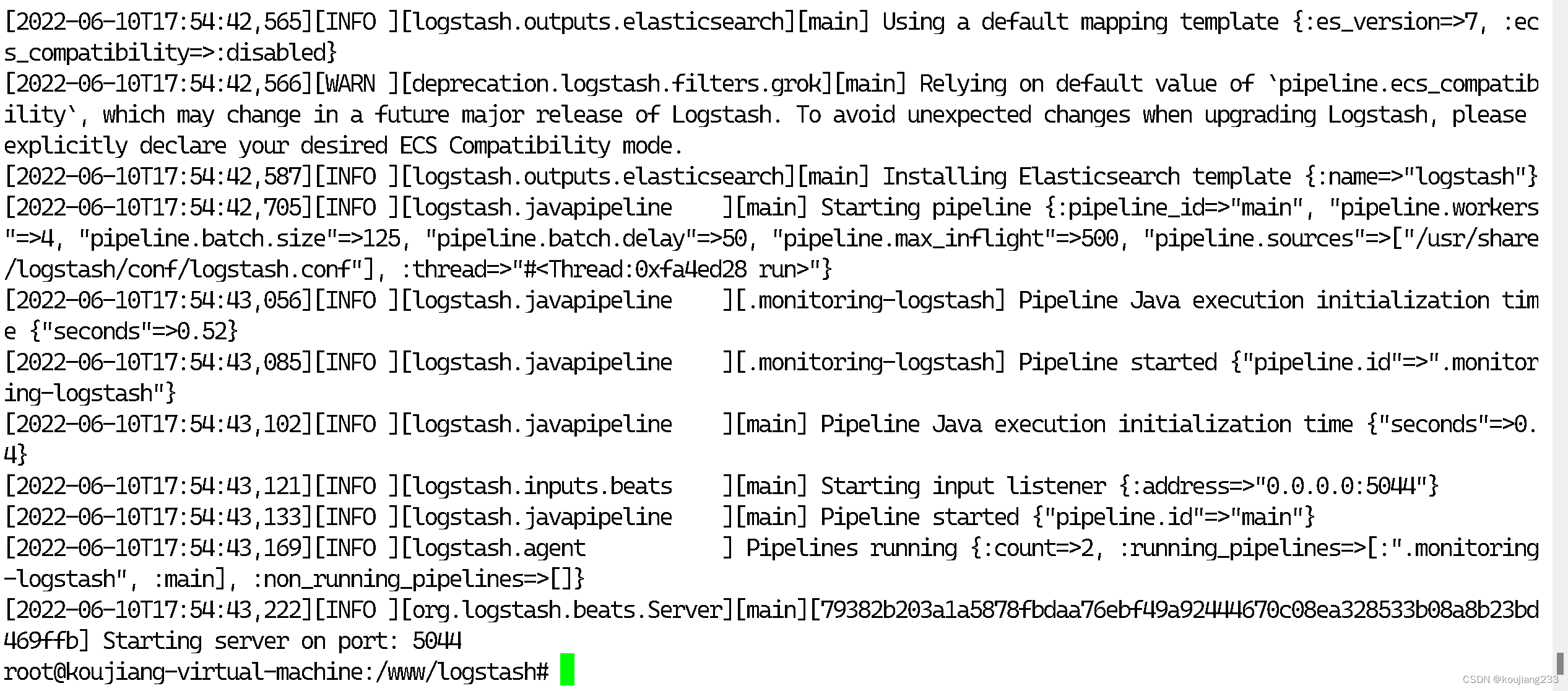

    # Check that Filebeat started successfully
    sudo docker logs filebeat -f

Output like the following means Filebeat has started and is watching for input:

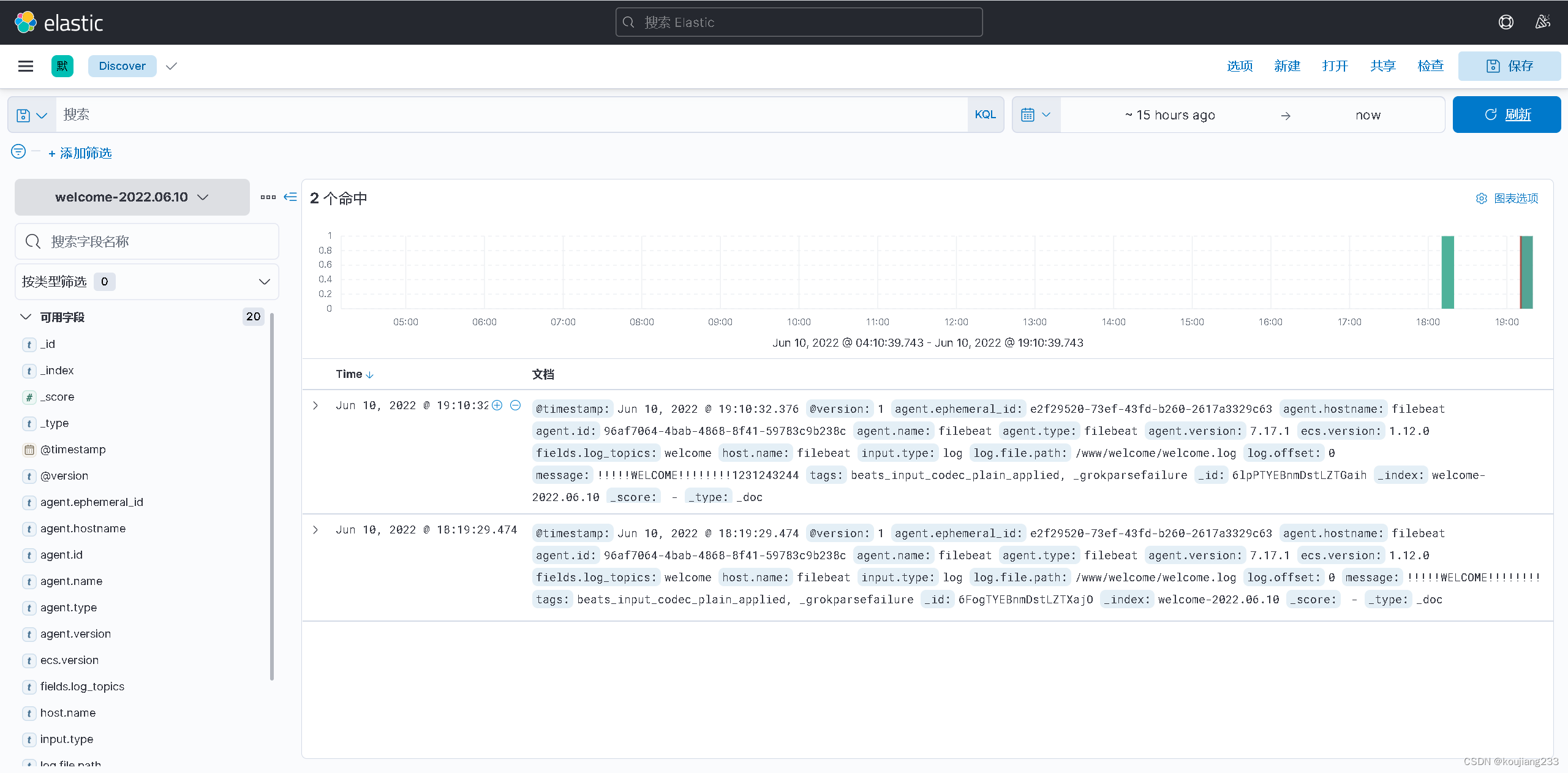

进行如下操作对welcome.log的日志进行刷新,防止elasticsearch的索引刷新不成功:

sudo vim /www/welcome/welcome.log

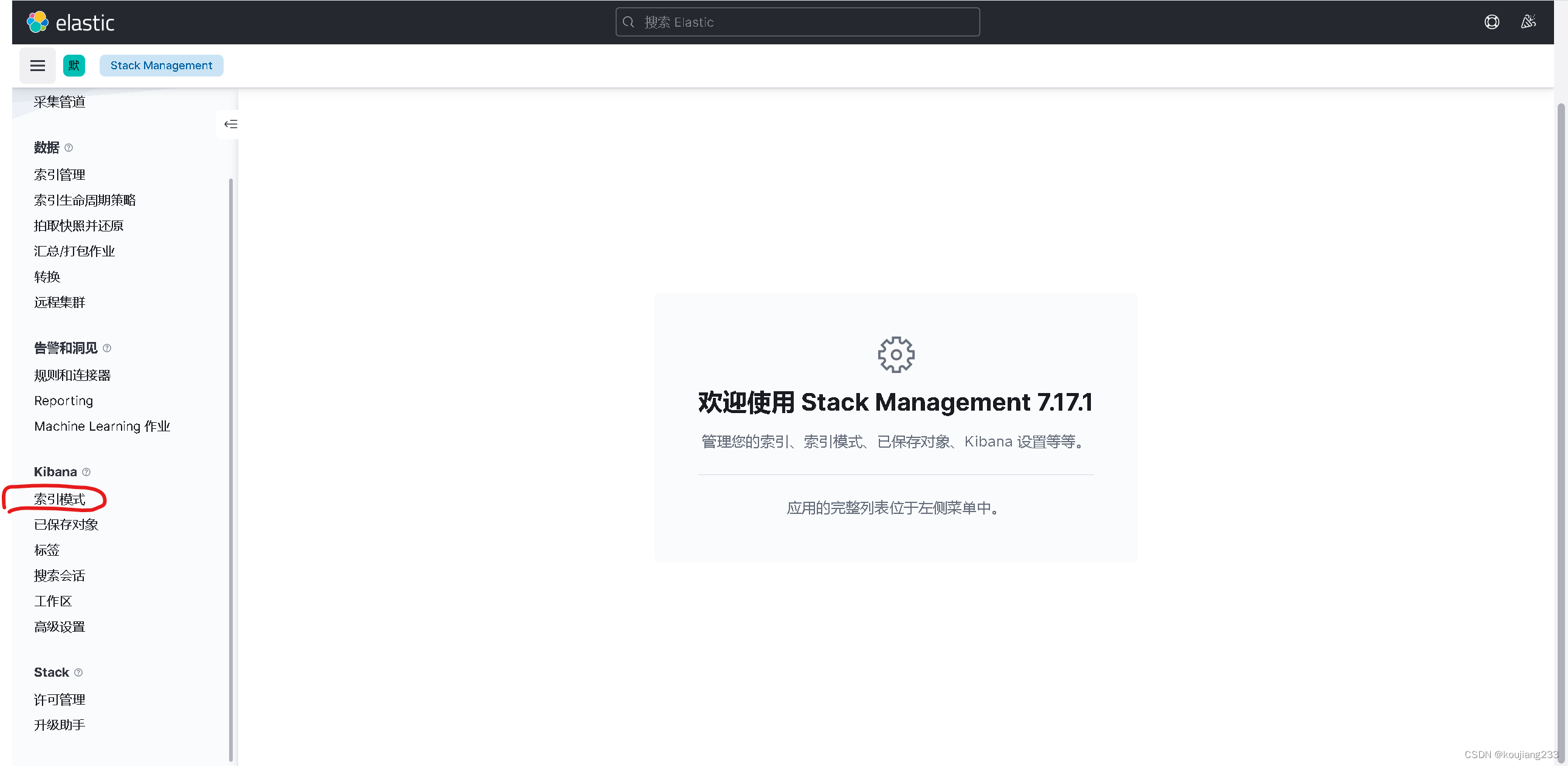

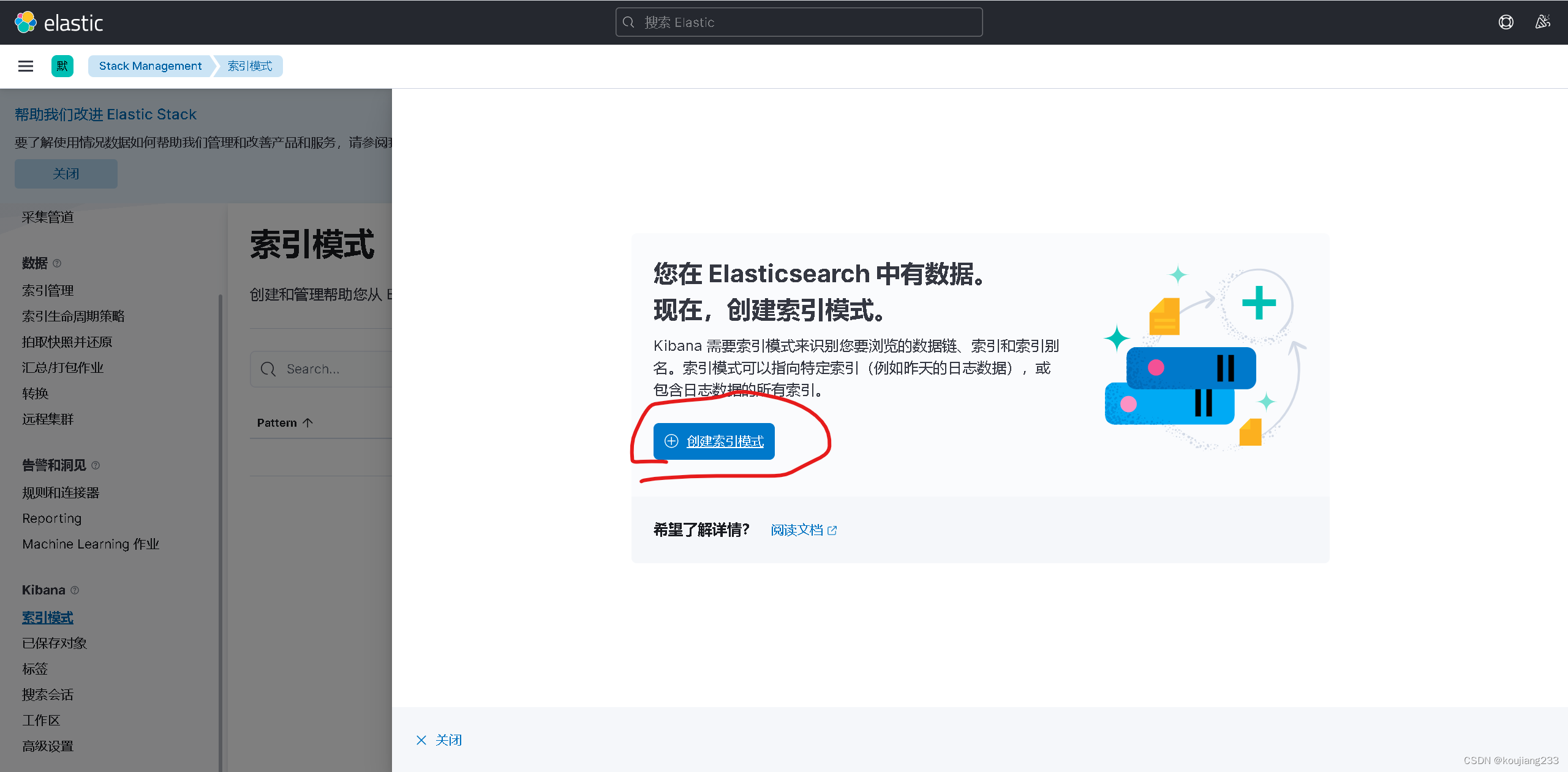

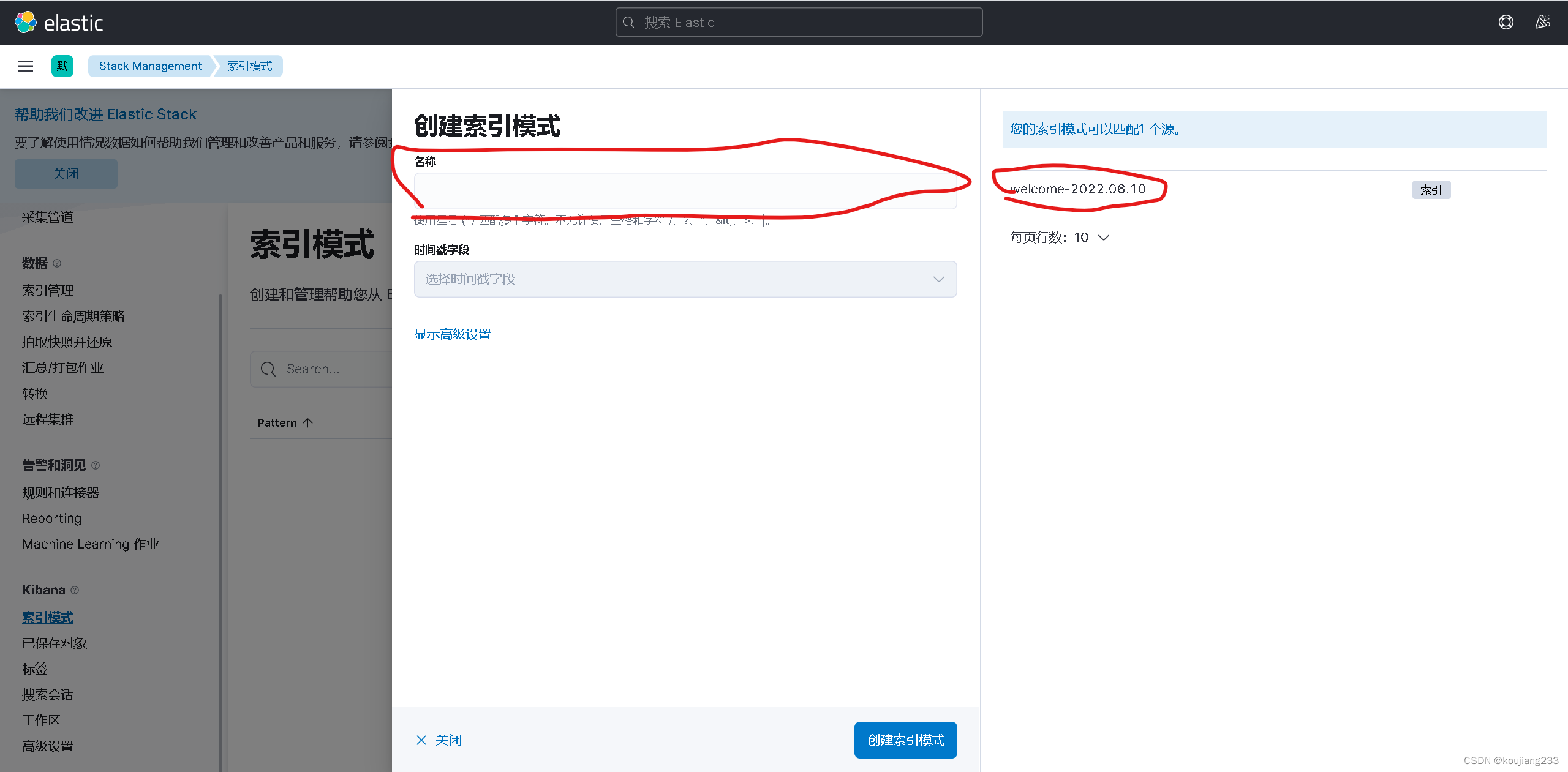

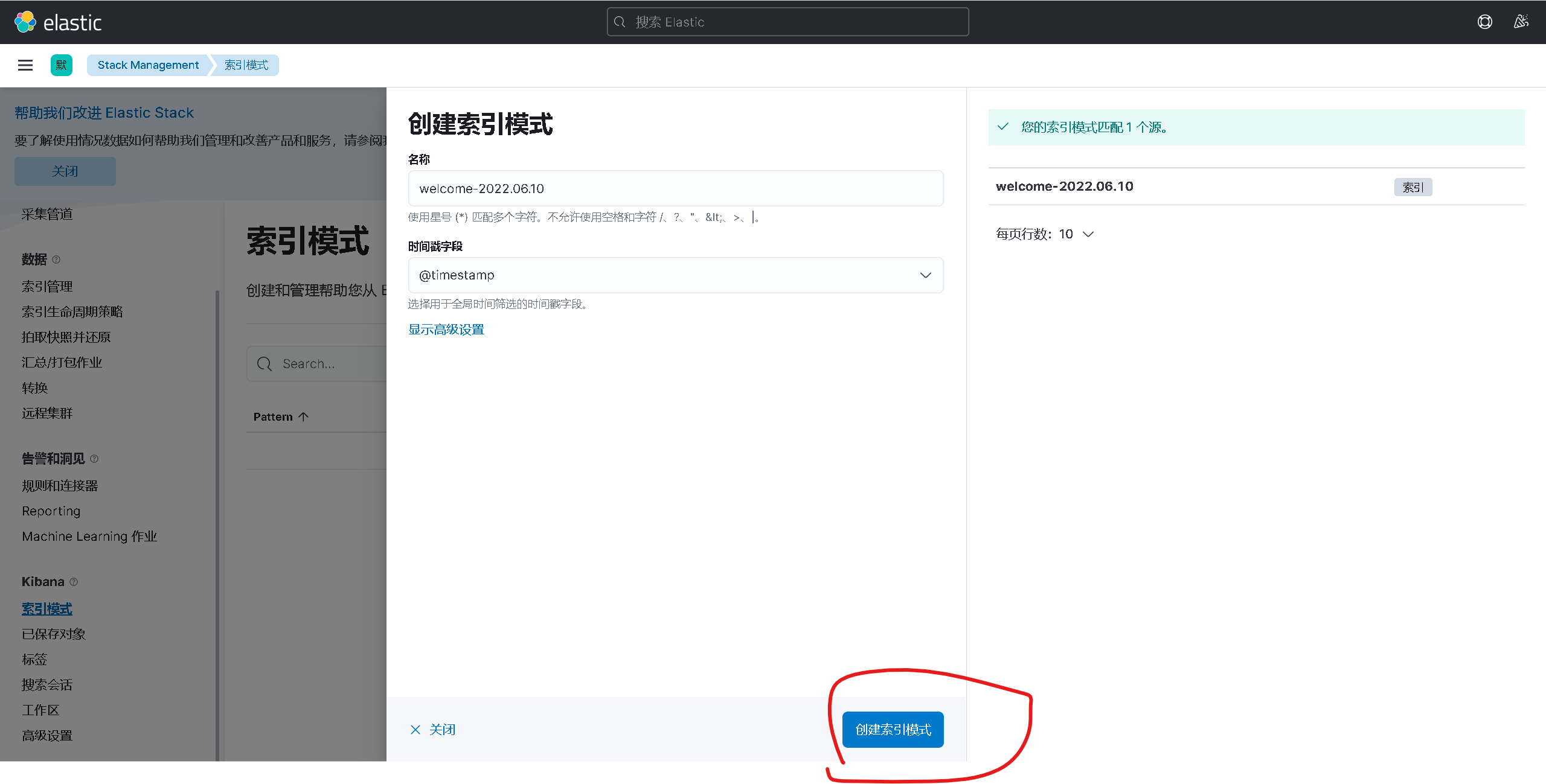

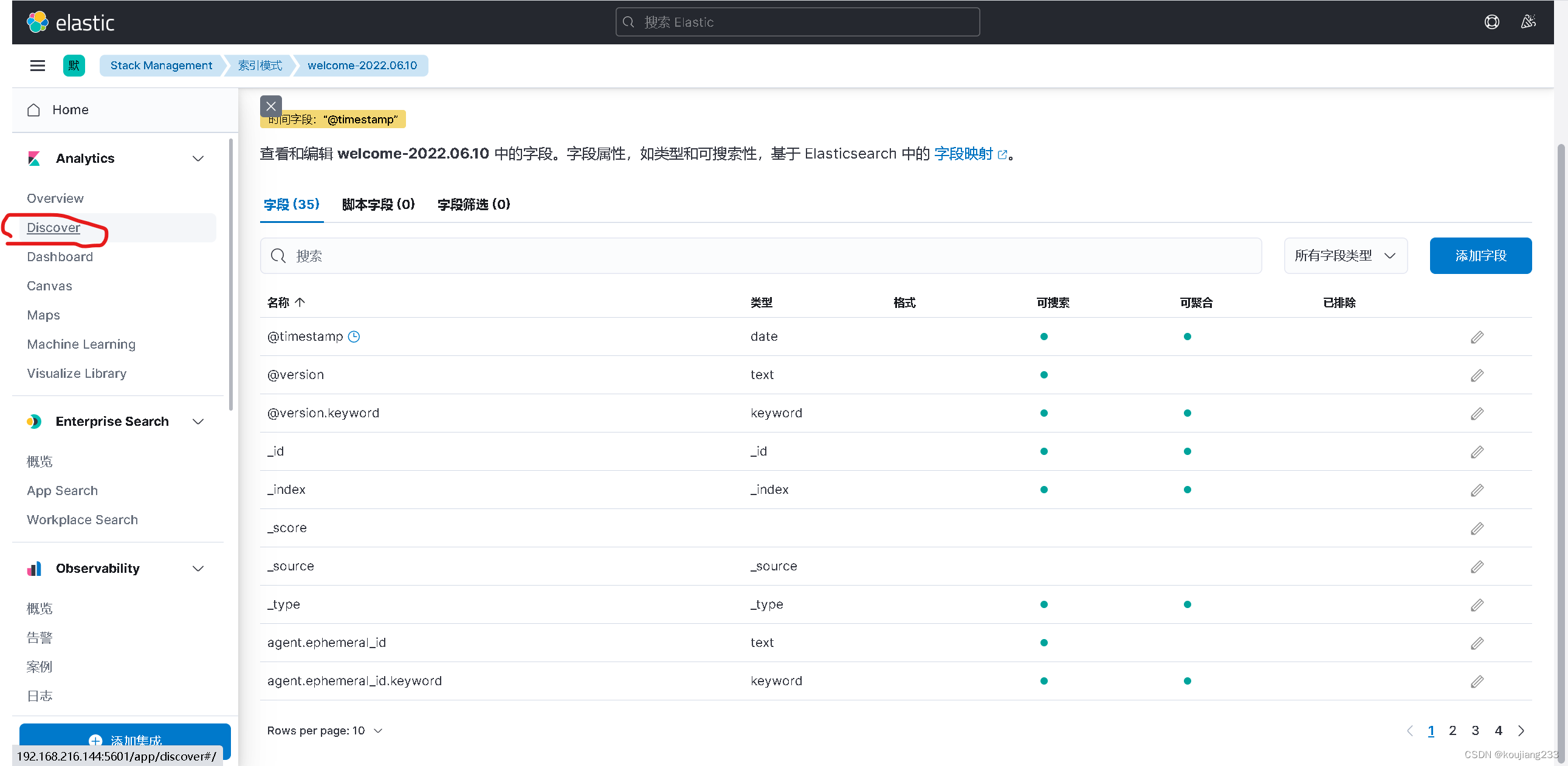

!!!!!WELCOME!!!!!123123123按照如下流程进行操作:
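Instead of opening an editor, new content can also be appended to the log from the command line. The following is a small sketch of that alternative; `append_line` is a hypothetical helper, and the demo writes to a temporary file rather than to /www/welcome/welcome.log.

```shell
#!/bin/sh
# append_line FILE TEXT: append TEXT as one new line at the end of FILE.
append_line() {
    printf '%s\n' "$2" >> "$1"
}

# Demo on a throwaway file; for the real file the command would need root,
# e.g.  echo '!!!!!WELCOME!!!!!123123123' | sudo tee -a /www/welcome/welcome.log
log=$(mktemp)
append_line "$log" '!!!!!WELCOME!!!!!'
append_line "$log" '!!!!!WELCOME!!!!!123123123'
cat "$log"
```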

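6. Creating a combined docker-compose file

Create a single docker-compose file that brings the four components above together:

    sudo vim /www/apps/elk_all.yml

The contents of elk_all.yml are as follows: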

    version: "3"
    services:
      elasticsearch:
        container_name: elasticsearch
        hostname: elasticsearch
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/elasticsearch:7.17.1
        restart: always
        user: root
        ports:
          - "9200:9200"
        volumes:
          - /www/elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
          - /www/elasticsearch/data:/usr/share/elasticsearch/data
          - /www/elasticsearch/logs:/usr/share/elasticsearch/logs
        environment:
          - "discovery.type=single-node"
          - "TAKE_FILE_OWNERSHIP=true"
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - "TZ=Asia/Shanghai"

      kibana:
        container_name: kibana
        hostname: kibana
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/kibana:7.17.1
        restart: always
        ports:
          - "5601:5601"
        volumes:
          - /www/kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
        environment:
          # 192.168.216.144 is the author's host IP; replace it with your own
          - elasticsearch.hosts=http://192.168.216.144:9200
          - "TZ=Asia/Shanghai"
        depends_on:
          - elasticsearch

      logstash:
        container_name: logstash
        hostname: logstash
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/logstash:7.17.1
        command: logstash -f ./conf/logstash.conf
        restart: always
        volumes:
          # Map the pipeline configuration into the container
          - /www/logstash/conf/logstash.conf:/usr/share/logstash/conf/logstash.conf
        environment:
          - elasticsearch.hosts=http://192.168.216.144:9200
          # Avoids the Logstash monitoring connection error
          - xpack.monitoring.elasticsearch.hosts=http://192.168.216.144:9200
          - "TZ=Asia/Shanghai"
        ports:
          - "5044:5044"
        depends_on:
          - elasticsearch

      filebeat:
        # Container name
        container_name: filebeat
        # Host name
        hostname: filebeat
        # Image
        image: registry.cn-hangzhou.aliyuncs.com/koujiang-images/filebeat:7.17.1
        # Restart policy
        restart: always
        # User the container runs as
        user: root
        # Persistent mounts
        volumes:
          # Mapped into the container as data sources
          - /var/lib/docker/containers:/var/lib/docker/containers
          - /www/welcome:/www/welcome
          # Make data and logs easy to inspect from the host
          - /www/filebeat/logs:/usr/share/filebeat/logs
          - /www/filebeat/data:/usr/share/filebeat/data
          # Map the configuration file into the container
          - /www/filebeat/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
        # Use host networking
        network_mode: host
        depends_on:
          - elasticsearch
          - logstash

(This file can start all four components at once.)
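The combined file is used with the same `docker-compose -f ... up -d` form as the per-component files. The sketch below wraps the usual commands in a helper; `elk_compose`, `ELK_COMPOSE_FILE`, and `DRY_RUN` are names assumed for this illustration, and with `DRY_RUN=1` the commands are only printed so the script can be tried on a machine without Docker.

```shell
#!/bin/sh
# elk_compose ARGS...: run docker-compose against the combined file.
# ELK_COMPOSE_FILE and DRY_RUN are assumed names for this sketch; with
# DRY_RUN=1 the command is only printed, not executed.
ELK_COMPOSE_FILE="${ELK_COMPOSE_FILE:-/www/apps/elk_all.yml}"

elk_compose() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "sudo docker-compose -f $ELK_COMPOSE_FILE $*"
    else
        sudo docker-compose -f "$ELK_COMPOSE_FILE" "$@"
    fi
}

# Print the commands of a typical session without running them.
DRY_RUN=1
elk_compose config -q          # validate the file without printing it
elk_compose up -d              # start all four services in the background
elk_compose ps                 # list the state of the services
elk_compose logs -f logstash   # follow the log of one service
```

Setting `DRY_RUN=0` runs the same commands for real.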

Reference: "Docker搭建ELK日志日志分析系统" (Building an ELK log analysis system with Docker), 真滴是小机灵鬼's blog on CSDN
