ELK 8.0 Deployment Guide (rsyslog + Kafka + Logstash + Elasticsearch + Kibana)

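I recently needed to collect Linux system logs. Newer rsyslog versions can write directly to Kafka, so the plan is to put rsyslog in front of a conventional ELK cluster. The rough architecture is: clients send syslog to an rsyslog server, the rsyslog server writes to Kafka, Logstash consumes from Kafka and writes to Elasticsearch, and Kibana is used for viewing.

I initially considered filebeat, but adding another agent introduces one more thing that can go wrong. With the rsyslog that ships with the system, a client only needs one configuration line pointing at the server, and the data can be sent over UDP, which is also efficient.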

Component versions:

    elasticsearch 8.0.0
    logstash 8.0.0
    kibana 8.0.0
    rsyslog 8.24.0
    kafka 3.2.0

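All components are recent versions. This Kafka release can run without a separately deployed ZooKeeper (KRaft mode). Note, however, that the server.properties shown in the Kafka section uses zookeeper.connect, which still expects a ZooKeeper ensemble at those addresses.

Elasticsearch, Logstash, and Kibana are installed from rpm packages; Kafka is installed from the tarball.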

Download locations:

    es        https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.0.0-x86_64.rpm
    logstash  https://artifacts.elastic.co/downloads/logstash/logstash-8.0.0-x86_64.rpm
    kibana    https://artifacts.elastic.co/downloads/kibana/kibana-8.0.0-x86_64.rpm
    kafka     https://www.apache.org/dyn/closer.cgi?path=/kafka/3.2.0/kafka_2.12-3.2.0.tgz

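Elasticsearch deployment

Install on the first node:

    rpm -ivh elasticsearch-8.0.0-x86_64.rpm

As of 8.0, installation enables authentication by default, and the generated credentials are printed in the install output.

The output also contains an enrollment token for Kibana. It is only valid for 30 minutes, so it may need to be regenerated later.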

    ✅ Elasticsearch security features have been automatically configured!
    ✅ Authentication is enabled and cluster connections are encrypted.

    ℹ️ Password for the elastic user (reset with `bin/elasticsearch-reset-password -u elastic`):
      <password>

    ℹ️ HTTP CA certificate SHA-256 fingerprint:
      9da4a430e857626b3e126a4bdf32724560bb0c51f093ef29232703ce43712548

    ℹ️ Configure Kibana to use this cluster:
    • Run Kibana and click the configuration link in the terminal when Kibana starts.
    • Copy the following enrollment token and paste it into Kibana in your browser (valid for the next 30 minutes):
      eyJ2ZXIiOiI4LjAuMSIsImFkciI6WyIxMC4xMTMuMjIuMTY1OjkyMDEiXSwiZmdyIjoiOWRhNGE0MzBlODU3NjI2YjNlMTI2YTRiZGYzMjcyNDU2MGJiMGM1MWYwOTNlZjI5MjMyNzAzY2U0MzcxMjU0OCIsImtleSI6Ik9OR21UbjhCcnVQeTczamd4S3FtOkkwS1ZTV1VmUmNpYXpLQzFTMG1TV2cifQ==

    ℹ️ Configure other nodes to join this cluster:
    • Copy the following enrollment token and start new Elasticsearch nodes with `bin/elasticsearch --enrollment-token <token>` (valid for the next 30 minutes):
      eyJ2ZXIiOiI4LjAuMSIsImFkciI6WyIxMC4xMTMuMjIuMTY1OjkyMDEiXSwiZmdyIjoiOWRhNGE0MzBlODU3NjI2YjNlMTI2YTRiZGYzMjcyNDU2MGJiMGM1MWYwOTNlZjI5MjMyNzAzY2U0MzcxMjU0OCIsImtleSI6Ik45R21UbjhCcnVQeTczamd4S3FsOkZjcVZCNFF5VHVhcFZVUmFUVHBLS2cifQ==

    If you're running in Docker, copy the enrollment token and run:
    `docker run -e "ENROLLMENT_TOKEN=<token>" docker.elastic.co/elasticsearch/elasticsearch:8.0.1`
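Because the enrollment tokens expire after 30 minutes, a fresh one usually has to be generated on an already running node before adding Kibana or another Elasticsearch node. The following is a minimal sketch, assuming the rpm install location /usr/share/elasticsearch/bin; the helper name new_enrollment_token and the ES_BIN variable are made up for illustration.

```shell
# Directory holding the Elasticsearch CLI tools (rpm default; adjust if yours differs).
ES_BIN="${ES_BIN:-/usr/share/elasticsearch/bin}"

# new_enrollment_token SCOPE
# SCOPE is "kibana" (token for Kibana) or "node" (token for a new Elasticsearch node).
new_enrollment_token() {
    case "$1" in
        kibana|node) ;;
        *) echo "usage: new_enrollment_token kibana|node" >&2; return 2 ;;
    esac
    "$ES_BIN/elasticsearch-create-enrollment-token" -s "$1"
}
```

Running `new_enrollment_token node` on the first node prints a token for the second and third nodes; `new_enrollment_token kibana` prints one for the Kibana setup page.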

Note that the second and third nodes have to join the existing cluster.

The first step is still the rpm install, followed by the reconfigure command with the node enrollment token from the output above:

    rpm -ivh elasticsearch-8.0.0-x86_64.rpm
    /usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <token>

The last step is the configuration file. Be aware that some settings are generated automatically, so adding them by hand can produce duplicate entries.

elasticsearch.yml

    cluster.name: es
    node.name: node-1
    path.data: /data/elasticsearch/data
    path.logs: /data/elasticsearch/logs
    network.host: 0.0.0.0
    discovery.seed_hosts: ["ip1","ip2", "ip3"]
    cluster.initial_master_nodes: ["node-1"]
    xpack.security.enabled: true
    xpack.security.enrollment.enabled: true
    xpack.security.http.ssl:
      enabled: true
      keystore.path: certs/http.p12
    xpack.security.transport.ssl:
      enabled: true
      verification_mode: certificate
      keystore.path: certs/transport.p12
      truststore.path: certs/transport.p12
    http.host: [_local_, _site_]

To start the service:

    systemctl daemon-reload
    systemctl start elasticsearch
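Once the nodes are started, it can help to confirm that the cluster is reachable over HTTPS with the generated credentials. A minimal sketch, assuming the CA certificate sits at the rpm default /etc/elasticsearch/certs/http_ca.crt and that the node certificate covers the address you connect to; the helper name es_health and the ES_CA variable are hypothetical.

```shell
# CA certificate generated by the 8.x security auto-configuration (assumed rpm default path).
ES_CA="${ES_CA:-/etc/elasticsearch/certs/http_ca.crt}"

# es_health HOST
# Query cluster health over HTTPS as the elastic user; curl prompts for the password.
es_health() {
    curl --silent --show-error --cacert "$ES_CA" -u elastic \
        "https://$1:9200/_cluster/health?pretty"
}
```

For example, `es_health ip1` returns a JSON document whose number_of_nodes field should reach 3 once all three nodes have joined.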

Kafka deployment

Kafka is deployed from the tarball: unpack it on each of the three nodes, then edit the configuration. Comments are kept on their own lines, because a trailing # comment in a properties file becomes part of the value.

    tar -xvf
    vim /data/kafka/config/server.properties

    # must be changed: unique on each broker
    broker.id=0
    num.network.threads=3
    num.io.threads=8
    socket.send.buffer.bytes=102400
    socket.receive.buffer.bytes=102400
    socket.request.max.bytes=104857600
    log.dirs=/data/kafka/kafka-logs
    num.partitions=1
    num.recovery.threads.per.data.dir=1
    offsets.topic.replication.factor=1
    transaction.state.log.replication.factor=1
    transaction.state.log.min.isr=1
    log.retention.hours=168
    log.segment.bytes=1073741824
    log.retention.check.interval.ms=300000
    # must be configured
    zookeeper.connect=ip1:2181,ip2:2181,ip3:2181
    zookeeper.connection.timeout.ms=18000
    group.initial.rebalance.delay.ms=0

Start the broker:

    kafka-server-start.sh -daemon ../config/server.properties

Useful commands

List topics:

    /data/kafka/bin/kafka-topics.sh --list --bootstrap-server ip1:9092

List consumer groups:

    /data/kafka/bin/kafka-consumer-groups.sh --list --bootstrap-server ip1:9092
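Since broker.id has to differ on each of the three nodes, the edit can be scripted instead of done by hand in vim. The following is a small sketch using GNU sed; the helper name set_broker_id is made up for illustration, and the properties path is the one used in this article.

```shell
# set_broker_id FILE ID
# Rewrite the broker.id line of a Kafka properties file in place (GNU sed).
set_broker_id() {
    sed -i "s/^broker\.id=.*/broker.id=$2/" "$1"
}
```

On the second node this would be called as `set_broker_id /data/kafka/config/server.properties 1`, and with 2 on the third node.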

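Logstash deployment

Logstash is also installed from the rpm:

    rpm -ivh logstash-8.0.0-x86_64.rpm

Edit the pipeline configuration:

    vim /etc/logstash/conf.d/test.conf

    input {
      kafka {
        codec => "json"
        group_id => "logs"
        topics => ["system_logs"]
        bootstrap_servers => "ip1:9092,ip2:9092,ip3:9092"
        auto_offset_reset => "latest"
      }
    }
    output {
      elasticsearch {
        hosts => ["https://ip1:9200"]
        index => "sys-log-%{+YYYY.MM.dd}"
        user => "elastic"
        password => "passwd"
        cacert => '/etc/logstash/http_ca.crt'
      }
    }

Note that the Elasticsearch URL is https. The CA certificate file is copied over from the Elasticsearch node (on an rpm install it is at /etc/elasticsearch/certs/http_ca.crt).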
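Before starting the service, the pipeline file can be checked for syntax errors. A minimal sketch, assuming the rpm default locations /usr/share/logstash/bin/logstash and /etc/logstash; the helper name check_pipeline and the LOGSTASH_BIN variable are hypothetical.

```shell
# Logstash launcher (rpm default path; adjust if yours differs).
LOGSTASH_BIN="${LOGSTASH_BIN:-/usr/share/logstash/bin/logstash}"

# check_pipeline FILE
# Parse the pipeline config and exit without starting the pipeline.
check_pipeline() {
    "$LOGSTASH_BIN" --path.settings /etc/logstash --config.test_and_exit -f "$1"
}
```

If `check_pipeline /etc/logstash/conf.d/test.conf` reports no errors, the service can then be started with `systemctl start logstash`.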

Kibana deployment

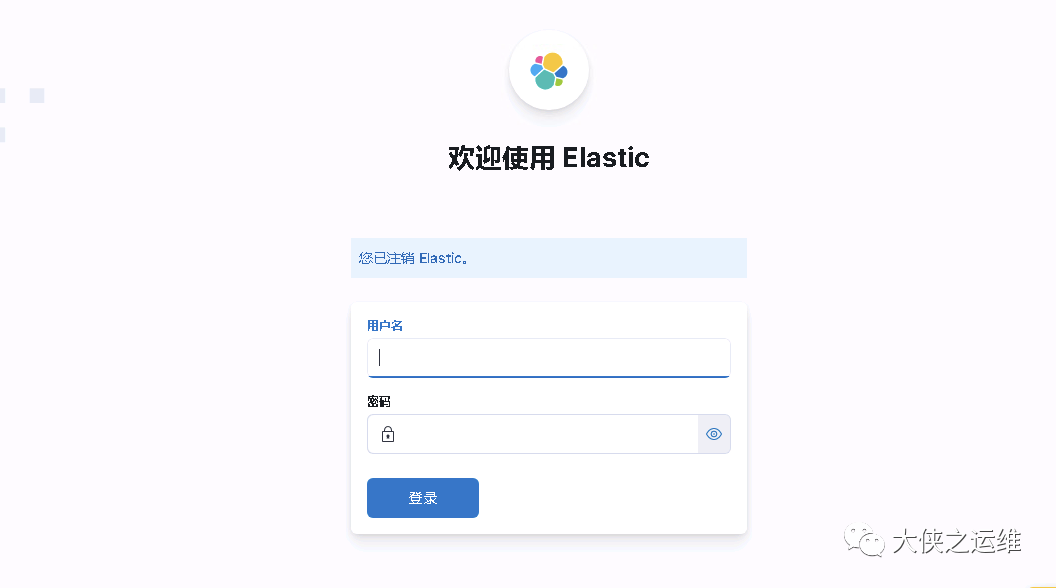

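Kibana is also installed directly from the rpm. After opening the web page, paste in the Kibana enrollment token from the Elasticsearch output above; if it has expired, generate a new one.

    rpm -ivh kibana-8.0.0-x86_64.rpm

Once everything above is running, configure the rsyslog server.

Two configuration files need to be changed: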

/etc/rsyslog.conf

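Add the following lines. The server also needs the Kafka output module, installed with: yum install rsyslog-kafka

    module(load="omkafka")
    module(load="imudp")
    input(type="imudp" port="514" ruleset="tokafka")
    # ip is the rsyslog server address
    *.* @ip:514

The last line forwards all messages to the server over UDP (a single @ means UDP). It is the one line each client needs, with ip replaced by the address of the rsyslog server.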

/etc/rsyslog.d/system.conf

This file defines a template that turns each syslog message into JSON, and a ruleset that sends it to Kafka:

    template(name="json" type="list" option.json="on") {
        constant(value="{")
        constant(value="\"timestamp\":\"")
        property(name="timereported" dateFormat="rfc3339")
        constant(value="\",\"host\":\"")
        property(name="hostname")
        constant(value="\",\"facility\":\"")
        property(name="syslogfacility-text")
        constant(value="\",\"severity\":\"")
        property(name="syslogseverity-text")
        constant(value="\",\"syslog-tag\":\"")
        property(name="syslogtag")
        constant(value="\",\"message\":\"")
        property(name="msg" position.from="2")
        constant(value="\"}")
    }

    ruleset(name="tokafka") {
        action(
            type="omkafka"
            broker="ip1:9092,ip3:9092,ip2:9092"
            confParam=["compression.codec=snappy", "socket.keepalive.enable=true"]
            topic="system_logs"
            partitions.auto="on"
            template="json"
            queue.workerThreads="4"
            queue.size="360000"
            queue.dequeueBatchSize="100"
        )
    }
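To make the shape of the resulting Kafka message concrete, the sketch below prints one line with the same field names and order as the "json" template. It is only an illustration of the layout: the helper name render_syslog_json and all sample values are invented, and unlike rsyslog's option.json="on" it does no JSON escaping.

```shell
# render_syslog_json TIMESTAMP HOST FACILITY SEVERITY TAG MESSAGE
# Print one line in the same field order as the rsyslog "json" template.
# No JSON escaping is done here; rsyslog's option.json="on" handles that.
render_syslog_json() {
    printf '{"timestamp":"%s","host":"%s","facility":"%s","severity":"%s","syslog-tag":"%s","message":"%s"}\n' \
        "$1" "$2" "$3" "$4" "$5" "$6"
}

# Sample values below are invented for illustration.
render_syslog_json "2022-06-01T12:00:00+08:00" "web01" "authpriv" "info" \
    "sshd[1234]:" "Accepted publickey for root"
```

Each such line becomes one record in the system_logs topic, which the Logstash kafka input then parses with its json codec into the fields timestamp, host, facility, severity, syslog-tag, and message.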

That completes the setup. Restart the rsyslog service, wait for logs to arrive, and then configure the index pattern in Kibana.
