Hive client startup warning: SLF4J: Class path contains multiple SLF4J bindings.


When using the Hive client, it is easy to overlook a detail that causes a problem: the SLF4J messages are printed repeatedly at startup.

1. The problem

```
[root@hadoop11 lib]# hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/datafs/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/datafs/hadoop/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
which: no hbase in (/datafs/zookeeper/bin:/datafs/zookeeper/conf:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.292.b10-1.el7_9.aarch64/bin:/datafs/hadoop/hadoop-3.1.1/bin:/datafs/hadoop/hadoop-3.1.1/sbin:/datafs/hadoop/hadoop-3.1.1/libexec:/datafs/hive/bin:/datafs/sqoop-1.4.7.bin__hadoop-2.6.0/bin:/root/bin)
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/datafs/hive/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/datafs/hadoop/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Hive Session ID = cb494b0f-cab0-467b-b217-2388d307df78
Logging initialized using configuration in file:/datafs/hive/conf/hive-log4j2.properties Async: true
Hive Session ID = e75c9708-c257-461f-a740-621d0ec15c92
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive> [root@hadoop11 lib]#
```

If you don't see the messages above while using Hive, you can ignore this issue.

2. Root cause

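Two jars on the class path each contain an SLF4J binding (org/slf4j/impl/StaticLoggerBinder.class): Hive's own log4j-slf4j-impl-2.10.0.jar and Hadoop's slf4j-log4j12-1.7.25.jar. SLF4J can use only one binding, so it picks one (here org.apache.logging.slf4j.Log4jLoggerFactory, from the Hive jar) and prints the warning. In other words, log4j-slf4j-impl-2.10.0.jar in Hive's lib directory was never removed.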
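To confirm which jars are involved before changing anything, you can scan the relevant directories for the binding class. The following is a minimal POSIX sh sketch; `find_slf4j_bindings` is a hypothetical helper name, and it assumes the SLF4J 1.x binding lives at `org/slf4j/impl/StaticLoggerBinder.class`, as the warning above shows.

```shell
#!/bin/sh
# Sketch (hypothetical helper): print every jar under the given directories
# that contains an SLF4J 1.x binding class.
find_slf4j_bindings() {
  for dir in "$@"; do
    # Skip arguments that are not existing directories.
    [ -d "$dir" ] || continue
    find "$dir" -type f -name '*.jar' | sort | while IFS= read -r jar; do
      # Zip entry names are stored uncompressed in the archive headers,
      # so a byte-level grep finds the entry without unpacking the jar.
      if grep -q -a 'org/slf4j/impl/StaticLoggerBinder.class' "$jar"; then
        printf '%s\n' "$jar"
      fi
    done
  done
}
```

For the installation in this post you would run `find_slf4j_bindings /datafs/hive/lib /datafs/hadoop/hadoop-3.1.1/share/hadoop/common/lib`; judging by the warning, it should report the two jars listed there. The byte-level `grep` avoids depending on `unzip`; if `unzip` is installed, `unzip -l "$jar"` is the more explicit way to list a jar's entries.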

3. Solution

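Take log4j-slf4j-impl-2.10.0.jar out of Hive's lib directory. Renaming it with a .bak suffix (run inside /datafs/hive/lib) keeps it off the class path while leaving the change easy to undo: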

```
[root@hadoop11 lib]# mv log4j-slf4j-impl-2.10.0.jar log4j-slf4j-impl-2.10.0.jar.bak
```
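If you need to apply this change on several nodes, or want to be able to revert it, a small wrapper around the same rename can help. This is a sketch with hypothetical function names (`disable_jar`, `enable_jar`); it assumes, as the fix above suggests, that Hive only loads files ending in `.jar` from its lib directory, so the `.bak` file is ignored.

```shell
#!/bin/sh
# Sketch: reversible version of the "mv x.jar x.jar.bak" fix.
# Assumption: Hive only loads *.jar from its lib directory, so a
# file ending in .jar.bak is left off the class path.
disable_jar() {
  target=$1
  if [ -f "$target" ] && [ ! -e "$target.bak" ]; then
    mv "$target" "$target.bak"
    echo "disabled: $target"
  elif [ ! -e "$target" ] && [ -f "$target.bak" ]; then
    echo "already disabled: $target"
  else
    # Either neither file exists, or both do (refuse to overwrite a backup).
    echo "cannot disable: $target" >&2
    return 1
  fi
}

enable_jar() {
  target=$1
  # Restore only when the backup exists and nothing occupies the original name.
  if [ -f "$target.bak" ] && [ ! -e "$target" ]; then
    mv "$target.bak" "$target"
    echo "enabled: $target"
  else
    echo "nothing to restore for: $target" >&2
    return 1
  fi
}
```

For example, `disable_jar /datafs/hive/lib/log4j-slf4j-impl-2.10.0.jar` performs the same rename as above, and `enable_jar` with the same path undoes it. Running `disable_jar` twice is harmless, which makes it safe to put in a provisioning script.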

4. Checking the result

```
[root@hadoop139 lib]# hive
which: no hbase in (/datafs/zookeeper/bin:/datafs/zookeeper/conf:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.292.b10-1.el7_9.aarch64/bin:/datafs/hadoop/hadoop-3.1.1/bin:/datafs/hadoop/hadoop-3.1.1/sbin:/datafs/hadoop/hadoop-3.1.1/libexec:/datafs/hive/bin:/datafs/sqoop-1.4.7.bin__hadoop-2.6.0/bin:/root/bin)
Hive Session ID = 3d4539f8-c091-4d52-935f-af592ece0335
Logging initialized using configuration in file:/datafs/hive/conf/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
Hive Session ID = da41b52a-d740-4dc5-bd73-45f696ef7ea2
hive> [root@hadoop139 lib]#
```

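One message remains, `which: no hbase in (...)`, which only means that no hbase command was found on the PATH. This Hive setup doesn't depend on HBase, so it can be ignored for now. The main goal is achieved: the duplicated SLF4J output is gone.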

5. PS: Where are the Hive logs?

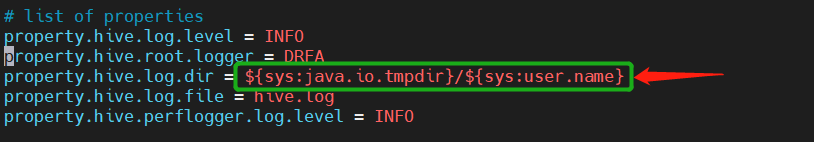

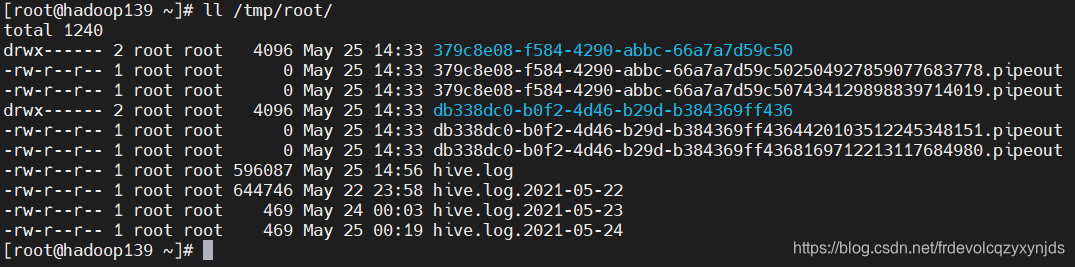

The file /datafs/hive/conf/hive-log4j2.properties configures where Hive stores its logs. By default they go to:

${sys:java.io.tmpdir}/${sys:user.name}

This is the directory where you will find the Hive log files.
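To turn that default into a concrete path, the following sketch reads the directory setting from the properties file and expands the two `${sys:...}` lookups. It assumes the setting is named `property.hive.log.dir`, as in Hive's stock `hive-log4j2.properties` template, and that `java.io.tmpdir` is `/tmp` (the usual JVM default on Linux); `hive_log_dir` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: resolve the Hive log directory from a hive-log4j2.properties file.
# Assumption: the setting is "property.hive.log.dir = ..." as in Hive's
# stock template, and java.io.tmpdir defaults to /tmp (override with $2).
hive_log_dir() {
  conf=$1
  tmpdir=${2:-/tmp}
  user=$(id -un)
  # Take the value of the last property.hive.log.dir line, minus the key and '='.
  dir=$(sed -n 's/^[[:space:]]*property\.hive\.log\.dir[[:space:]]*=[[:space:]]*//p' "$conf" | tail -n 1)
  # Expand only the two lookups used by the default; [$] matches a literal '$'.
  printf '%s\n' "$dir" |
    sed -e "s|[\$]{sys:java[.]io[.]tmpdir}|$tmpdir|g" \
        -e "s|[\$]{sys:user[.]name}|$user|g"
}
```

For example, `ls "$(hive_log_dir /datafs/hive/conf/hive-log4j2.properties)"` lists the log directory; for the root user in this post that would usually be `/tmp/root`. With the stock template the main log file is typically named `hive.log`, but check `property.hive.log.file` in your own copy.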

When doing technical work, always be rigorous!
