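国基北盛 (Guoji Beisheng) - OpenStack - Container Cloud - Environment Setup

Cloud computing skills competition: detailed setup of the 国基北盛 container cloud

Preface

The lab in this article is based on the 国基北盛 pilot-edition competition tasks. The material dates from the end of 2020, so it may not match what other provinces currently use, but what you learn will not differ much. I wrote about this on CSDN before, but that post was too brief and did not record the whole process of solving the tasks. This time I follow the official manual step by step.

Feel free to visit my personal blog.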

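Lab environment

System description: two cloud instances are created in the environment built during the OpenStack private cloud part, and the k8s cluster is deployed on them.

Operating system: CentOS 7.5, kernel version >= 3.10

Docker version: docker-ce-19.03.13

Kubernetes version: 1.18.1

Image files required for the environment:

PaaS image package (chinaskills_cloud_paas.iso)

System image package (CentOS-7-x86_64-DVD-1804.iso)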

| Node role | Hostname | Memory | Disk | IP address |
|---|---|---|---|---|
| Master node | master | 8 GB | 100 GB | 192.168.58.6 |
| Worker node | node | 8 GB | 100 GB | 192.168.58.11 |
| Harbor | master | 8 GB | 100 GB | 192.168.58.6 |
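The requirements listed above (kernel 3.10 or newer, docker-ce-19.03.13) can be checked from a shell. This is a minimal sketch that compares version strings using GNU sort's version ordering (`sort -V`). `version_ge` is a hypothetical helper name and does not come from the competition scripts. The Docker check only works after the Harbor script has installed docker-ce.

```shell
# Hypothetical helper: succeed if version $1 is >= version $2.
# sort -V compares version strings with digit runs taken numerically, and
# -C makes sort exit 0 only if its input is already in order, which here
# means "$2 <= $1".
version_ge() {
    printf '%s\n' "$2" "$1" | sort -V -C
}
```

Example use on each host: `version_ge "$(uname -r)" 3.10 && echo "kernel OK"` checks the kernel. Once Docker is installed, `version_ge "$(docker version --format '{{.Server.Version}}')" 19.03.13 && echo "docker OK"` checks Docker.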

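1. Base environment deployment

After installing CentOS 7.5, upload the official chinaskills_cloud_paas.iso image file to the master node and mount the base system image on the master node.

(If you create the k8s cloud instances on the OpenStack platform, you do not need to mount the base system image. yum on the instances can use the base packages from the physical machine's system.)

Note: every yum repository in this lab except the k8s repository comes from the FTP repository on the controller node. The image files and the repo file on the controller node are shown below.

If you are not sure how to use an FTP repository, see: https://blog.csdn.net/qq_45925514/article/details/111782965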

```
[root@controller ~]# tree
.
├── anaconda-ks.cfg
├── CentOS-7-x86_64-DVD-1804.iso
├── chinaskills_cloud_iaas.iso
└── chinaskills_cloud_paas.iso    # this image will be uploaded to the master node
```

```
# Contents of the repo file
[root@controller ~]# cat /etc/yum.repos.d/ftp.repo
[centos]
name = centos
baseurl = ftp://controller/centos
gpgcheck = 0
enabled = 1

[iaas]
name = iaas
baseurl = ftp://controller/OpenStack/iaas-repo
gpgcheck = 0
enabled = 1
```

```
# Mount points: CentOS 7.5 is mounted under centos, the IaaS image under OpenStack
[root@controller ~]# tree -L 2 /opt/
/opt/
├── centos
│   ├── CentOS_BuildTag
│   ├── EFI
│   ├── EULA
│   ├── GPL
│   ├── images
│   ├── isolinux
│   ├── LiveOS
│   ├── Packages
│   ├── repodata
│   ├── RPM-GPG-KEY-CentOS-7
│   ├── RPM-GPG-KEY-CentOS-Testing-7
│   └── TRANS.TBL
└── OpenStack
    ├── iaas-repo
    └── images
```

```
# Upload the PaaS image to the master node
[root@controller ~]# scp chinaskills_cloud_paas.iso 192.168.58.6:/opt/
The authenticity of host '192.168.58.6 (192.168.58.6)' can't be established.
ECDSA key fingerprint is SHA256:FqTDtd28812m1IAFRjAbURuwoPQQRbq7gqGrEYh77C4.
ECDSA key fingerprint is MD5:1a:d0:c6:aa:89:3a:1c:ed:c6:21:1d:dc:4d:63:e8:33.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.58.6' (ECDSA) to the list of known hosts.
root@192.168.58.6's password:
```

1.1 System initialization (master and node)

1.1.1 Change the hostnames

Rename host docker-1 to master and host docker-2 to node.

```
# docker-1
[root@docker-1 ~]# hostnamectl set-hostname master
[root@docker-1 ~]# bash    # start a new shell so the prompt shows the new name
[root@master ~]# hostnamectl
Static hostname: master
Icon name: computer-vm
Chassis: vm
Machine ID: 622ba110a69e24eda2dca57e4d306baa
Boot ID: 51064e086449407987e3a42a3e53547b
Virtualization: kvm
Operating System: CentOS Linux 7 (Core)
CPE OS Name: cpe:/o:centos:centos:7
Kernel: Linux 3.10.0-862.2.3.el7.x86_64    # kernel version
Architecture: x86-64
```

```
# docker-2
[root@docker-2 ~]# hostnamectl set-hostname node
[root@docker-2 ~]# bash
[root@node ~]# hostnamectl
Static hostname: node
Icon name: computer-vm
Chassis: vm
Machine ID: 622ba110a69e24eda2dca57e4d306baa
Boot ID: 493bc83d9ecb4e36bba1cec5fd1c2328
Virtualization: kvm
Operating System: CentOS Linux 7 (Core)
CPE OS Name: cpe:/o:centos:centos:7
Kernel: Linux 3.10.0-862.2.3.el7.x86_64    # kernel version
Architecture: x86-64
```

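1.1.2 Configure hostname mapping

A cloud instance may have two IP addresses, one internal and one external. The SSH client connects through the external IP, and the instances talk to each other over the internal IP. Either address works as long as the hosts can reach each other. Here I map the hostnames to the internal IPs.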
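To find the internal address, list the IPv4 addresses that are actually configured on the instance. With OpenStack floating IPs, the external address is usually provided by NAT on the Neutron router and does not appear on the instance's own interface. The address that `ip` reports is therefore normally the internal one to put in /etc/hosts. This is a small sketch, and `ipv4_addrs` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ip -4 -o addr show` output on stdin and print
# "interface address" for every IPv4 address except the loopback one.
# In -o (one line per address) output, field 2 is the interface name and
# field 4 is the address with its prefix length, e.g. 10.0.0.6/24.
ipv4_addrs() {
    awk '$3 == "inet" && $2 != "lo" { split($4, a, "/"); print $2, a[1] }'
}
```

Run `ip -4 -o addr show | ipv4_addrs` on each instance. In this lab it should print 10.0.0.6 on master. The interface name depends on the image.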

```
# master node
[root@master ~]# vim /etc/hosts
[root@master ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.6 master
10.0.0.21 node

# Copy the hosts file to node
[root@master ~]# scp /etc/hosts node:/etc/hosts
The authenticity of host 'node (10.0.0.21)' can't be established.
ECDSA key fingerprint is SHA256:FqTDtd28812m1IAFRjAbURuwoPQQRbq7gqGrEYh77C4.
ECDSA key fingerprint is MD5:1a:d0:c6:aa:89:3a:1c:ed:c6:21:1d:dc:4d:63:e8:33.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'node,10.0.0.21' (ECDSA) to the list of known hosts.
hosts 100% 190 1.6KB/s 00:00

[root@node ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.0.0.6 master
10.0.0.21 node
```

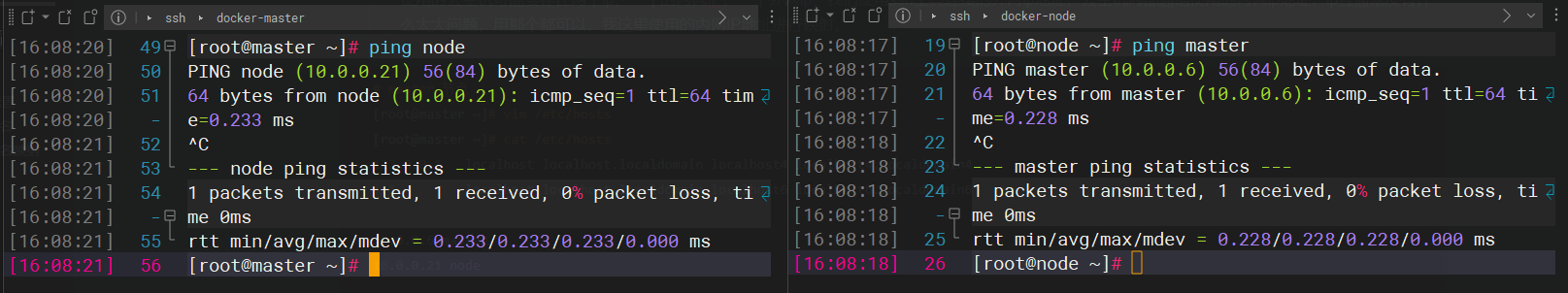

Check that the hostname mapping works.
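The mapping can also be checked without a screenshot. `getent hosts` resolves names the same way ssh and scp do. The small awk helper below checks the hosts file content itself, which is convenient inside a script. This is a sketch, and `check_host_mapping` is a hypothetical name that is not part of the competition material.

```shell
# Hypothetical helper: succeed if HOSTS_FILE maps NAME to IP.
# Comment lines are skipped; NAME may appear in any hostname/alias column.
check_host_mapping() {
    local hosts_file=$1 ip=$2 name=$3
    awk -v ip="$ip" -v name="$name" '
        /^[[:space:]]*#/ { next }          # skip comment lines
        $1 == ip {
            for (i = 2; i <= NF; i++)       # columns 2..NF are names/aliases
                if ($i == name) { found = 1; exit }
        }
        END { if (found) exit 0; exit 1 }
    ' "$hosts_file"
}
```

Run these on both master and node. `getent hosts master node` should list 10.0.0.6 and 10.0.0.21. `check_host_mapping /etc/hosts 10.0.0.21 node && echo ok` prints ok.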

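1.1.3 Disable the firewall and SELinux

On these cloud instances, SELinux and the firewall are disabled by default. If you use virtual machines or physical machines, this step is required.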

```
## Flush the iptables rules
[root@master ~]# iptables -F
[root@master ~]# iptables -X
[root@master ~]# iptables -Z
[root@master ~]# iptables -L
Chain INPUT (policy ACCEPT)
target prot opt source destination

Chain FORWARD (policy ACCEPT)
target prot opt source destination

Chain OUTPUT (policy ACCEPT)
target prot opt source destination
[root@master ~]# iptables-save
# Generated by iptables-save v1.4.21 on Tue Mar 15 08:10:58 2022
*filter
:INPUT ACCEPT [33:1948]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [20:1936]
COMMIT
# Completed on Tue Mar 15 08:10:58 2022

## Disable SELinux
[root@master ~]# sed -i 's/SELINUX=.*/SELINUX=disabled/g' /etc/selinux/config    # persistent setting in the config file
[root@master ~]# setenforce 0    # temporary setting, takes effect immediately
setenforce: SELinux is disabled
[root@master ~]# getenforce    # check the current mode
Disabled

## Disable firewalld
[root@master ~]# systemctl stop firewalld
[root@master ~]# systemctl disable firewalld
```
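The sed pattern above is not anchored. In the stock /etc/selinux/config it therefore also rewrites the comment line `# SELINUX= can take one of these three values:`. The result is harmless, but anchoring the pattern to the start of the line leaves that comment alone. The sketch below wraps the anchored edit and a read-back check in two functions. Both function names are hypothetical.

```shell
# Hypothetical helper: persistently disable SELinux in CFG.
# Anchoring on ^SELINUX= leaves the "# SELINUX= can take ..." comment and the
# SELINUXTYPE= line untouched.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Hypothetical helper: print the persistent SELinux mode recorded in CFG.
selinux_config_mode() {
    local cfg=${1:-/etc/selinux/config}
    sed -n 's/^SELINUX=//p' "$cfg" | tail -n 1
}
```

After running it on both nodes, `selinux_config_mode` should print disabled, and `getenforce` should not report Enforcing. For firewalld, `systemctl is-active firewalld` should print inactive and `systemctl is-enabled firewalld` should print disabled.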

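1.1.4 Configure the yum repositories

As mentioned under base environment deployment, the base repository comes from the controller node's FTP server. The PaaS repository is also served over FTP, from an FTP server we set up locally on master, so the node can use it too.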

```
## The PaaS image was already copied to the master node above
## Mount the PaaS image and copy its contents to /opt
[root@master opt]# ls
chinaskills_cloud_paas.iso
[root@master opt]# mount chinaskills_cloud_paas.iso /mnt/
mount: /dev/loop0 is write-protected, mounting read-only
[root@master opt]# ll /mnt/
total 64
dr-xr-xr-x 1 root root 2048 Oct 23 2020 ChinaskillMall
dr-xr-xr-x 1 root root 2048 Oct 23 2020 ChinaskillProject
dr-xr-xr-x 1 root root 2048 Oct 23 2020 docker-compose
dr-xr-xr-x 1 root root 2048 Oct 23 2020 harbor
dr-xr-xr-x 1 root root 6144 Oct 23 2020 images
-r-xr-xr-x 1 root root 3049 Oct 21 2020 k8s_harbor_install.sh
-r-xr-xr-x 1 root root 5244 Oct 21 2020 k8s_image_push.sh
-r-xr-xr-x 1 root root 1940 Oct 21 2020 k8s_master_install.sh
-r-xr-xr-x 1 root root 3055 Oct 21 2020 k8s_node_install.sh
dr-xr-xr-x 1 root root 20480 Oct 23 2020 kubernetes-repo
dr-xr-xr-x 1 root root 14336 Oct 23 2020 plugins
dr-xr-xr-x 1 root root 2048 Oct 23 2020 yaml
[root@master opt]# cp -rf /mnt/* /opt/
```

```
## master node
[root@master opt]# mv /etc/yum.repos.d/* /home/    # back up the original repo files
[root@master opt]# vim /etc/yum.repos.d/ftp.repo
[centos]
name = centos
baseurl = ftp://controller/centos
gpgcheck = 0
enabled = 1

[k8s]
name = kubernetes-repo
baseurl = file:///opt/kubernetes-repo
gpgcheck = 0
enabled = 1
```

The centos baseurl points at the controller node's repository. If master has no hostname mapping for controller, replace controller with the controller node's IP address. Using the IP is recommended.

```
## Rebuild the repo cache
[root@master opt]# yum clean all && yum makecache
Loaded plugins: fastestmirror
Cleaning repos: centos k8s
Cleaning up everything
Maybe you want: rm -rf /var/cache/yum, to also free up space taken by orphaned data from disabled or removed repos
Cleaning up list of fastest mirrors
Loaded plugins: fastestmirror
Determining fastest mirrors
centos | 3.6 kB 00:00:00
k8s | 3.0 kB 00:00:00
(1/7): centos/group_gz | 166 kB 00:00:00
(2/7): centos/filelists_db | 3.1 MB 00:00:00
(3/7): centos/primary_db | 3.1 MB 00:00:00
(4/7): centos/other_db | 1.3 MB 00:00:00
(5/7): k8s/filelists_db | 138 kB 00:00:00
(6/7): k8s/primary_db | 161 kB 00:00:00
(7/7): k8s/other_db | 80 kB 00:00:00
Metadata Cache Created
```

```
## Install vsftpd so that master can serve an FTP repository
[root@master opt]# yum install -y tree net-tools vsftpd
Installed:
tree.x86_64 0:1.6.0-10.el7 vsftpd.x86_64 0:3.0.2-27.el7
Updated:
net-tools.x86_64 0:2.0-0.25.20131004git.el7
```

```
## Set /opt as the root directory for anonymous FTP access, then start vsftpd
[root@master opt]# echo 'anon_root=/opt' >> /etc/vsftpd/vsftpd.conf
[root@master opt]# cat /etc/vsftpd/vsftpd.conf | grep anon_root
anon_root=/opt
[root@master opt]# systemctl start vsftpd
[root@master opt]# systemctl enable vsftpd
Created symlink from /etc/systemd/system/multi-user.target.wants/vsftpd.service to /usr/lib/systemd/system/vsftpd.service.
```
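Appending with `echo ... >>` works the first time, but repeating the step adds a second anon_root line. This is a sketch of an idempotent alternative. It replaces an existing uncommented key, or appends the key if it is missing. `set_conf_option` is a hypothetical name. The function assumes the plain `key=value` format without spaces that vsftpd.conf uses.

```shell
# Hypothetical helper: set KEY=VALUE in FILE, replacing an existing
# uncommented KEY= line or appending one. Running it twice does not create
# a duplicate line. KEY must be a plain word and VALUE must not contain
# "|" or "&" (they are special in the sed replacement below).
set_conf_option() {
    local file=$1 key=$2 value=$3
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

Example: `set_conf_option /etc/vsftpd/vsftpd.conf anon_root /opt && systemctl restart vsftpd`. From node, `curl ftp://master/kubernetes-repo/` should then list the repository directory.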

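```
## Edit ftp.repo so the k8s repository is also fetched over FTP from master
## (anon_root is /opt, so ftp://master/kubernetes-repo serves /opt/kubernetes-repo)
[root@master opt]# cat /etc/yum.repos.d/ftp.repo | grep baseurl
baseurl = ftp://controller/centos
baseurl = ftp://master/kubernetes-repo
```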
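Instead of editing ftp.repo in vim on each node, the final version can be written in one step. That version takes the CentOS packages from controller and the k8s packages from master, both over FTP. This is a sketch that assumes the section names and settings shown above. `write_ftp_repo` is a hypothetical name. If the names controller and master are not mapped on a host, pass IP addresses instead.

```shell
# Hypothetical helper: write the final ftp.repo.
#   $1 = host serving the CentOS repo (controller, or its IP)
#   $2 = host serving the k8s repo (master, or its IP)
#   $3 = output file (default /etc/yum.repos.d/ftp.repo)
write_ftp_repo() {
    local centos_host=$1 k8s_host=$2 out=${3:-/etc/yum.repos.d/ftp.repo}
    cat > "$out" <<EOF
[centos]
name = centos
baseurl = ftp://${centos_host}/centos
gpgcheck = 0
enabled = 1

[k8s]
name = kubernetes-repo
baseurl = ftp://${k8s_host}/kubernetes-repo
gpgcheck = 0
enabled = 1
EOF
}
```

Example: on master and on node, run `write_ftp_repo controller master`, followed by `yum clean all && yum makecache`.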

```
## Rebuild the cache again
[root@master opt]# yum clean all && yum makecache
Loaded plugins: fastestmirror
Cleaning repos: centos k8s
Cleaning up everything
...
Metadata Cache Created
```

```
## Copy the finished repo file to the node
[root@node ~]# mv /etc/yum.repos.d/* /home/    # back up the repo files on node
[root@master opt]# scp /etc/yum.repos.d/ftp.repo node:/etc/yum.repos.d/    # copy ftp.repo from master to node
ftp.repo 100% 176 120.6KB/s 00:00
[root@node ~]# ls /etc/yum.repos.d/    # check
ftp.repo
[root@node ~]# yum clean all && yum makecache    # rebuild the cache
Loaded plugins: fastestmirror
Cleaning repos: centos k8s
Cleaning up everything
...
Metadata Cache Created
```

```
## Show the number of packages in each repo
[root@node ~]# yum repolist
Loaded plugins: fastestmirror
Loading mirror speeds from cached hostfile
repo id repo name status
centos centos 3,971
k8s kubernetes-repo 168
repolist: 4,139
```
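To check the repositories from a script rather than by eye, you can extract each repo's package count from `yum repolist`. This is a sketch, and `repo_pkg_count` is a hypothetical name. It assumes the repo id appears in the first column exactly as in the output above. Repositories whose ids are shown as something like base/7/x86_64 would not match this simple comparison.

```shell
# Hypothetical helper: read `yum repolist` output on stdin and print the
# package count of REPO_ID with thousands separators removed
# (e.g. 3,971 -> 3971). Fails if REPO_ID is not listed.
repo_pkg_count() {
    awk -v id="$1" '
        $1 == id { n = $NF; gsub(/,/, "", n); print n; found = 1 }
        END { if (!found) exit 1 }
    '
}
```

Example: `yum repolist | repo_pkg_count k8s` should print 168 for the repository shown above.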

1.2、部署Harbor仓库

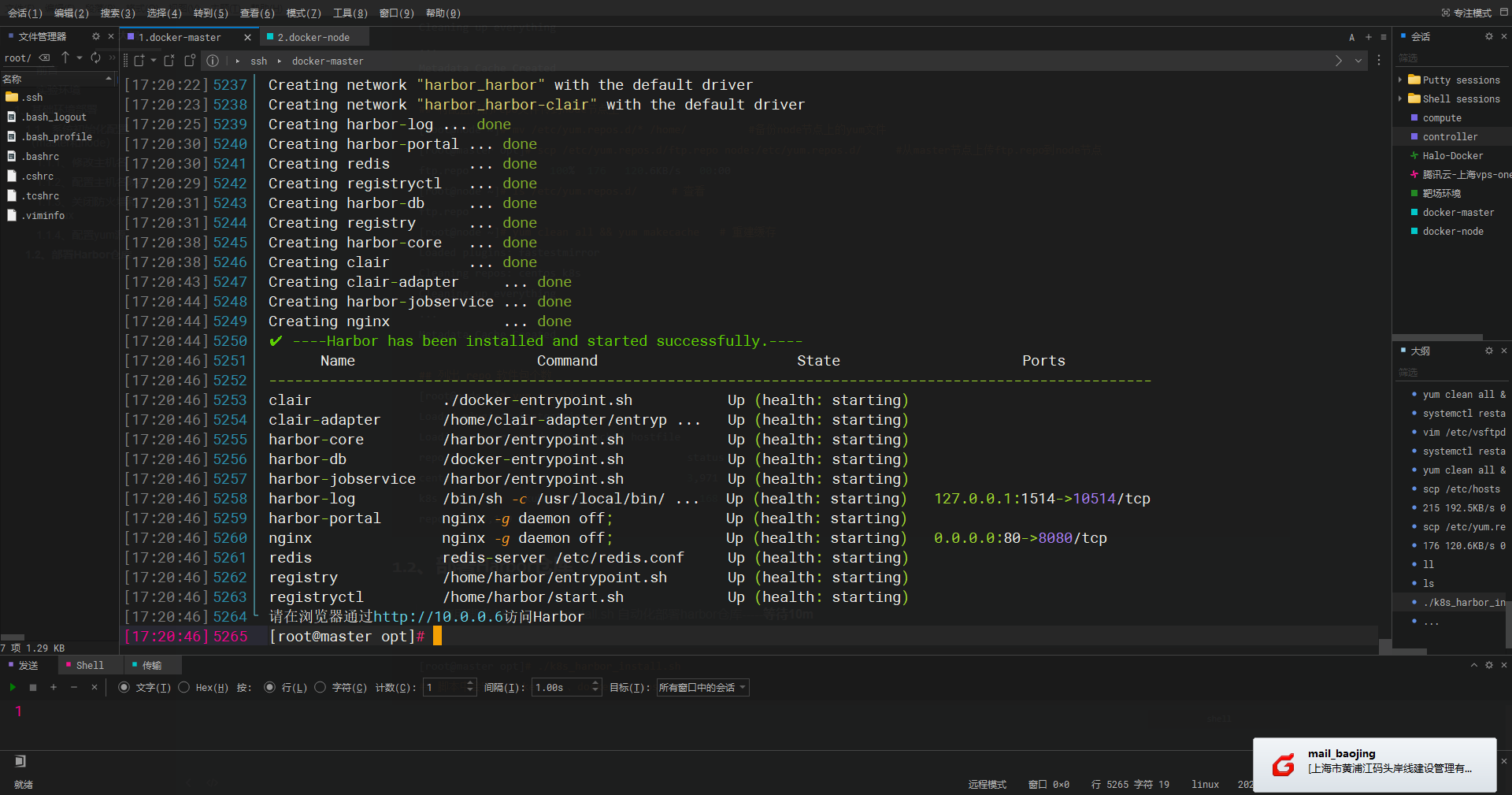

使用脚本 k8s_harbor_install.sh 自动化部署harbor仓库-----等待10m

```
[root@master opt]# ./k8s_harbor_install.sh
## The script also installs docker-ce and docker-compose
```

部署完成

图中给出的是内网的访问地址,我们是无法在浏览器直接访问的,我们使用外网的IP进行访问Harbor仓库即可

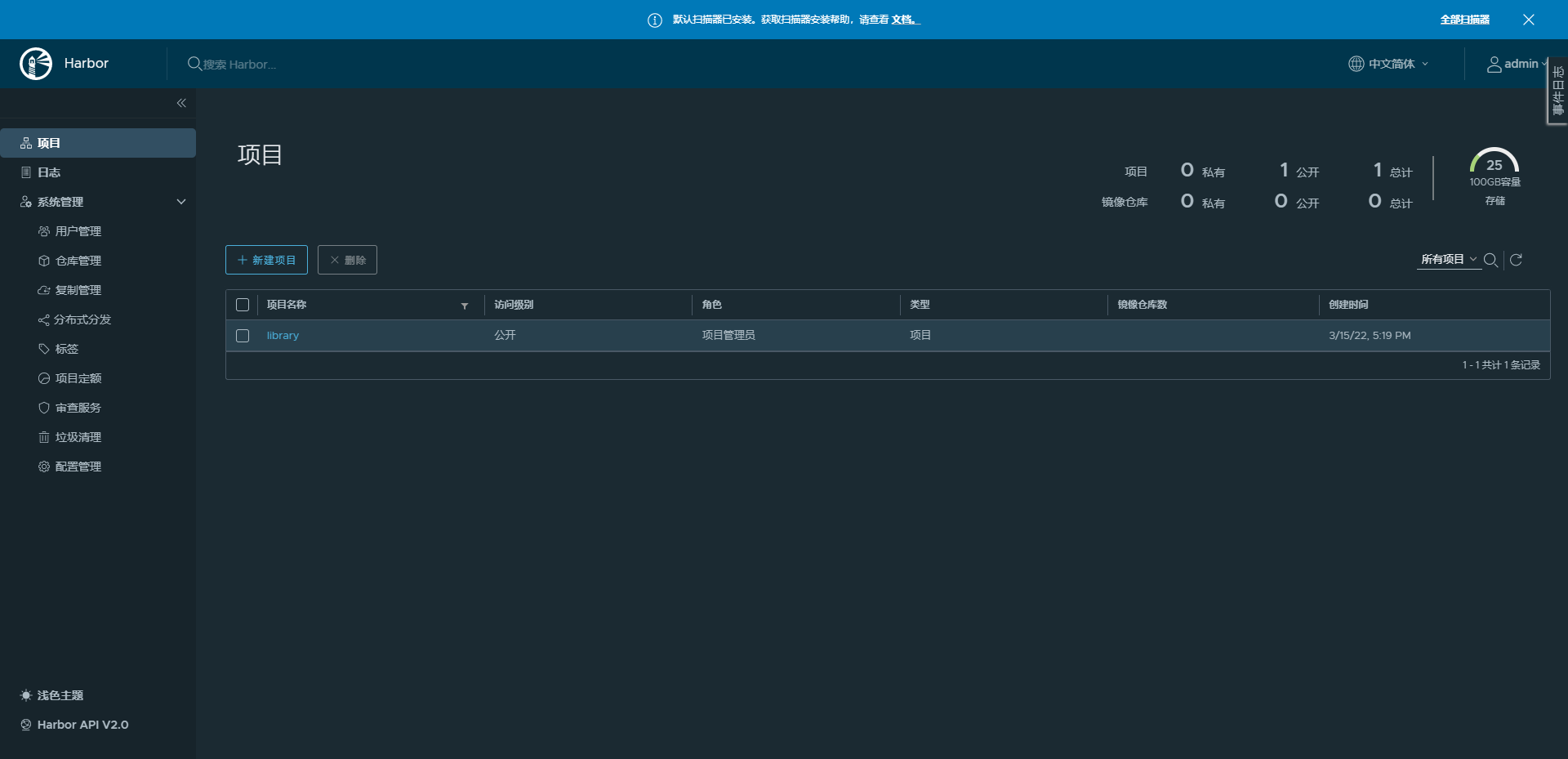

# Harbor 默认账号:admin

# Harbor 默认密码:Harbor12345

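1.3 Push images to the Harbor registry

Use the k8s_image_push.sh script to push all images prepared in the PaaS package to our registry. Later deployments then pull images from our own registry, which is faster.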

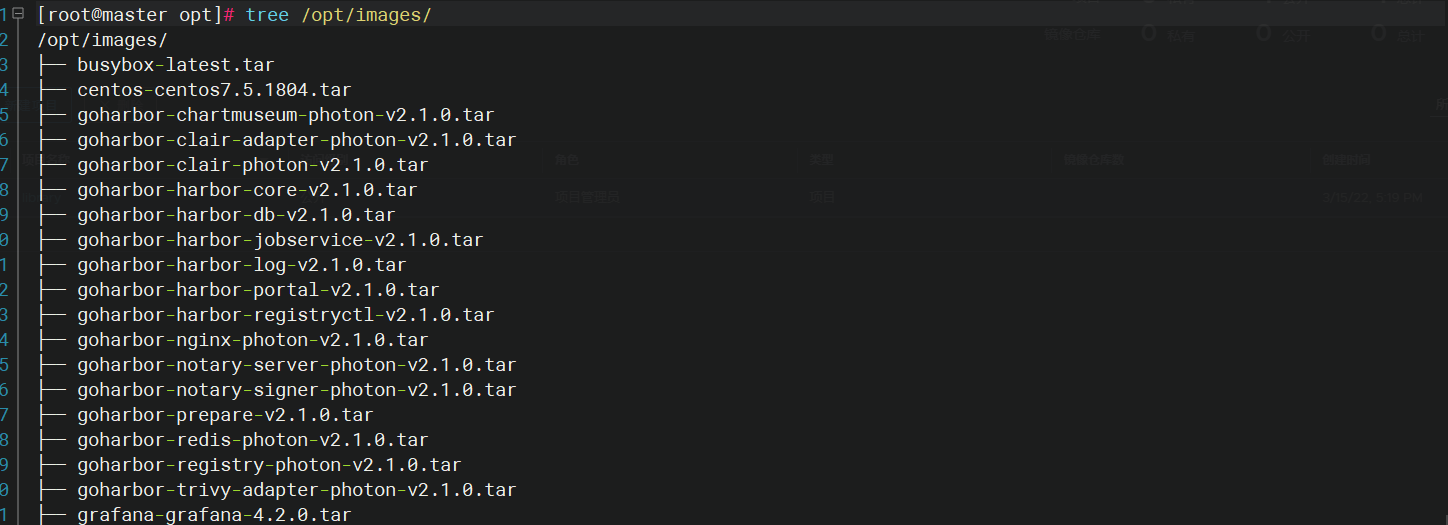

The image tar archives are all under /opt/images.

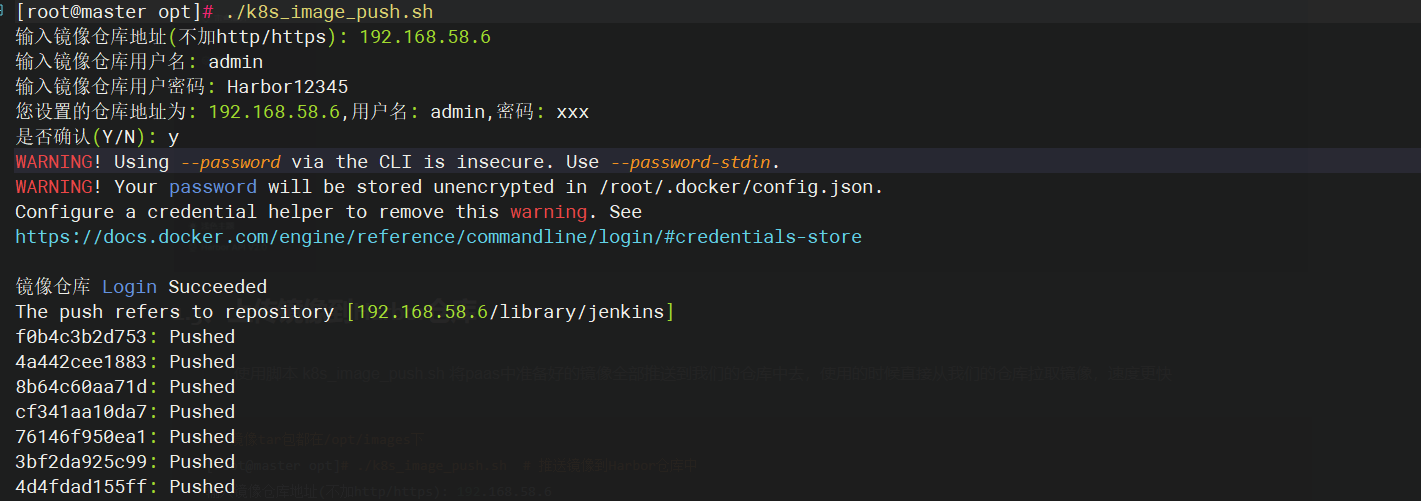

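```
[root@master opt]# ./k8s_image_push.sh    # push the images to the Harbor registry
Enter the registry address (without http/https): 192.168.58.6
Enter the registry username: admin
Enter the registry password: Harbor12345
Registry address set to: 192.168.58.6, username: admin, password: xxx
Confirm (Y/N): y
```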

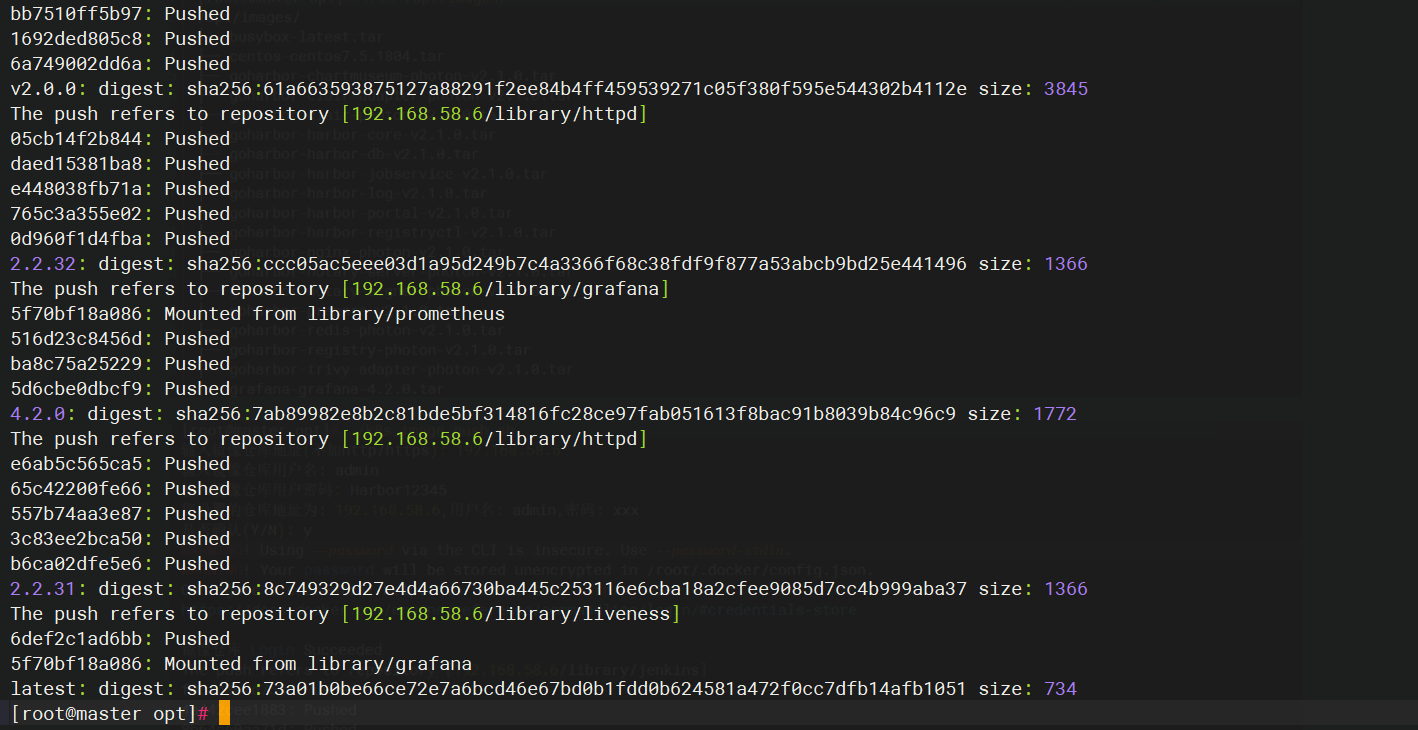

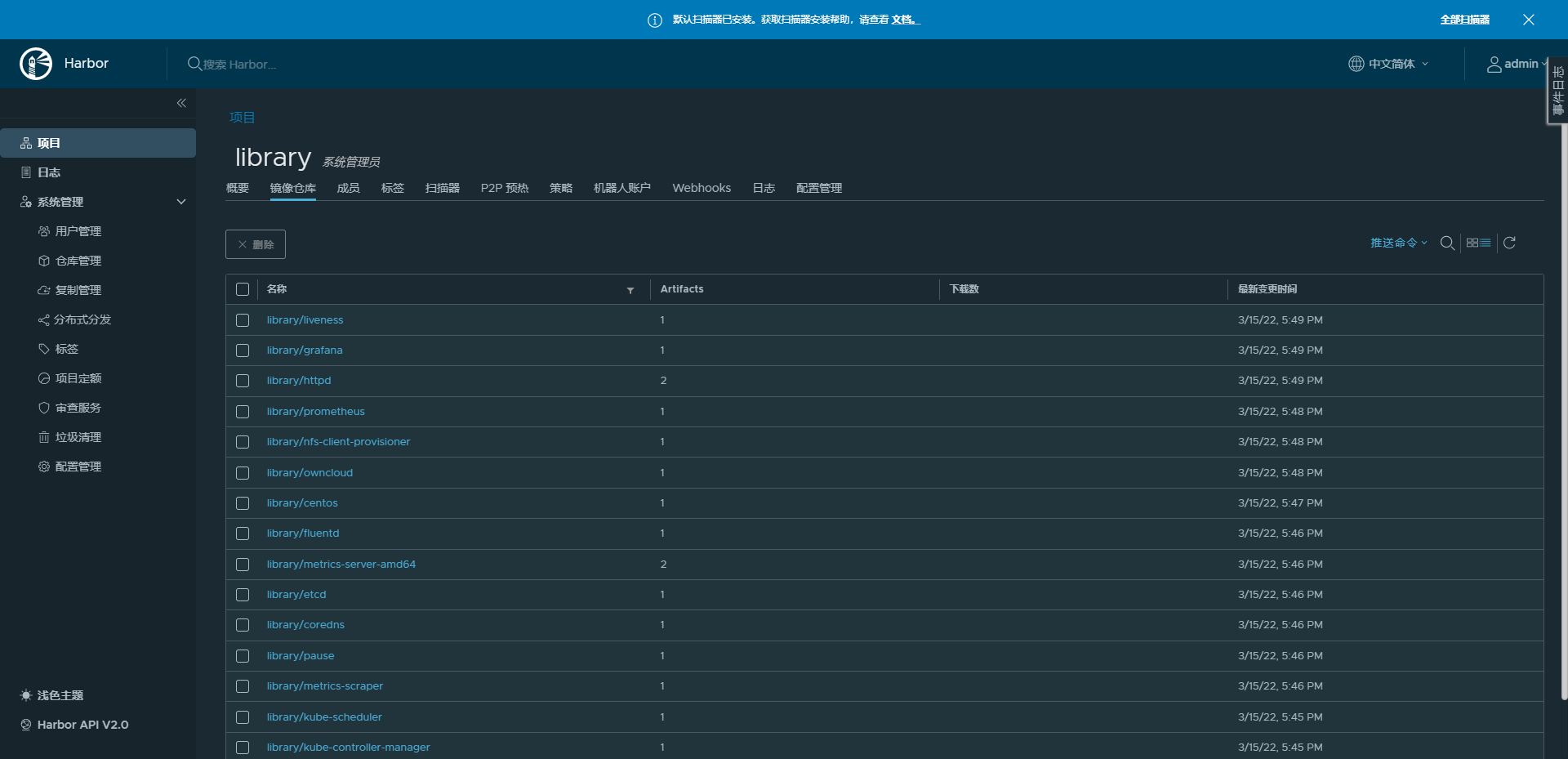

Once all images are pushed, you can see them in the Harbor web UI under Projects -> library.
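The contents of k8s_image_push.sh are not shown here. Conceptually, pushing a saved image to Harbor means loading the tar archive, retagging the image as REGISTRY/library/NAME:TAG, and pushing that tag. library is the project mentioned above. The sketch below computes only the target names and prints the docker commands instead of running them. The helper names and the example image names are hypothetical, and the real script may name things differently.

```shell
# Hypothetical helpers, not taken from k8s_image_push.sh.

# Map a source image reference to REGISTRY/PROJECT/NAME:TAG. Everything up to
# the last "/" (registry host, namespace) is dropped; an untagged image gets
# docker's default tag "latest". Digest references (@sha256:...) are not handled.
harbor_image_ref() {
    local registry=$1 project=$2 src=$3 name
    name=${src##*/}                  # e.g. "kube-apiserver:v1.18.1"
    case $name in
        *:*) ;;                      # already has a tag
        *) name="$name:latest" ;;
    esac
    printf '%s/%s/%s\n' "$registry" "$project" "$name"
}

# Print, without running, the tag and push commands for each source image.
plan_push() {
    local registry=$1 src target
    shift
    for src in "$@"; do
        target=$(harbor_image_ref "$registry" library "$src")
        printf 'docker tag %s %s\n' "$src" "$target"
        printf 'docker push %s\n' "$target"
    done
}
```

For example, `plan_push 192.168.58.6 nginx:1.19` prints a `docker tag` command and a `docker push` command for 192.168.58.6/library/nginx:1.19. Running those commands by hand requires `docker login 192.168.58.6 -u admin` first.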

1.4 Deploy the Kubernetes cluster

Run the k8s_master_install.sh script to deploy Kubernetes automatically.

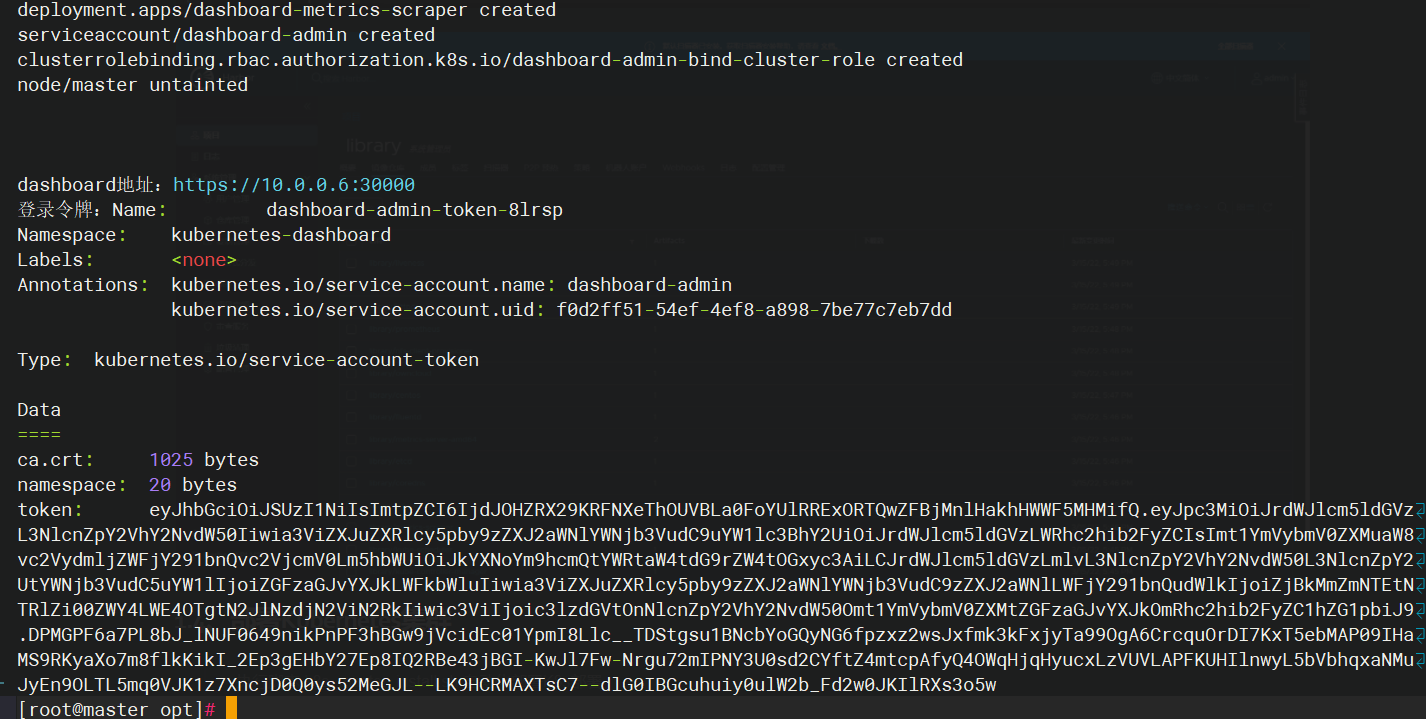

```
[root@master opt]# ./k8s_master_install.sh
```

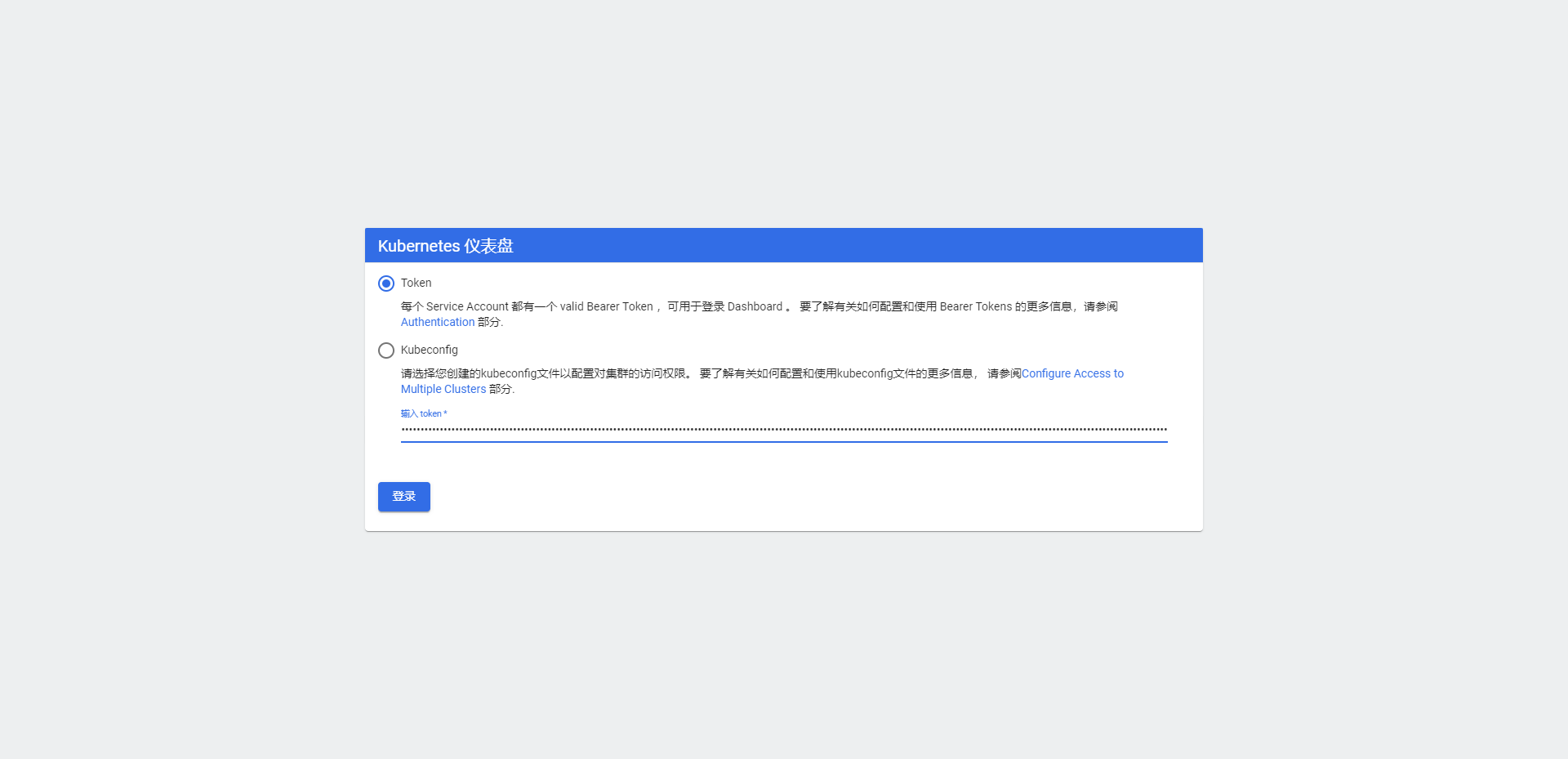

Token用于登录web端平台时的身份验证,登录地址:https://IP:30000

现在集群中只有master节点,还没有加入node节点,需要再执行下一个脚本将node节点加入到集群中

1.5、将node节点加入集群

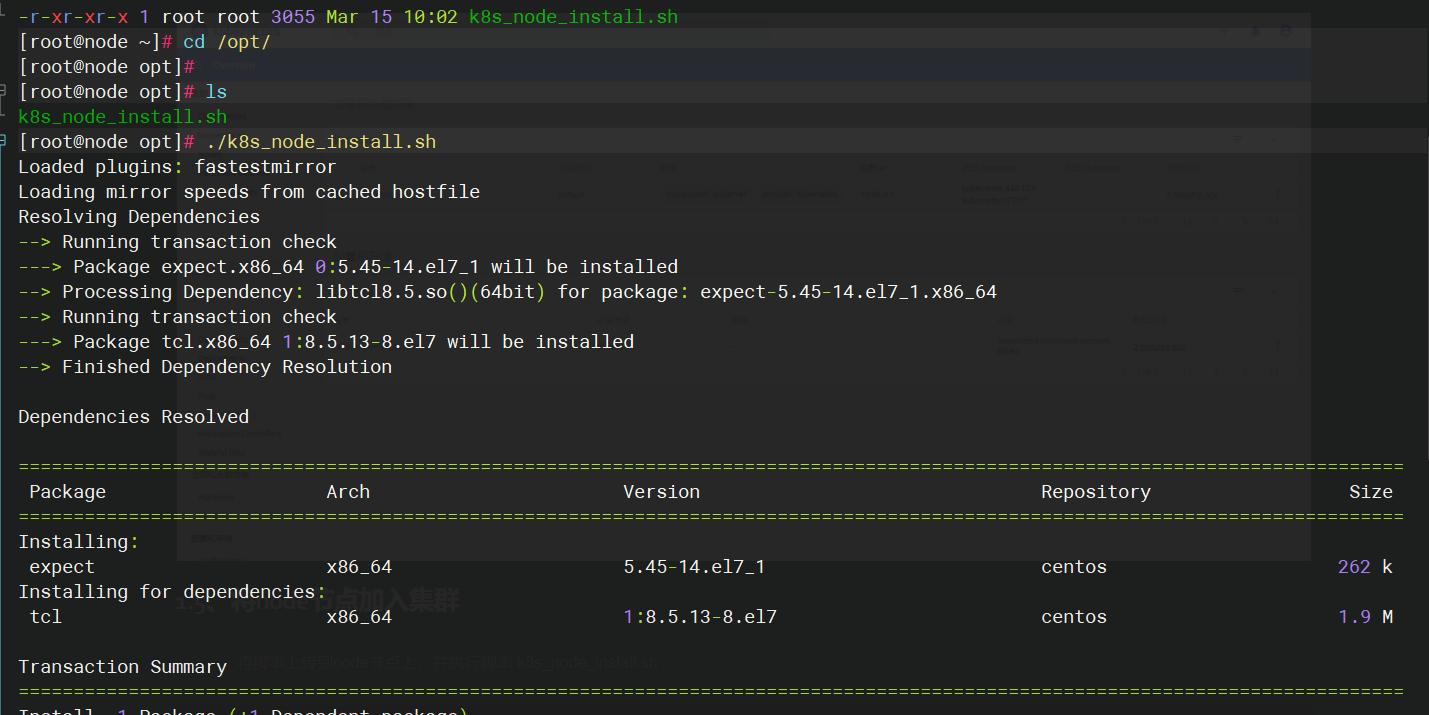

将脚本上传到node节点上,并执行脚本 k8s_node_install.sh

```
[root@master opt]# scp k8s_node_install.sh node:/opt/
k8s_node_install.sh 100% 3055 1.7MB/s 00:00
[root@node opt]# ./k8s_node_install.sh
```

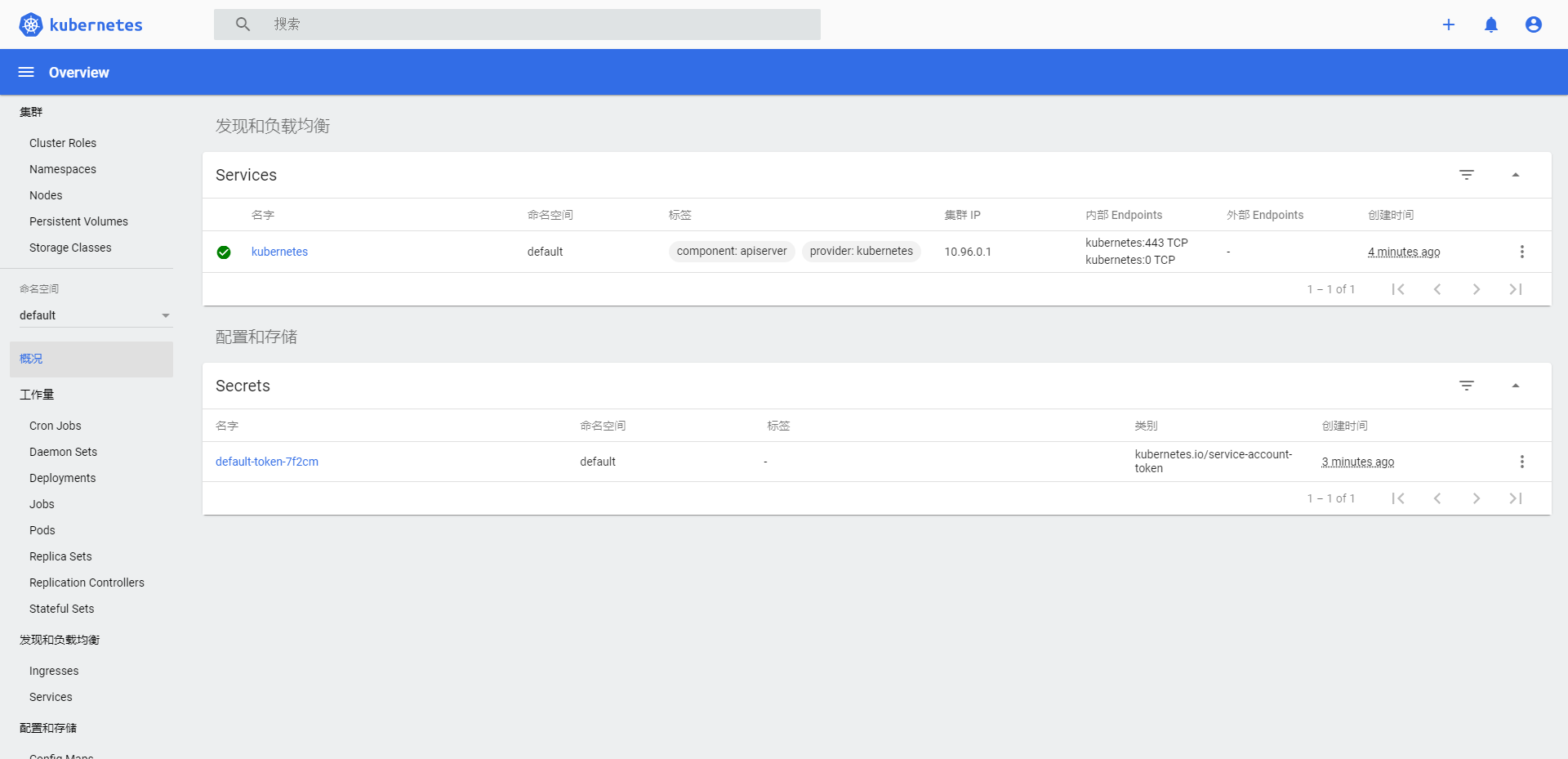

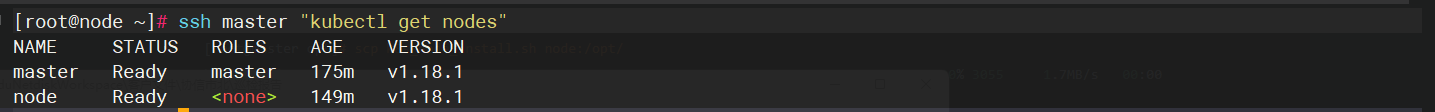

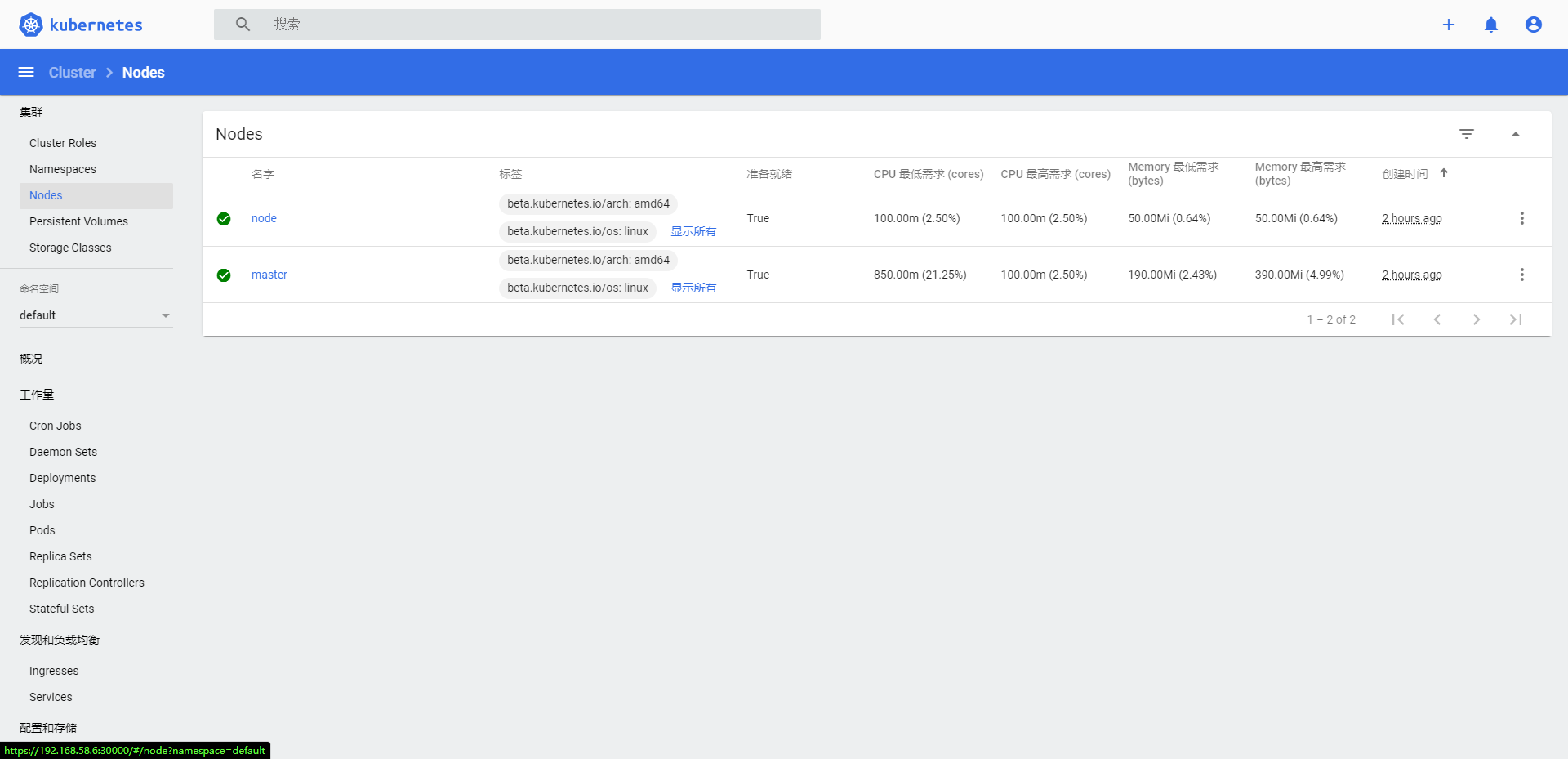

After the node joins, the Kubernetes dashboard shows two nodes. You can also list the cluster nodes from the command line with kubectl get nodes.
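For a scripted check that both nodes are up, you can filter the output of `kubectl get nodes`. This is a sketch, and `all_nodes_ready` is a hypothetical name. It assumes the default column layout, in which the second column is STATUS. A freshly joined node can show NotReady for a short time until its network plugin is running.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed
# only if at least one node is listed and every node's STATUS starts with
# "Ready" (so "Ready,SchedulingDisabled" counts, "NotReady" does not).
# Nodes that are not ready are printed as "not ready: NAME".
all_nodes_ready() {
    awk '
        NF == 0 { next }                          # ignore blank lines
        $1 == "NAME" && $2 == "STATUS" { next }   # skip the header if present
        {
            total++
            if ($2 !~ /^Ready/) { bad++; print "not ready: " $1 }
        }
        END { if (total > 0 && bad == 0) exit 0; exit 1 }
    '
}
```

Example, on master: `kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`.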

This completes the container cloud setup.
