Spark-bug总结之一次task长时间卡住不动,一直Running的问题解决

目录现象排查现象spark提交任务后,某一个Stage卡住,通过spark界面看到,executor运行正常,卡住的Stage的task已经分配至executor,但task 一直在running并且数据量不大,task不结束,同时log中也无异常报出。20/07/27 07:40:13 INFO CoarseGrainedExecutorBackend: Started daemon with

·

目录

现象

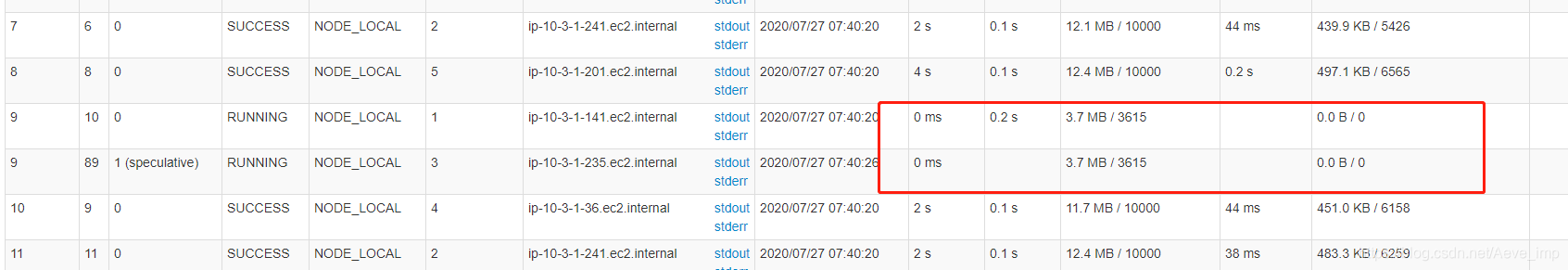

spark提交任务后,某一个Stage卡住,通过spark界面看到,executor运行正常,卡住的Stage的task已经分配至executor,但task 一直在running并且数据量不大,task不结束,同时log中也无异常报出。

20/07/27 07:40:13 INFO CoarseGrainedExecutorBackend: Started daemon with process name: 15841@ip-10-3-1-141.ec2.internal

20/07/27 07:40:13 INFO SignalUtils: Registered signal handler for TERM

20/07/27 07:40:13 INFO SignalUtils: Registered signal handler for HUP

20/07/27 07:40:13 INFO SignalUtils: Registered signal handler for INT

20/07/27 07:40:14 INFO SecurityManager: Changing view acls to: hadoop

20/07/27 07:40:14 INFO SecurityManager: Changing modify acls to: hadoop

20/07/27 07:40:14 INFO SecurityManager: Changing view acls groups to:

20/07/27 07:40:14 INFO SecurityManager: Changing modify acls groups to:

20/07/27 07:40:14 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

20/07/27 07:40:14 INFO TransportClientFactory: Successfully created connection to ip-10-3-1-201.ec2.internal/10.3.1.201:44707 after 52 ms (0 ms spent in bootstraps)

20/07/27 07:40:14 INFO SecurityManager: Changing view acls to: hadoop

20/07/27 07:40:14 INFO SecurityManager: Changing modify acls to: hadoop

20/07/27 07:40:14 INFO SecurityManager: Changing view acls groups to:

20/07/27 07:40:14 INFO SecurityManager: Changing modify acls groups to:

20/07/27 07:40:14 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

20/07/27 07:40:14 INFO TransportClientFactory: Successfully created connection to ip-10-3-1-201.ec2.internal/10.3.1.201:44707 after 1 ms (0 ms spent in bootstraps)

20/07/27 07:40:14 INFO DiskBlockManager: Created local directory at /data/hadoop/tmp/nm-local-dir/usercache/hadoop/appcache/application_1595219623503_0058/blockmgr-25af1e8e-f969-490e-9299-a07ec34195ea

20/07/27 07:40:14 INFO MemoryStore: MemoryStore started with capacity 2004.6 MB

20/07/27 07:40:14 INFO CoarseGrainedExecutorBackend: Connecting to driver: spark://CoarseGrainedScheduler@ip-10-3-1-201.ec2.internal:44707

20/07/27 07:40:14 INFO CoarseGrainedExecutorBackend: Successfully registered with driver

20/07/27 07:40:14 INFO Executor: Starting executor ID 1 on host ip-10-3-1-141.ec2.internal

20/07/27 07:40:14 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 33713.

20/07/27 07:40:14 INFO NettyBlockTransferService: Server created on ip-10-3-1-141.ec2.internal:33713

20/07/27 07:40:14 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

20/07/27 07:40:14 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(1, ip-10-3-1-141.ec2.internal, 33713, None)

20/07/27 07:40:14 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(1, ip-10-3-1-141.ec2.internal, 33713, None)

20/07/27 07:40:14 INFO BlockManager: external shuffle service port = 7337

20/07/27 07:40:14 INFO BlockManager: Registering executor with local external shuffle service.

20/07/27 07:40:14 INFO TransportClientFactory: Successfully created connection to ip-10-3-1-141.ec2.internal/10.3.1.141:7337 after 1 ms (0 ms spent in bootstraps)

20/07/27 07:40:14 INFO BlockManager: Initialized BlockManager: BlockManagerId(1, ip-10-3-1-141.ec2.internal, 33713, None)

20/07/27 07:40:20 INFO CoarseGrainedExecutorBackend: Got assigned task 0

20/07/27 07:40:20 INFO CoarseGrainedExecutorBackend: Got assigned task 5

20/07/27 07:40:20 INFO CoarseGrainedExecutorBackend: Got assigned task 10

20/07/27 07:40:20 INFO CoarseGrainedExecutorBackend: Got assigned task 15

20/07/27 07:40:20 INFO Executor: Running task 14.0 in stage 0.0 (TID 15)

20/07/27 07:40:20 INFO Executor: Running task 1.0 in stage 0.0 (TID 0)

20/07/27 07:40:20 INFO Executor: Running task 4.0 in stage 0.0 (TID 5)

20/07/27 07:40:20 INFO Executor: Running task 9.0 in stage 0.0 (TID 10)

20/07/27 07:40:20 INFO TorrentBroadcast: Started reading broadcast variable 1

20/07/27 07:40:20 INFO TransportClientFactory: Successfully created connection to ip-10-3-1-201.ec2.internal/10.3.1.201:37427 after 1 ms (0 ms spent in bootstraps)

20/07/27 07:40:20 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 8.6 KB, free 2004.6 MB)

20/07/27 07:40:20 INFO TorrentBroadcast: Reading broadcast variable 1 took 87 ms

20/07/27 07:40:20 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 19.7 KB, free 2004.6 MB)

20/07/27 07:40:20 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/03/202007260314300.3784922616723815.txt:0+13016575

20/07/27 07:40:20 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/01/202007260108090.40969421478725176.txt:0+13654215

20/07/27 07:40:20 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/02/202007260249140.927476060257793.txt:0+4578622

20/07/27 07:40:20 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/00/202007260031380.15291583926088292.txt:0+13329307

20/07/27 07:40:20 INFO TorrentBroadcast: Started reading broadcast variable 0

20/07/27 07:40:20 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 26.0 KB, free 2004.5 MB)

20/07/27 07:40:20 INFO TorrentBroadcast: Reading broadcast variable 0 took 11 ms

20/07/27 07:40:20 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 389.8 KB, free 2004.2 MB)

20/07/27 07:40:21 WARN RetryInvocationHandler: A failover has occurred since the start of this method invocation attempt.

20/07/27 07:40:21 WARN RetryInvocationHandler: A failover has occurred since the start of this method invocation attempt.

20/07/27 07:40:21 WARN RetryInvocationHandler: A failover has occurred since the start of this method invocation attempt.

20/07/27 07:40:21 INFO CodeGenerator: Code generated in 173.545789 ms

20/07/27 07:40:21 INFO CodeGenerator: Code generated in 7.001628 ms

20/07/27 07:40:21 INFO CodeGenerator: Code generated in 9.54327 ms

20/07/27 07:40:21 INFO CodeGenerator: Code generated in 9.463361 ms

20/07/27 07:40:22 INFO CodeGenerator: Code generated in 19.136314 ms

20/07/27 07:40:22 INFO CodeGenerator: Code generated in 11.578724 ms

20/07/27 07:40:22 INFO Executor: Finished task 4.0 in stage 0.0 (TID 5). 1842 bytes result sent to driver

20/07/27 07:40:22 INFO Executor: Finished task 14.0 in stage 0.0 (TID 15). 1842 bytes result sent to driver

20/07/27 07:40:22 INFO Executor: Finished task 1.0 in stage 0.0 (TID 0). 1842 bytes result sent to driver

20/07/27 07:40:22 INFO CoarseGrainedExecutorBackend: Got assigned task 24

20/07/27 07:40:22 INFO Executor: Running task 24.0 in stage 0.0 (TID 24)

20/07/27 07:40:22 INFO CoarseGrainedExecutorBackend: Got assigned task 25

20/07/27 07:40:22 INFO Executor: Running task 25.0 in stage 0.0 (TID 25)

20/07/27 07:40:22 INFO CoarseGrainedExecutorBackend: Got assigned task 26

20/07/27 07:40:22 INFO Executor: Running task 26.0 in stage 0.0 (TID 26)

20/07/27 07:40:22 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/05/202007260559020.1679287146580668.txt:0+12975407

20/07/27 07:40:22 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/06/202007260627590.15476360731872263.txt:0+12695783

20/07/27 07:40:22 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/06/202007260608480.2114375683619245.txt:0+12605707

20/07/27 07:40:23 INFO Executor: Finished task 25.0 in stage 0.0 (TID 25). 1842 bytes result sent to driver

20/07/27 07:40:23 INFO CoarseGrainedExecutorBackend: Got assigned task 38

20/07/27 07:40:23 INFO Executor: Running task 37.0 in stage 0.0 (TID 38)

20/07/27 07:40:23 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/10/202007261012380.11490823191165889.txt:0+13020375

20/07/27 07:40:23 INFO Executor: Finished task 24.0 in stage 0.0 (TID 24). 1842 bytes result sent to driver

20/07/27 07:40:23 INFO CoarseGrainedExecutorBackend: Got assigned task 41

20/07/27 07:40:23 INFO Executor: Running task 41.0 in stage 0.0 (TID 41)

20/07/27 07:40:23 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/11/202007261116520.8547158132034373.txt:0+13735003

20/07/27 07:40:23 INFO Executor: Finished task 26.0 in stage 0.0 (TID 26). 1842 bytes result sent to driver

20/07/27 07:40:23 INFO CoarseGrainedExecutorBackend: Got assigned task 42

20/07/27 07:40:23 INFO Executor: Running task 43.0 in stage 0.0 (TID 42)

20/07/27 07:40:23 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/11/202007261140550.9553149774235719.txt:0+13191287

20/07/27 07:40:24 INFO Executor: Finished task 37.0 in stage 0.0 (TID 38). 1842 bytes result sent to driver

20/07/27 07:40:24 INFO CoarseGrainedExecutorBackend: Got assigned task 61

20/07/27 07:40:24 INFO Executor: Running task 61.0 in stage 0.0 (TID 61)

20/07/27 07:40:24 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/18/202007261838530.5045655129054344.txt:0+13993759

20/07/27 07:40:24 INFO Executor: Finished task 43.0 in stage 0.0 (TID 42). 1842 bytes result sent to driver

20/07/27 07:40:24 INFO CoarseGrainedExecutorBackend: Got assigned task 62

20/07/27 07:40:24 INFO Executor: Running task 63.0 in stage 0.0 (TID 62)

20/07/27 07:40:24 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/19/202007261908510.355772341432538.txt:0+16076175

20/07/27 07:40:24 INFO Executor: Finished task 41.0 in stage 0.0 (TID 41). 1885 bytes result sent to driver

20/07/27 07:40:24 INFO CoarseGrainedExecutorBackend: Got assigned task 64

20/07/27 07:40:24 INFO Executor: Running task 64.0 in stage 0.0 (TID 64)

20/07/27 07:40:24 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/19/202007261941150.7662820278184762.txt:0+16114275

20/07/27 07:40:24 INFO Executor: Finished task 64.0 in stage 0.0 (TID 64). 1842 bytes result sent to driver

20/07/27 07:40:24 INFO CoarseGrainedExecutorBackend: Got assigned task 76

20/07/27 07:40:24 INFO Executor: Running task 71.0 in stage 0.0 (TID 76)

20/07/27 07:40:24 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/21/202007262144390.5792509832271843.txt:0+15898115

20/07/27 07:40:24 INFO Executor: Finished task 61.0 in stage 0.0 (TID 61). 1842 bytes result sent to driver

20/07/27 07:40:25 INFO CoarseGrainedExecutorBackend: Got assigned task 77

20/07/27 07:40:25 INFO Executor: Running task 78.0 in stage 0.0 (TID 77)

20/07/27 07:40:25 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/22/202007262244370.10744673727723486.txt:0+14887763

20/07/27 07:40:25 INFO Executor: Finished task 63.0 in stage 0.0 (TID 62). 1842 bytes result sent to driver

20/07/27 07:40:25 INFO CoarseGrainedExecutorBackend: Got assigned task 78

20/07/27 07:40:25 INFO Executor: Running task 79.0 in stage 0.0 (TID 78)

20/07/27 07:40:25 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/22/202007262244540.6265893918572715.txt:0+15494875

20/07/27 07:40:25 INFO Executor: Finished task 78.0 in stage 0.0 (TID 77). 1842 bytes result sent to driver

20/07/27 07:40:25 INFO CoarseGrainedExecutorBackend: Got assigned task 88

20/07/27 07:40:25 INFO Executor: Running task 86.0 in stage 0.0 (TID 88)

20/07/27 07:40:25 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/23/202007262342290.46674710381105455.txt:0+14971231

20/07/27 07:40:25 INFO Executor: Finished task 71.0 in stage 0.0 (TID 76). 1842 bytes result sent to driver

20/07/27 07:40:25 INFO Executor: Finished task 79.0 in stage 0.0 (TID 78). 1842 bytes result sent to driver

20/07/27 07:40:26 INFO Executor: Finished task 86.0 in stage 0.0 (TID 88). 1842 bytes result sent to driver

20/07/27 07:40:26 INFO CoarseGrainedExecutorBackend: Got assigned task 91

20/07/27 07:40:26 INFO Executor: Running task 52.1 in stage 0.0 (TID 91)

20/07/27 07:40:26 INFO CoarseGrainedExecutorBackend: Got assigned task 94

20/07/27 07:40:26 INFO Executor: Running task 47.1 in stage 0.0 (TID 94)

20/07/27 07:40:26 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/16/202007261655150.6932849758940415.txt:0+13964655

20/07/27 07:40:26 INFO HadoopRDD: Input split: hdfs://hdfscluster/spark/htmlparse/20200726/12/202007261236290.1707930240428044.txt:0+6242332

20/07/27 07:40:26 INFO Executor: Finished task 47.1 in stage 0.0 (TID 94). 1799 bytes result sent to driver

20/07/27 07:40:27 INFO Executor: Finished task 52.1 in stage 0.0 (TID 91). 1799 bytes result sent to driver

排查

- 分析没有异常说明程序本身没有问题,并且之前定时跑的都没问题,框架运行也运行正常,很有可能是因为该stage操作较为复杂,将CPU打满。

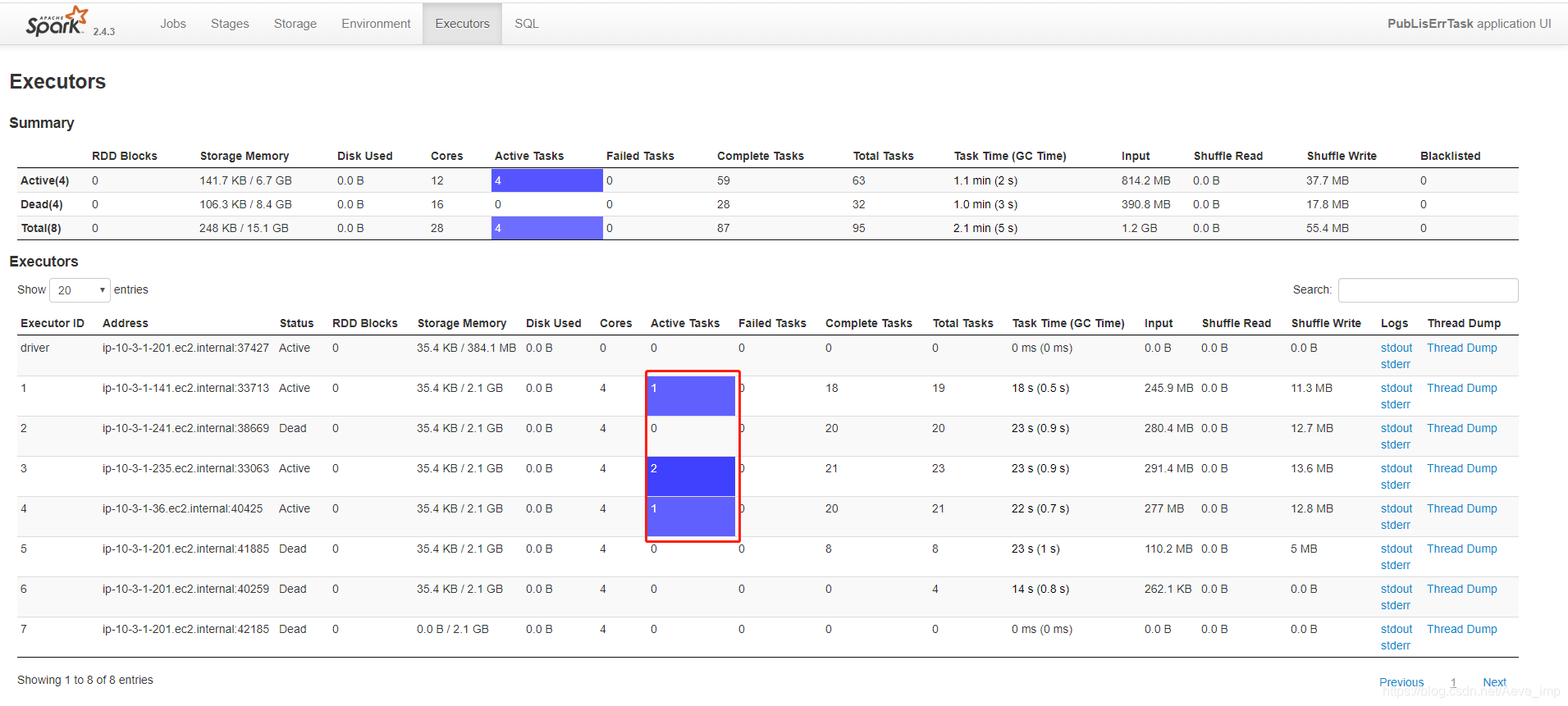

- 通过spark监控界面找到task被分配到的机器,并登陆,通过jps -ml 打印出具体的java进程信息。

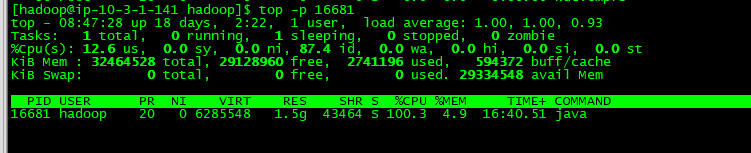

- 得到pid之后,使用top -p 观察该process,发现确实CPU占用非常高,说明某项操作将CPU占满,此时task并未失败,而是一直在进行

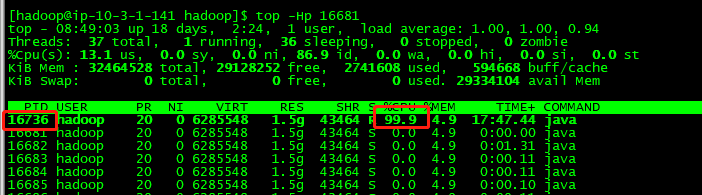

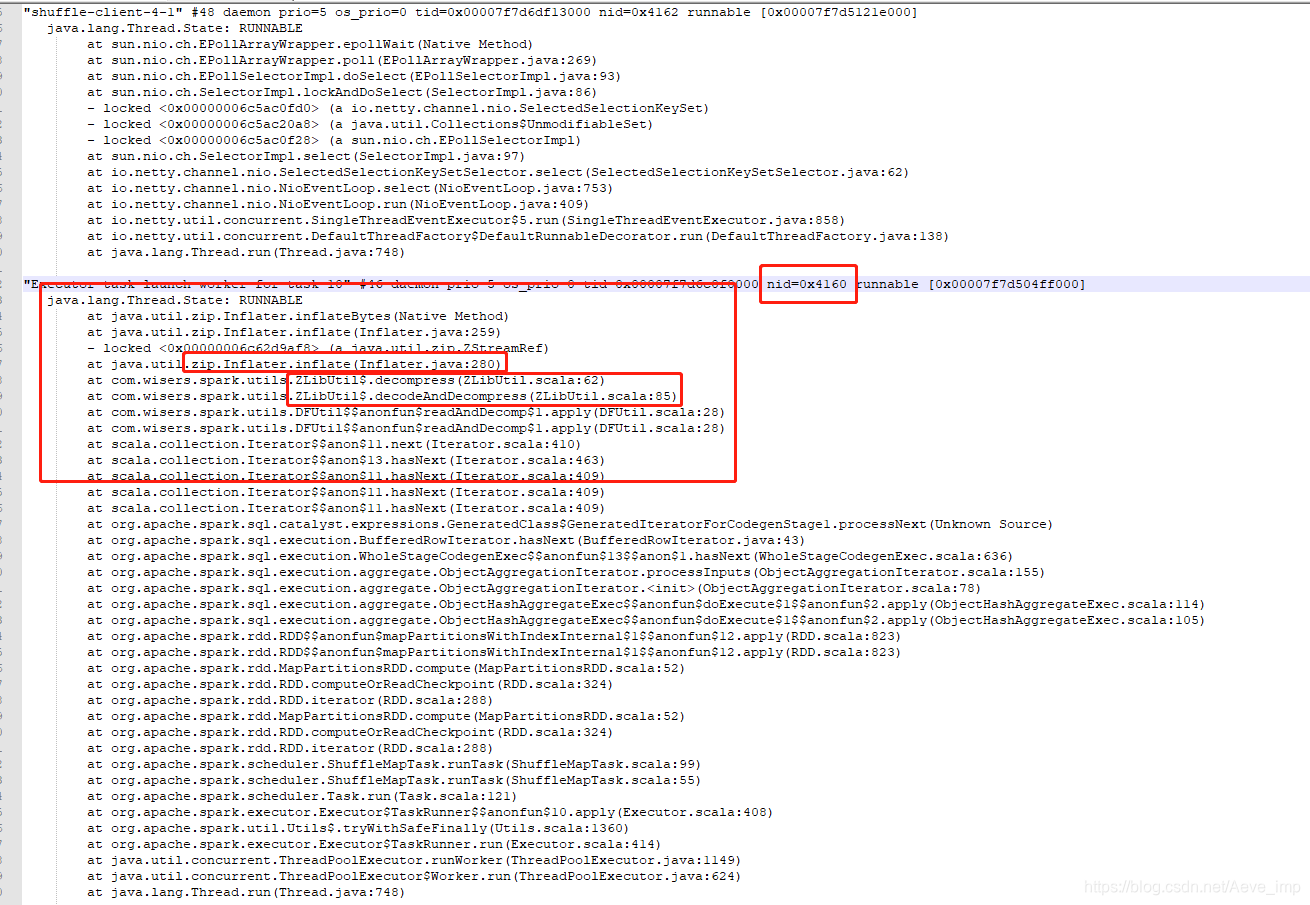

- 通过top -Hp {pid}将该进程内部线程信息打印,获得哪条线程占用CPU高

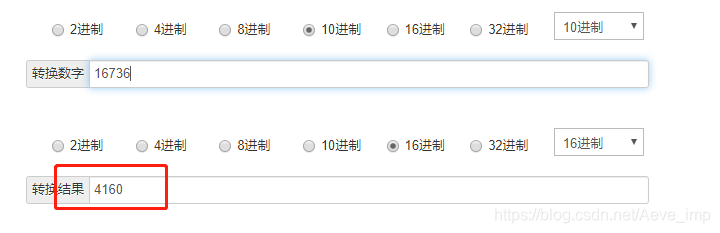

- 通过jstack {pid} 将进程堆栈打印,并将上一轮操作中获取的线程号在jstack文件中查询,定位线程(获取的线程号为十进制,需将获取的线程id转换为十六进制,再进行对照

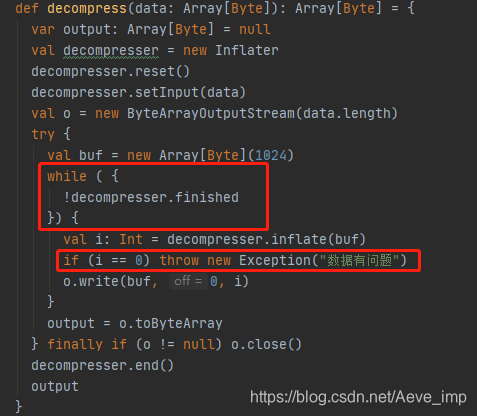

- 最终定位到是我们的一个zlib工具类,用来解base64和解压缩的。debug代码发现,有一条数据因为压缩不完善或者写入的有问题,导致在解压缩的时候,工具类一直认为该字符串未终止,所以就陷入了while的无限循环。

- 在每次解压获取下次字节时,判断如果为0,即未读到数据,则终止循环,抛弃该条数据。

- 重新打包,跑任务,发现任务恢复正常。

更多推荐

已为社区贡献2条内容

已为社区贡献2条内容

所有评论(0)