buffer/cach内存占用过高及k8s java后端pod容器超出内存限制被kill重启

现象之前某个后端服务pod在不停的重启导致线上环境很不稳定,于是开始分析问题的原因

现象

之前某个后端服务pod一直在不停的重启导致线上环境很不稳定,于是开始分析问题的原因

分析pod日志和服务器日志

- 执行

kubectl describe po <pod名> -n <命名空间>查看pod所在Node的ip和Events有时候通过Events可以直接找到问题原因 - 执行

kubectl logs --tail 100 <pod名> -n <命名空间>查看服务最后一百行日志或者用logs -p选项查看前一个 pod 的日志,分析是否是服务原因导致pod重启 - 若仍然没找到原因可以去分析下pod所在节点的系统服务日志例如

/var/log/messages

搜索和重启时间相近的日志发现内存不足导致pod被kill, 报错信息如下:Nov 11 17:30:43 host kernel: Memory cgroup out of memory: Kill process 31719 (grpc-default-ex) score 0 or sacrifice child Nov 11 17:30:43 host kernel: Killed process 24288 (java) total-vm:3747220kB, anon-rss:450448kB, file-rss:15868kB, shmem-rss:0kB Nov 11 17:30:43 host dockerd: time="2021-11-11T17:30:43.533974651+08:00" level=info msg="ignoring event" container=55ca2298ca9379b8bfcb4d977f9aeeb2eaa155f0126009bdab2747f8b1b5a77c module=libcontainerd namespace=moby topic=/tasks/delete type="*events.TaskDelete" Nov 11 17:30:43 host containerd: time="2021-11-11T17:30:43.535142442+08:00" level=info msg="shim disconnected" id=55ca2298ca9379b8bfcb4d977f9aeeb2eaa155f0126009bdab2747f8b1b5a77c Nov 11 17:30:43 host containerd: time="2021-11-11T17:30:43.535214178+08:00" level=error msg="copy shim log" error="read /proc/self/fd/75: file already closed" Nov 11 17:30:43 host dockerd: time="2021-11-11T17:30:43.619404307+08:00" level=info msg="ignoring event" container=84129b38c689b77781da0ac9f44811747d5cac1420565ee2848f96f08cc50634 module=libcontainerd namespace=moby topic=/tasks/delete type="*events.TaskDelete" Nov 11 17:30:43 host containerd: time="2021-11-11T17:30:43.620511825+08:00" level=info msg="shim disconnected" id=84129b38c689b77781da0ac9f44811747d5cac1420565ee2848f96f08cc50634 Nov 11 17:30:43 host containerd: time="2021-11-11T17:30:43.620570486+08:00" level=error msg="copy shim log" error="read /proc/self/fd/79: file already closed"

获取更多进程被kill 的相关信息可以用下面的命令

dmesg | egrep -i -B100 'killed process'

# 或:

egrep -i 'killed process' /var/log/messages

egrep -i -r 'killed process' /var/log

## 或:

journalctl -xb | egrep -i 'killed process'

分析并释放系统内存

执行 free -h 查看系统内存发现buff/cache占用特别高,如下:

[root@host ~]# free -h

total used free shared buff/cache available

Mem: 15G 3.4G 618M 3.0M 11G 10G

Swap: 0B 0B 0B

[root@host ~]#

使用hcache查看buff/cache占用情况

执行下面的命令安装hcache ,原hcache项目地址 https://github.com/silenceshell/hcache

wget https://silenceshell-1255345740.cos.ap-shanghai.myqcloud.com/hcache

chmod +x hcache

mv hcache /usr/local/bin/

执行hcache -top 10查看占用buff/cache最多的前十个进程,发现journal日志服务占用最多缓存

[root@host ~]# hcache -top 10

+-------------------------------------------------------------------------------------------------------------------------------------+----------------+------------+-----------+---------+

| Name | Size (bytes) | Pages | Cached | Percent |

|-------------------------------------------------------------------------------------------------------------------------------------+----------------+------------+-----------+---------|

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000329407-0005c6699e58bd55.journal | 109051904 | 26624 | 26624 | 100.000 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000304c93-0005c6690938adc6.journal | 109051904 | 26624 | 26624 | 100.000 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000034db1c-0005c66a3238c43e.journal | 109051904 | 26624 | 26624 | 100.000 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000003bb016-0005c66bee8ea309.journal | 109051904 | 26624 | 26624 | 100.000 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000396912-0005c66b5a798a6b.journal | 109051904 | 26624 | 26618 | 099.977 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000037226d-0005c66ac733c378.journal | 109051904 | 26624 | 25587 | 096.105 |

| /usr/bin/kubelet | 109974336 | 26850 | 24274 | 090.406 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000272f82-0005c666b726fd70.journal | 109051904 | 26624 | 23422 | 087.973 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000029773f-0005c6674ca7fd05.journal | 109051904 | 26624 | 23174 | 087.042 |

| /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000022a0b5-0005c6658ce98577.journal | 109051904 | 26624 | 23118 | 086.831 |

+-------------------------------------------------------------------------------------------------------------------------------------+----------------+------------+-----------+---------+

清理journal系统服务日志释放buff/cache

journalctl 清理日志有 -vacuum-size和 -vacuum-time两个 选项

-

使用

-vacuum-size选项, 可硬性指定日志的总体体积,意味着其会不断删除旧有记录直到所占容量符合要求sudo journalctl --vacuum-size=1G -

使用

-vacuum-time选项, 任何早于这一时间点的条目都将被删除。例如,去年之后的条目才能保留:sudo journalctl --vacuum-time=1years清理journal系统服务日志之后发现释放了接近四个G内存

[root@host ~]# journalctl --vacuum-size=10M Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000022a0b5-0005c6658ce98577.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000024e80d-0005c666220a2165.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000272f82-0005c666b726fd70.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000029773f-0005c6674ca7fd05.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000002bbe76-0005c667e154f260.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000002e05de-0005c668764a4097.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000304c93-0005c6690938adc6.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000329407-0005c6699e58bd55.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000034db1c-0005c66a3238c43e.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000037226d-0005c66ac733c378.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000396912-0005c66b5a798a6b.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000003bb016-0005c66bee8ea309.journal (104.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000003dd5d7-0005c6797dbd575b.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000003f3b1a-0005c6c7d65b43da.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000408a0c-0005c71ac660287a.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000041d99b-0005c76adf59ca72.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000432929-0005c7ba9d58e557.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004478e6-0005c7fc337a8744.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000045c963-0005c83c478e85f1.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000047193f-0005c88c6a028753.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004868b7-0005c8db9e0f4dca.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000049b85f-0005c92bf3a76242.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004b081b-0005c97cc0bc7999.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004c5792-0005c9ce7ed64920.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004da773-0005ca20902c33f1.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-00000000004ea60c-0005ca5efa6b85ae.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000004ef6d5-0005ca72cb147442.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-0000000000504084-0005cac288df1cd2.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005046ab-0005cac403a650c0.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-000000000050846e-0005cad2cc6b96ff.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000519683-0005cb144065a5c7.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000052e60d-0005cb648be882a1.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000054367a-0005cbb3da7cdeea.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000558628-0005cc01b433b9d2.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-0000000000563571-0005cc29a0f9f556.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000056d5f2-0005cc4e137659ad.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-000000000057e128-0005cc8ae52ea534.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005825d4-0005cc9a71258775.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-00000000005839b8-0005cc9efcc61da0.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005975a2-0005cce6a90a6723.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/user-1000@97a8aea485f24ad4838f0d1c95fe7d25-00000000005aad16-0005cd2d33ee489a.journal (8.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005ac57b-0005cd32bd5a00cd.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005c15ce-0005cd7f30cc6f2c.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005d6642-0005cdcdb55d1af0.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-00000000005eb6a5-0005ce18e29fdbf4.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000600697-0005ce68c7c50474.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000061558d-0005ced1fec33fd1.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000062a47d-0005cf438e0f44fc.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-000000000063f464-0005cf9db0bf93d4.journal (88.0M). Deleted archived journal /var/log/journal/20190711105006363114529432776998/system@00000000000000000000000000000000-0000000000654435-0005cfef91fc1a9f.journal (88.0M). Vacuuming done, freed 3.9G of archived journals on disk. [root@host ~]# free -h total used free shared buff/cache available Mem: 15G 3.5G 4.0G 3.0M 7.8G 10G Swap: 0B 0B 0B或者 修改

/etc/systemd/journald.confStorage=none后执行systemctl restart systemd-journald重启-journald进程其他更多有关

journalctl命令的操作请参考https://www.cnblogs.com/jasonboren/p/11493353.html

https://www.cnblogs.com/JerryTomcat/p/14742086.html

其他方法清理buff/cache

在保证了数据一致性的前提下还可以使用下面的命令清理buff/cache,谨慎使用

sync #描述:sync 命令运行 sync 子例程。如果必须停止系统,则运行sync 命令以确保文件系统的完整性。sync 命令将所有未写的系统缓冲区写到磁盘中,包含已修改的 i-Node、已延迟的块 I/O 和读写映射文件

echo 1 > /proc/sys/vm/drop_caches #表示清除pagecache。

echo 2 > /proc/sys/vm/drop_caches #表示清除回收slab分配器中的对象(包括目录项缓存和inode缓存)。slab分配器是内核中管理内存的一种机制,其中很多缓存数据实现都是用的pagecache。

echo 3 > /proc/sys/vm/drop_caches #表示清除pagecache和slab分配器中的缓存对象。

有关该命令和buff/cache的详细解释请参考 https://www.cnblogs.com/cpw6/p/11711817.html

定时任务清理脚本:

#! /bin/sh

used=`free -m | awk 'NR==2' | awk '{print $3}'`

free=`free -m | awk 'NR==2' | awk '{print $4}'`

echo "===========================" >> /var/log//mem.log

date >> /var/log//mem.log

echo "Memory usage before | [Use:${used}MB][Free:${free}MB]" >> /var/log/mem.log

if [ $free -le 4000 ] ; then

sync && echo 1 > /proc/sys/vm/drop_caches

sync && echo 2 > /proc/sys/vm/drop_caches

sync && echo 3 > /proc/sys/vm/drop_caches

used_ok=`free -m | awk 'NR==2' | awk '{print $3}'`

free_ok=`free -m | awk 'NR==2' | awk '{print $4}'`

echo "Memory usage after | [Use:${used_ok}MB][Free:${free_ok}MB]" >> /var/log//mem.log

echo "OK" >> /var/log//mem.log

else

echo "Not required" >> /var/log//mem.log

fi

exit 1

使用crontab -e命令编辑当前用户的crontab 然后执行sudo service crond restart重启定时任务服务即可生效

修改pod容器镜像参数配置

释放系统内存过后发现系统内存足够但是pod仍然会被kill,那么只能怀疑是pod的问题了.

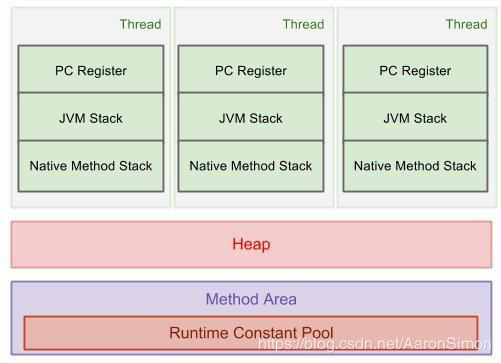

由于制作的镜像未对JVM进行配置,那么 JVM 会默认设置堆栈的大小,这个大小是根据物理机的内存进行分配的。 那么物理机的内存越大,默认分配的最大堆栈就越大(最大堆栈=1/4 * 物理机内存,初始堆栈=1/16 * 物理机内存),而我对Docker容器做了内存限制,JVM 无法感知(不知道自己在Docker容器中运行),就会出现container 被docker killed情况。

- 解决办法

启动时设置最大的堆大小 例如:设置最大堆为600M,-Xmx600m

需要特别注意的是docker镜像的内存上限,不能全部给java -Xms300m -Xmx600m -jar demo.jar-Xmx。因为JVM消耗的内存不仅仅是Heap,因为

JVM = Heap + Method Area + Constant Pool + Thread Stack * num of thread,所以Xmx的值必须要小于镜像上限内存,如图:

修改最大堆后参数后可以观察一段时间看下问题还会不会出现

其他JVM参数详解和调优可以参考 https://www.cnblogs.com/anyehome/p/9071619.html

分析内存中最大对象

- dump堆

当OLD区域占用大于90%的时候把内存dump下来然后用MemoryAnalyzer分析

命令 :jmap -dump:live,format=b,file=<自定义dump文件名> < 进程pid>

例如:jmap -dump:live,format=b,file=demo.dump 16733其他

jmap,jstat,jstack命令详细使用方式可参考https://blog.csdn.net/m0_38110132/article/details/79652962 - JVM出现 OOME 时生成堆 dump配置

-XX:+HeapDumpOnOutOfMemoryError - JVM出现 OOME 时生成堆文件路径配置

-XX:HeapDumpPath=/jvmlogs/

参考

1. journalctl 命令

2. Linux中buff/cache内存占用过高解决办法

3. 缓存查看利器hcache

4. linux 程序被Killed,如何精准查看日志

5. Docker 中 JVM 内存占用问题

6. linux下jmap,jstat和jstack使用

7. Linux服务器Cache占用过多内存导致系统内存不足最终java应用程序崩溃解决方案

8. https://github.com/silenceshell/hcache

9. JVM–参数调优

更多推荐

已为社区贡献21条内容

已为社区贡献21条内容

所有评论(0)